On 12 September 1958, Jack Kilby demonstrated the integrated circuit for the first time.

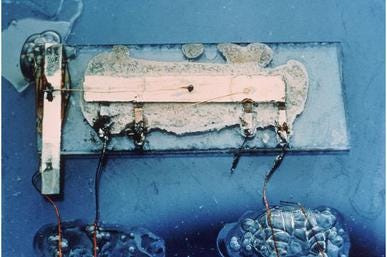

It was an unassuming object. A seeming hodge-podge of germanium, aluminium, gold wires and glue. A simple oscillator circuit with a single transistor, a capacitor and three resistors.

But staff at Texas Instruments, where Kilby had been employed for just a few months, understood some of its significance. The head of semiconductors at TI made sure he was in the room when Kilby’s new device was first switched on.

And it worked. The ‘chip’ was born.

A few months later, Robert Noyce at Fairchild would develop a more elegant solution, based on the ‘planar process’ invented by Jean Hoerni. Noyce’s ‘monolithic integrated circuit’ would allow more and more, smaller and smaller components to be made at the same time, on a single piece of silicon.

The ‘tyranny of numbers’ was over. Transistors could be made smaller, but a big problem had remained: how to avoid the need to solder, by hand, the intricate connections between them. The integrated circuit solved that problem.

In the middle of the integrated circuit’s seventh decade, the era of Moore’s Law has come to an end, and scaling of the components on the most advanced integrated circuits has slowed.

But we can now make ‘chips’ with tens of billions of transistors. And while scaling has slowed, it has not stopped, as engineers continue to find ways to build circuits that are ever smaller and more efficient, just as Kilby and Noyce did 65 years ago.

And, in 2023, it feels like a new era has begun. To echo David Patterson, we may be entering a new ‘golden age’, not just for computer architecture but also for the power of the applications that run on it.

The integrated circuit has changed the world over the last 65 years. It seems possible that it might bring even more profound change over the next 65.

Happy birthday, IC.