Jensen Groks Groq

On Nvidia's fascinating 'acqui-something' of AI chip startup Groq

Is this the deal that launched a million blog posts? It certainly feels like a million and, initially, I didn’t want to make it a million and one, but … this Substack is passionately interested in computer architecture and, for reasons that I’ll explain later, this feels like a significant moment in the history of computer architecture.

Plus, now that things have settled down a little after the initial wave of excitement and puzzlement about the deal - see the quote from Irrational Analysis below - so it might be a good point to take stock and to share a distinctive perspective on the deal.

We’ll start with some Questions and Answers on Groq and the ‘deal’ between Groq and Nvidia.

What is Groq?

Startup founded in 2016 by members of Google’s original TPU team including CEO Jonathan Ross;

Total publicly reported funding of more than $1.4bn including most recently $750m at a $6.9bn valuation in September 2025 (note that some reports put total funding at $3.3bn);

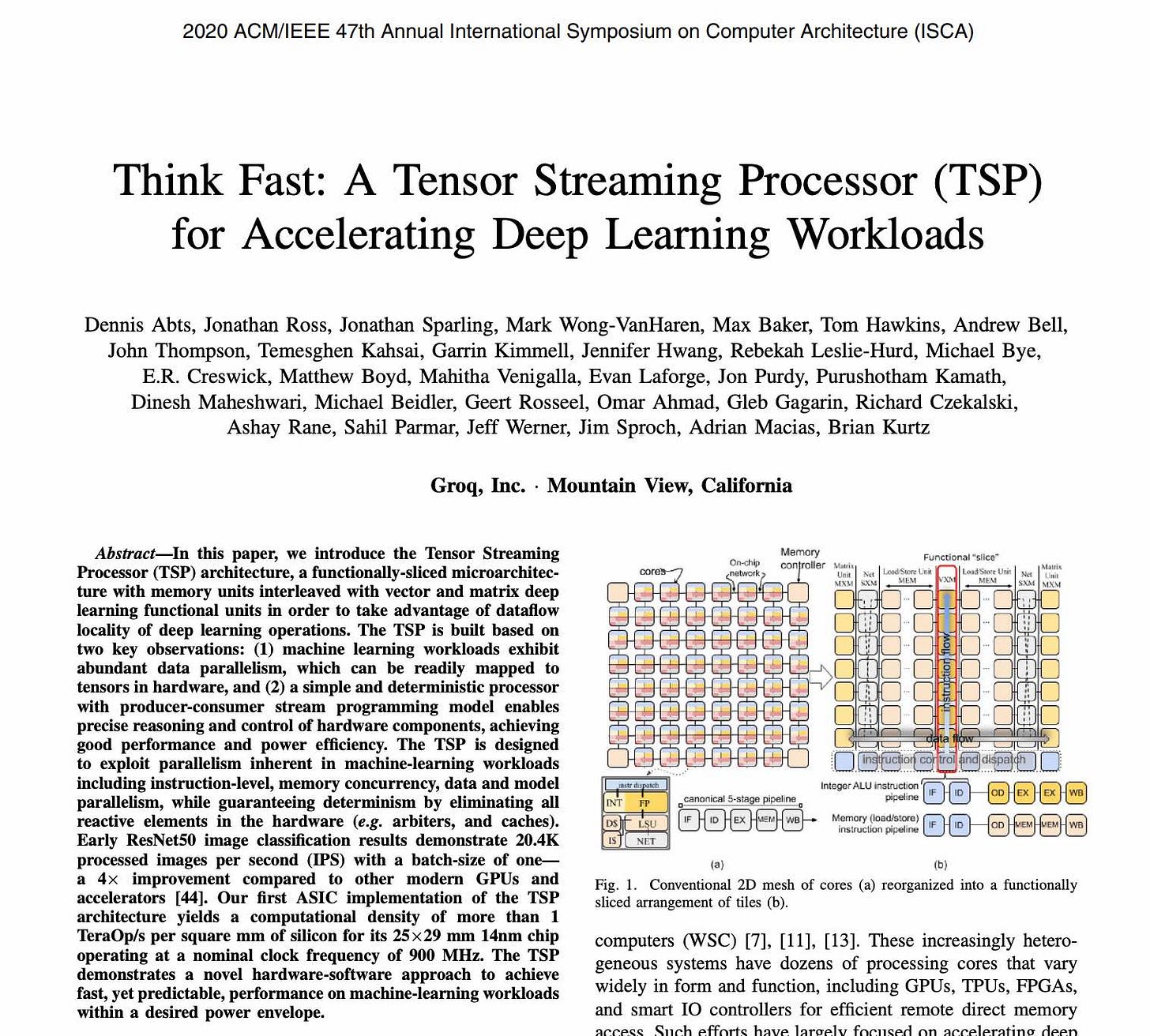

Groq created chips that it calls Language Processing Units (LPUs - originally Tensor Streaming Processors or TSPs);

LPUs are designed for AI inference only;

From 2024 Groq stopped selling its chips to third parties; instead it focused on:

partnering with others - notably in Saudi Arabia - to create datacenter scale deployments;

offering inference as a service to developers via what it calls GroqCloud;

For more on the LPU Groq has provides a good overview.

I’ve kept this summary brief. There are lots of links with further reading at the end of this post.

What makes Groq distinctive?

Apart from its business model Groq’s hardware is distinctive in that it can serve results (much) faster than competing hardware for a given cost.

SemiAnalysis did a great comparison of Groq’s first systems with the then current Nvidia alternatives back in 2024, concluding that:

Groq has a genuinely amazing performance advantage for an individual sequence. This could enable techniques such as chain of thought to be far more usable in the real world. Furthermore, as AI systems become autonomous, output speeds of LLMs need to be higher for applications such as agents. … . These services may not even be viable or usable for end market customers if the latency is too high.

This has led to an immense amount of hype regarding Groq’s hardware and inference service being revolutionary for the AI industry.

Groq achieves this speed by loading all the parameters it needs into Static RAM (SRAM) on LPUs. Each LPU can only hold a limited amount of SRAM and so models usually need lots (hundreds) of LPUs networked together.

This also means that LPUs don’t depend on access to expensive and scarce High Bandwidth Memory.

If you want to dive straight into Groq’s technology in detail then here is a 2020 paper from the Groq team.

Finally, Groq’s hardware is made in the US with its latest (second generation) LPU made by Samsung in Texas:

Groq on Sunday announced that it has chosen the South Korean chip giant as its “next-gen silicon partner,” and the chips, its new AI chips, will be manufactured on the 4-nanometer (nm) process, or the SF4X process, at Samsung’s new chip factory under construction in Taylor, Texas.

So Groq, despite its roots, is not just another version of Google’s TPU.

What is the deal?

Groq announced the deal in a minimal press release, reproduced in full below:

Today, Groq announced that it has entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology. The agreement reflects a shared focus on expanding access to high-performance, low cost inference.

As part of this agreement, Jonathan Ross, Groq’s Founder, Sunny Madra, Groq’s President, and other members of the Groq team will join Nvidia to help advance and scale the licensed technology.

Groq will continue to operate as an independent company with Simon Edwards stepping into the role of Chief Executive Officer.

GroqCloud will continue to operate without interruption.

Nvidia has been publicly silent on the deal, but an internal email to staff has made its way to the Financial Times, with Nvidia CEO Jensen Huang quoted as saying:

“We plan to integrate Groq’s low-latency processors into the Nvidia AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads.”

Nvidia isn’t buying Groq to end development of its technology.

In addition more detail about the deal has subsequently emerged. Notably (per Axios):

Around 90% of Groq employees [over 400] are said to be joining Nvidia, and they will be paid cash for all vested shares. Their unvested shares will be paid out at the $20 billion valuation, but via Nvidia stock that vests on a schedule.

The bottom line: Everyone gets paid. A lot.