Learning Assembly for Fun, Performance and Profit

Why take an interest in assembly language and which to learn?

(computer languages) A programming language in which the source code of programs is composed of mnemonic instructions, each of which corresponds directly to a machine instruction for a particular processor.

A skilled programmer can write very fast code in assembly language.

Source Wiktionary

If you can't do it in FORTRAN, do it in assembly language. If you can't do it in assembly language, it isn't worth doing.

Real programmers don’t use Pascal - Ed Post

Low-level languages have been in the news recently. Use of Nvidia’s ptx has been revealed as part of DeepSeek’s ‘secret sauce’. And there is still plenty of interest in learning assembly language. A recent Substack post advocating learning assembly language for the venerable, but well loved, 6502 as a first step garnered over 240 ‘upvotes’ and more than 290 comments on Hacker News.

This continuing interest might seem a little counterintuitive. Programming in assembly language can be hard, error prone and unproductive. Isn’t the trend to move over time to ever higher level and more abstract programming languages? We can generate code with nothing more than a ‘natural language’ prompt handed to a Large Language Model, so why would anyone bother with assembly, other than the tiny number of dedicated individuals who do essential work on operating systems and compilers?

The ‘evergreen’ answer to this is that understanding assembly language is essential if you really want to understand how a computer works. It might - just - be possible to grasp in the abstract that a computer executes instructions one after another and to have a sense of what those instructions do. Having a concrete example of the set of instructions that a real computer uses in practice is much more useful though.

And although only a very small number of people will ever need to write assembly, many more will need to read it, usually in the course of debugging code written in a compiled higher-level language. Hence the popularity of Matt Godbolt’s wonderful Compiler Explorer which gives a readily accessible way of viewing the assembly language generated by a range of compilers.

What about DeepSeek’s use of ptx? To clarify, ptx isn’t an assembly language. Rather, it’s an intermediate language that is translated to the assembly language (known as SASS) for the actual Nvidia GPU that the ptx code will be running on. Use of ptx rather than SASS allows code to run across a wide range of Nvidia architectures. However, ptx is still a very low level language, much closer to assembly than a language like C.

We know that the ptx code in DeepSeek’s training model was being used to help accelerate networking operations. We don’t know, however, precisely why it was necessary to drop down to a lower level than CUDA’s C-like language.

I think that there are good reasons why we might have expected some use of assembly or other low level language as the roll-out of LLMs continues. The stakes for leading AI companies (and for the US and China) are enormous. Hundreds of billions of dollars are being spent on GPUs and associated data-center hardware. Getting the best performance out of that hardware is potentially worth billions of dollars. The use of ptx gives a fine-grained level of control over those GPUs that, in some cases, may not be possible using a higher level language. It shouldn’t be a surprise that at least one firm has used this extra control - difficult though it may be - to help gain an advantage.

I’d like to return to ptx in a later post, but hopefully all this has convinced you that, even in the era of LLMs, there is still value in learning, at least a little, assembly language.

Back in 2023, I asked readers whether they had used assembly language. A large majority of those who responded had.

This leaves the question, though: if you haven’t used assembly language and are ‘assembly curious’, which one should you learn? In the main this is about selecting a computer architecture. There are alternative assembly languages (and assemblers) for some architectures but the key choice is the architecture itself.

There are probably hundreds of architectures that have gained a degree of adoption over the years. Each has taken a subtly different approach and each has its own strengths, weaknesses and quirks.

So if you’re learning assembly for the first time, or perhaps want to refresh your existing assembly skills on a new architecture, then which architecture to choose? In the rest of this post we’ll narrow down the field by considering just seven options.

Note that they are all CPUs, as the common GPU options, for example, are much more complex and less accessible.

This is a free post so please feel free to share.

The Contenders

I’m going to group the options into two groups: Retro and Modern (or, subjectively, more fun or more useful):

Retro (more fun): 6502, Z80, 8086, 68000

Modern (more useful): x86-64, ARM (64-bit), RISC-V

Why these choices? In large part because each has to have readily accessible tools and other materials to support learning the architecture.

Before we consider these in more detail, a few words about three architectures that just failed to make the cut.

Motorola’s 6809 : This was possibly the most sophisticated 8-bit architecture but had much more limited adoption than its competitors.

Early ARM : The early ARM architectures were elegant and innovative but included features that have now been dropped from mainstream designs.

Modern 32-bit ARM : This was the most difficult omission. However, there are probably fewer platforms - either real hardware or emulated - on which to write code and so it fails to make the cut.

The Retro and Modern groups of architectures and assembly languages each have distinct pros and cons:

Retro

These are architectures that were important in the past but are no longer being used in mainstream user-programmable systems.

Pros:

Simpler architectures than their modern counterparts and so easier to learn.

Lots of emulators readily available, including online.

Some amazing learning resources, both historic and more recent.

Fun for programming retro systems.

Cons:

Less useful if you want to progress to assembly on modern machines.

Typically harder to use them to program any given task.

Modern

These are architectures that are being shipped today in mainstream server, desktop, laptop and single board computer systems (like the Raspberry Pi).

Pros:

You may well have access to real hardware running one of these architectures.

Give a more realistic understanding of how modern computers work.

Useful when debugging modern high level compiled languages.

Cons:

Complex architectures can make them daunting for beginners.

8086 and x86-64 are sometimes considered together as a single architecture (called simply x86). Modern Intel and AMD chips can usually run both and although they have some things in common (x86-64 is a descendant of 8086) they are really quite different. For this reason, in the rest of this post, we will consider them separately.

I need to emphasise that with the more recent architectures, I’m not suggesting that most readers should learn all of the ISA. Modern ISAs are really too complex (even the ones that claim to be RISCy) to be worth learning in full for anyone who isn’t either a compiler writer or someone with too much time on their hands. However, it should still be possible to learn a useful subset for educational purposes.

I’ll be rating each architecture and corresponding assembly language as follows:

Learning Material : ⭐️⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️

Ease of use. : ⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️⭐️⭐️

Usefulness. : ⭐️

Total : 18 ⭐️

By way of explanation of each rating:

Learning material : The quality of the (free) material available online

Learning Curve : How easy is it to get started

Ease of use : How easy is it to write real-world programs

Accessibility : How easy is it to get hold of a platform to run code

Fun : Somewhat subjective, but essentially how easy is it to write simple games?

Usefulness : How useful is it for more serious work - e.g. debugging modern programs written in a high-level language.

None of what follows is meant to be an assessment of the quality of each architecture, for example on performance grounds, but instead an attempt to consider each from an educational perspective. There isn’t space to consider each architecture, and its strengths and weaknesses, in great detail so what follows is necessarily both high level and highly subjective.

So, with that said, let’s move on to consideration of each individual architecture.

Retro

6502

8-bit with 64 KByte Address Space

History

The 6502 was created by the team at MOS Technology in 1975.

It was chosen as the CPU in the Apple II, Commodore 8-bit computers including the PET, VIC-20 and 64, the Atari 400 and 800 and the BBC Micro. It was selected in each case for its combination of low cost and high performance when compared to competing designs.

Variants of the 6502 were later used in games consoles such as the Nintendo NES and in (probably billions of) microcontrollers. You can still buy later versions of 6502 compatible chips today.

Architecture

The 6502 has a minimalist architecture that makes the most of the limited transistor budget (4,528) that the designers gave themselves.

It has a small number of 8-bit registers (A,X,Y,SP) plus a 16-bit program counter.

All maths operations are 8-bit, so no 16-bit addition for example.

It compensates for these limitations by

Giving fast access to the first 256 bytes of memory (known as zero page).

Having some sophisticated addressing modes

e.g. Indirect Indexed, such as LDA ($30),Y, which loads A with the contents of the memory address derived by adding Y to the 16-bit address held in memory at locations $30 and $31.

These features enable the 6502 to use the first 256 bytes in memory as a large set of ‘pseudo registers’.
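To make the LDA ($30),Y example a little more concrete, here is a rough Python sketch (not 6502 code) that models memory as a 64 KByte byte array and works out which byte would be loaded. The function name and the sample values poked into memory are made up purely for illustration.

```python
# Toy model of the 6502's 64 KByte address space.
memory = bytearray(0x10000)

def lda_indirect_indexed(zp_addr: int, y: int) -> int:
    """Model LDA (zp),Y: read a 16-bit pointer from zero page, add Y, load a byte."""
    lo = memory[zp_addr & 0xFF]            # low byte of the pointer
    hi = memory[(zp_addr + 1) & 0xFF]      # high byte (wraps within zero page)
    base = (hi << 8) | lo                  # pointer is stored low byte first
    effective = (base + y) & 0xFFFF        # add Y to form the final address
    return memory[effective]

# Hypothetical data: the pointer at $30/$31 holds $2000.
memory[0x30] = 0x00
memory[0x31] = 0x20
memory[0x2005] = 0x42

a = lda_indirect_indexed(0x30, y=5)        # reads the byte at $2000 + 5
print(hex(a))
```

The two zero page bytes act like a 16-bit pointer register, which is what makes the ‘pseudo register’ view of zero page work.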

Assessment

In the right hands the 6502 was a great CPU for its era. There were some remarkable feats of programming skill performed in 6502 assembly language. Just one example is the game Elite, first written for the BBC Micro, with exquisitely documented source code online.

However, the 6502 is a great example of simple not equalling easy, at least as far as assembly language programming is concerned. Even something as simple as a 16-bit integer addition needs multiple loads and stores from memory coupled with two 8-bit addition instructions.
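As a sketch of what that involves, the Python below mimics the usual pattern of adding the low bytes first and then feeding the carry into the addition of the high bytes. It is a model of the idea rather than real 6502 code, and the helper names adc8 and add16 are my own.

```python
def adc8(a: int, operand: int, carry_in: int) -> tuple[int, int]:
    """Model an 8-bit add-with-carry: returns (8-bit result, carry out)."""
    total = a + operand + carry_in
    return total & 0xFF, total >> 8

def add16(x: int, y: int) -> int:
    """Add two 16-bit values one byte at a time, low byte first."""
    lo, carry = adc8(x & 0xFF, y & 0xFF, 0)             # low bytes, carry cleared first
    hi, _ = adc8((x >> 8) & 0xFF, (y >> 8) & 0xFF, carry)  # high bytes plus the carry
    return (hi << 8) | lo

result = add16(0x12FF, 0x0001)
print(hex(result))  # 0x1300
```

On a real 6502 each half also needs its operands loaded from, and its result stored back to, memory, which is where the extra instructions come from.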

Ratings

Learning Material : ⭐️⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️

Ease of use. : ⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️⭐️⭐️

Usefulness. : ⭐️

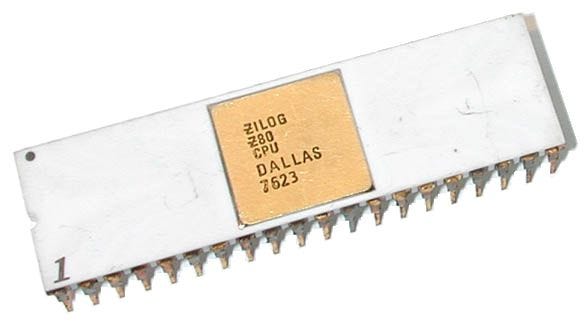

Total : 18 ⭐️

Z80

8-bit with 64 KByte Address Space

Full disclosure: Z80 was the first assembly language I learned so I may be biased!

History

The Z80 was created by the team at Zilog - especially Masatoshi Shima - working under the direction of Federico Faggin.

The Z80 was the choice for a generation of business computers running the CP/M operating system in the 1970s and 1980s. It was also used in many popular home computers such as the Sinclair ZX81 and ZX Spectrum and the TRS-80.

It is backwards compatible with Intel’s 8080 and shares some features with the 8086 used in the original IBM PC (so the Z80 is sort of the uncle of Intel’s 8086).

Architecture

The Z80 is more complex than the 6502 as Faggin and Shima had a bigger transistor budget (more than 8500). That means:

More registers: A,B,C,D,E,H,L (all 8-bit) and IX,IY,SP (16-bit). Pairs of 8-bit registers can sometimes be combined into a single 16-bit register (B and C become BC).

Wider range of instructions:

16-bit arithmetic: ADD HL,BC

Complex instructions such as DJNZ, which subtracts 1 from the B register and then jumps or not depending on whether the result is zero.
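A small Python model may help show what these features buy you. It is only a sketch: the function names are invented for the example, and it ignores flags and timing entirely. It assumes the standard Z80 behaviour that DJNZ decrements B and jumps while B is not zero.

```python
def pair(high: int, low: int) -> int:
    """Combine two 8-bit registers into a 16-bit pair, e.g. B and C into BC."""
    return ((high & 0xFF) << 8) | (low & 0xFF)

def add_hl_bc(h: int, l: int, b: int, c: int) -> tuple[int, int]:
    """Model ADD HL,BC: one 16-bit addition, result split back into H and L."""
    hl = (pair(h, l) + pair(b, c)) & 0xFFFF
    return hl >> 8, hl & 0xFF

def djnz_loop(b: int) -> int:
    """Model a loop closed by DJNZ and return how many times the body runs."""
    iterations = 0
    while True:
        iterations += 1        # the loop body would go here
        b = (b - 1) & 0xFF     # DJNZ decrements B...
        if b == 0:             # ...and falls through once B reaches zero
            break
    return iterations

print(add_hl_bc(0x12, 0xFF, 0x00, 0x01))  # (19, 0), i.e. HL = $1300
print(djnz_loop(3))                       # 3
```

Note that starting with B set to 0 gives 256 iterations, because the decrement wraps round to 255 before the test.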

Assessment

The Z80 could be slower than the 6502 despite its greater resources, but it was generally ‘easier’ to program. You could put a full 16-bit memory address into a register and perform 16-bit arithmetic on it, in contrast to the 6502 which needed to put 16-bit memory addresses in ‘zero-page’. A 16-bit addition can be performed with a single instruction.

Ratings

Learning Material : ⭐️⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️

Ease of use. : ⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️⭐️

Usefulness. : ⭐️⭐️⭐️

Total : 21 ⭐️

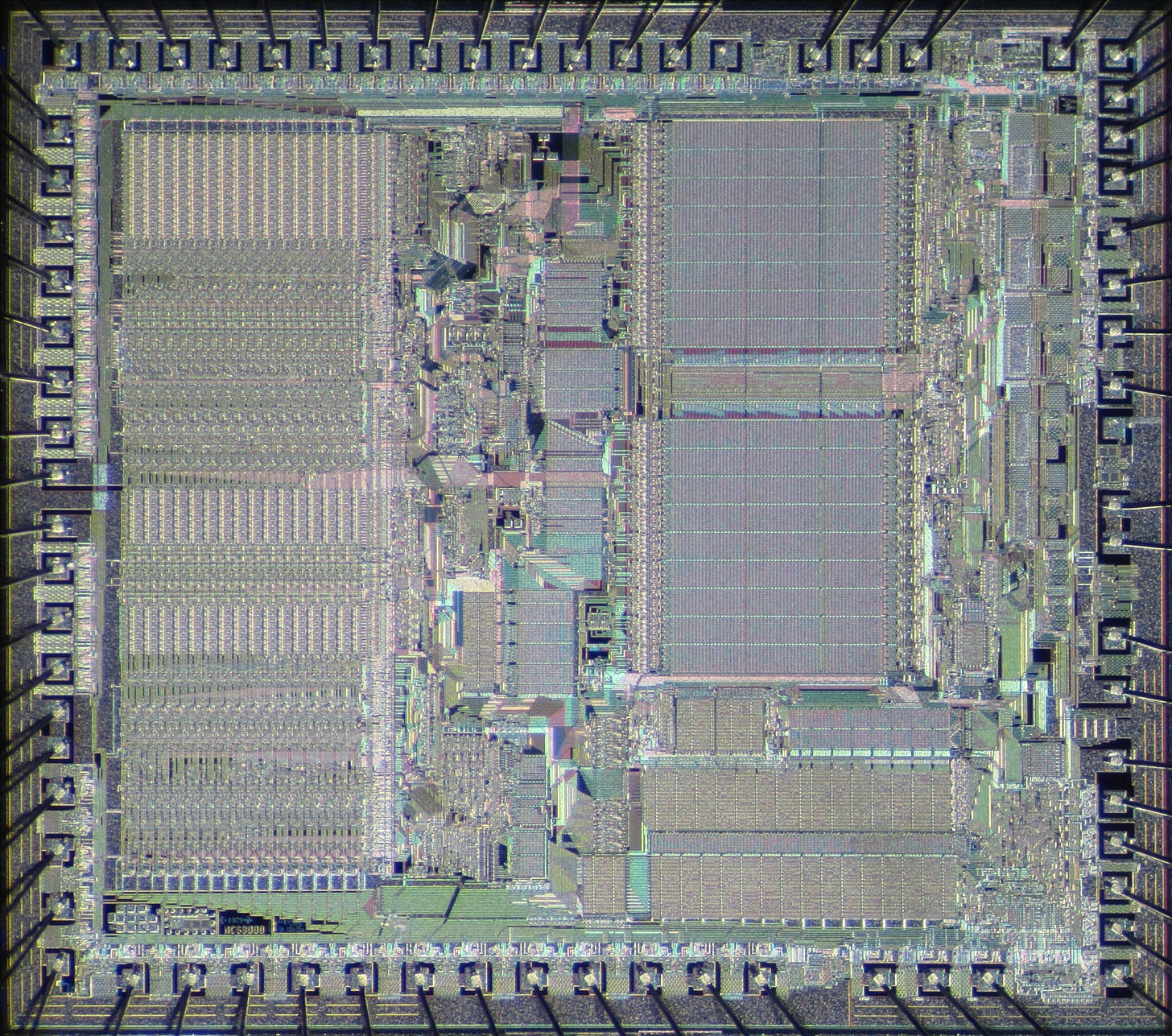

8086

8/16-bit with 1 MByte Address Space

History

The 8086 was famously created by a small team at Intel in the mid 1970s as a ‘stop-gap’ before the mighty iAPX432 ‘micro-mainframe’ was ready. The iAPX432 was a commercial disaster, but IBM chose the 8088, the cheaper 8-bit bus version of the 8086, for the first IBM PC and the rest is history. Intel became half of the ‘Wintel’ duo that has dominated the desktop and laptop markets for more than four decades.

Architecture

The 8086 has much in common with the Z80 as both share heritage in Intel’s 8080.

The 8086 provided several upgrades when compared to the 8080:

1 megabyte address range (but split into 64K byte segments)

Expanded set of registers and instruction set.
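The segmented address range is easier to see with a worked example. The sketch below assumes the standard 8086 rule that the 20-bit physical address is the 16-bit segment value multiplied by 16 plus the 16-bit offset. The function name is my own.

```python
def physical_address(segment: int, offset: int) -> int:
    """8086 addressing: segment * 16 + offset, wrapped to 20 bits (1 MByte)."""
    return (((segment & 0xFFFF) << 4) + (offset & 0xFFFF)) & 0xFFFFF

# Two different segment:offset pairs can name the same byte of memory.
print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0x1235, 0x0000)))  # 0x12350
```

Each segment value opens a 64 KByte window onto the 1 MByte range, and the windows overlap every 16 bytes, which is one of the quirks a beginner has to get used to.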

Assessment

The 8086 was a useful 16-bit backwards (source code) compatible successor to the 8080 (and Z80). It soon became almost ubiquitous due to the success of the IBM PC and clones.

There is more to learn than for the Z80 but 8086 has the advantage that you can run 8086 code on modern Intel hardware in addition to using a wide range of emulators.

Ratings

Learning Material : ⭐️⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️

Ease of use. : ⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️⭐️

Usefulness. : ⭐️⭐️⭐️

Total : 21 ⭐️

68000

16/32-bit with 16 MByte Address Space

History

The 68000 was created by Motorola in the late 1970s. It became the CPU of choice for the first generation of computers with WIMP (Windows, Icons, Menus, Pointer) user interfaces such as the first Macintosh, Atari ST and the Commodore Amiga.

Architecture

The 68000’s ISA was a significant upgrade on the earlier 8-bit and 8/16-bit systems (such as the 8086). It brought:

lots of 32-bit registers (8 for data, and 7 for addresses)

32-bit arithmetic

more complex addressing modes, such as:

These complex addressing modes are one of the reasons why the 68000 is regarded as one of the more CISCy of the ISAs of the 1980s.

Assessment

The 68000 is in most respects a much more sophisticated architecture than the other Retro options. It offers 32-bit arithmetic and a much larger number of registers and this makes it closer in spirit in many ways to Modern architectures.

However, there is a smaller selection of learning resources available for it than for the other Retro alternatives. Unlike the 8086, the 68000 was also an architectural dead end, so learning the 68000 doesn’t provide a direct upgrade path to a modern architecture.

Ratings

Learning Material : ⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️⭐️

Ease of use. : ⭐️⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️

Usefulness. : ⭐️⭐️

Total : 19 ⭐️

Retro Winner

Let’s first discount the losers.

6502

I have to disagree with Nemanja Trifunovic and say that I think the 6502 is the least suitable ISA on the whole list on which to learn assembly language programming. It was a clever design but it’s not an easy CPU for beginners to start with and it doesn’t provide an easy upgrade path to more sophisticated designs.

Recommended only for retro games enthusiasts.

68000

In some ways the 68000 represents a halfway house between the retro and modern architectures. It has an easier to use and more modern architecture than the 8 or 8/16 bit alternatives whilst avoiding the complexity of the modern ISAs.

However, this ‘in-between’ status also means that it fails to be ideal on any of these counts.

Recommended only for retro Atari and Amiga enthusiasts.

That leaves the Z80 and the 8086.

8086

The 8086 is unique among the retro systems here in that you can buy a mainstream desktop system that runs 8086 code directly. It also provides a stepping stone towards modern x86-64 should you want to progress to programming a modern architecture.

Z80

The Z80, the 8086’s uncle, has a lot in common with the Intel design but is a little simpler at the cost of not having modern hardware to run Z80 code.

So the winners are:

🏆 Z80 and 8086

It’s a tie, with the Z80 and 8086 as joint first!

(If you have to push me I’d say the 8086, but somewhat grudgingly).

That’s it for the retro ISAs. Now on to the modern options.

Modern

All the architectures that follow are 64-bit (they have 64-bit registers and 64-bit addresses) and have very large - many gigabyte - address spaces.

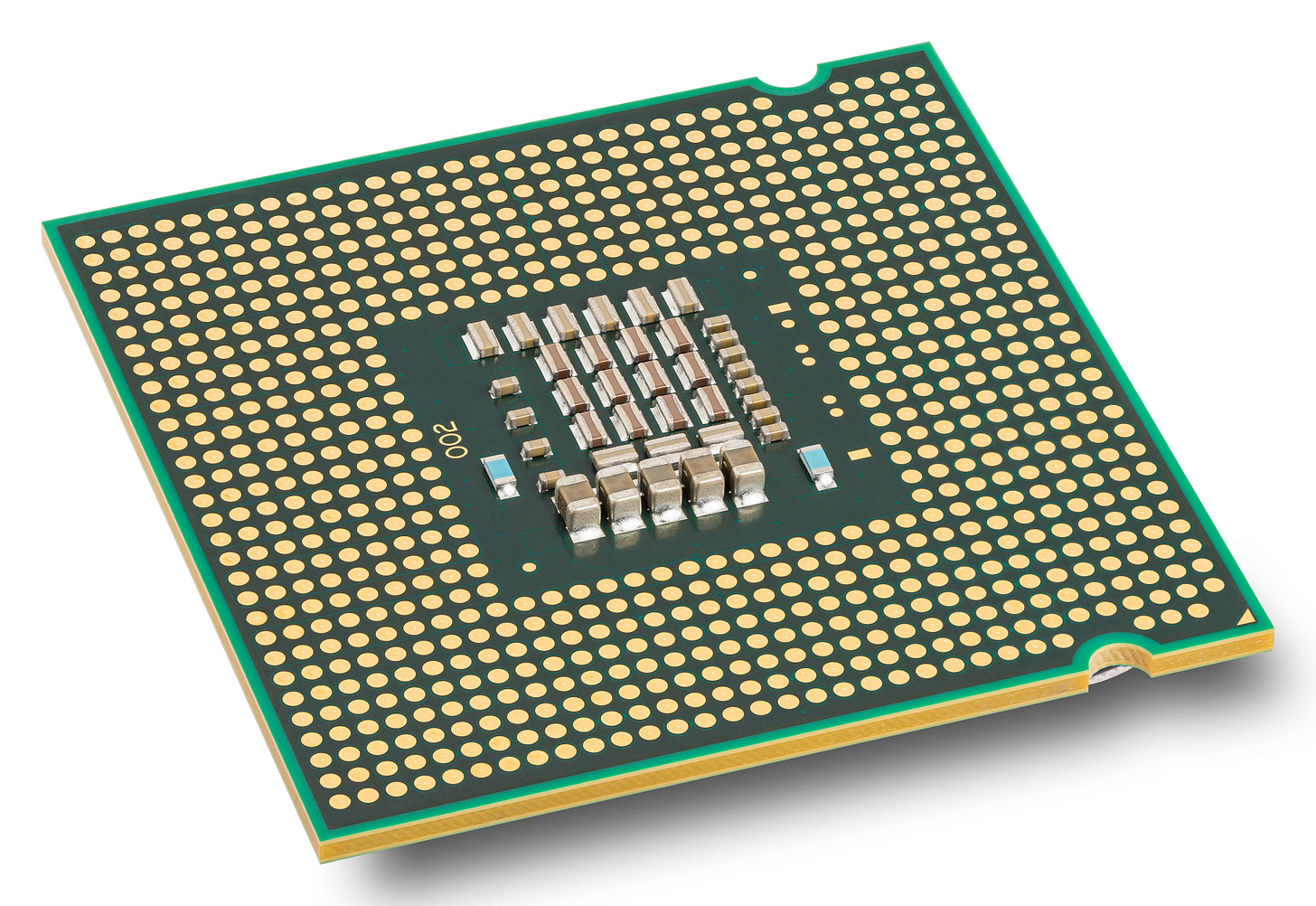

x86-64

History

This is the AMD developed 64-bit version of the x86 architecture that started with Intel’s 8086. Today both Intel and AMD use it for almost all of their modern CPUs. If you’re reading this on a (non-Apple) desktop or laptop it’s almost certain that it’s running x86-64 code.

Architecture

x86-64 is the great … (insert many greats) … grandson of the 8086. In between there have been the 80286, 80386, 80486, Pentiums (I-IV) and Cores, plus lots of AMD designs, with (seemingly) countless extensions to the architecture.

The range of extensions is both a blessing and a curse. It’s a blessing, as it gives the opportunity to learn about just about every architectural feature that has been added to mainstream architectures over the last fifty years.

The curse is complexity. Sometimes features have been added multiple times whilst leaving original implementations in place. This makes x86-64 potentially a daunting prospect for the beginner. To give an approximate indication of the complexity that this has led to, the combined Software Development Manuals for the 32-bit and 64-bit x86 architectures weigh in at a remarkable 5,060 pages.

Assessment

The x86-64 is probably the architecture that the majority of readers have access to through their desktop / laptop computer. That would seemingly make it a natural choice. However, x86-64 is the most complex of all the instruction sets we’ll consider - often unnecessarily so - and this makes it a thoroughly daunting prospect for anyone who wants to learn a little assembly.

It’s also true that x86 is on a slow path of decline. I expect that it will still be around for decades to come, but it has to be acknowledged that it is losing ground to Arm and probably quite soon to RISC-V. As a result it loses a ⭐️ on its usefulness rating.

Ratings

Learning Material : ⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️

Ease of use. : ⭐️⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️⭐️

Fun : ⭐️⭐️

Usefulness. : ⭐️⭐️⭐️⭐️

Total : 20 ⭐️

ARM64

History

I need to be clear that here we’re discussing the 64-bit version of the Arm architecture announced in 2011. It’s almost certain that your smartphone is running ARM64 code.

Architecture

64-bit Arm represents a break with earlier 32-bit versions in many ways beyond just increasing the size of registers and address space. Several features that made the original ARM architecture distinctive have been dropped.

Assessment

In many respects ARM64 is a great architecture to learn as a first assembly language. It’s modern, with all the features that one would expect in a modern ISA, without most of the cruft that has accumulated over decades on x86-64. Hardware, for example single board computers like the Raspberry Pi, is readily available and affordable and it can be emulated on more powerful x86-64 systems.

The main drawback is the lack of really accessible learning material, as there is much less available than for x86-64.

Ratings

Learning Material : ⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️

Ease of use. : ⭐️⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️

Usefulness. : ⭐️⭐️⭐️⭐️

Total : 19 ⭐️

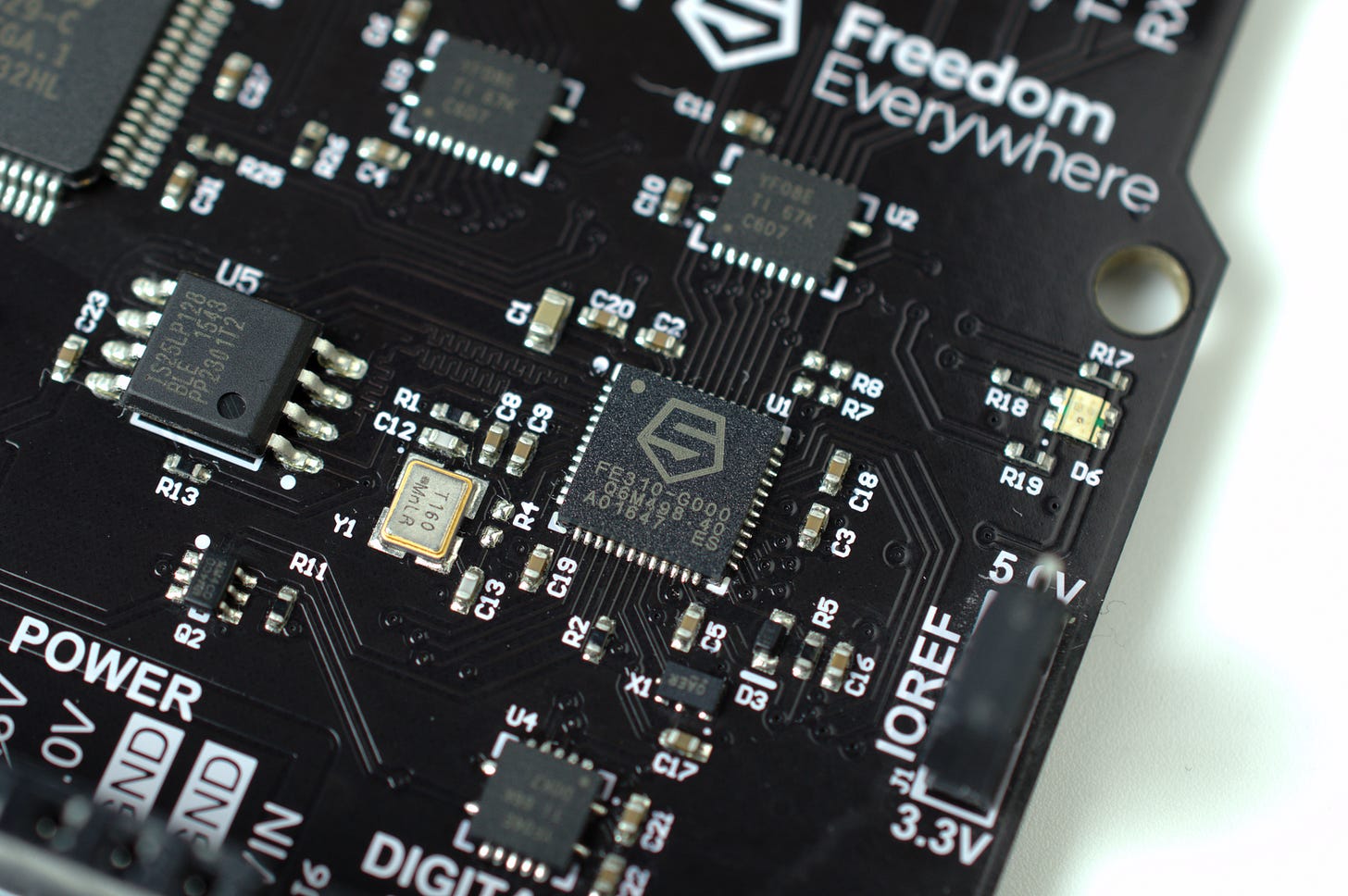

RISC-V

History

RISC-V originated from work done at UC Berkeley with the first proposals published in 2011. Since then it has spawned the RISC-V foundation and lots of implementations in hardware ranging from microcontrollers to servers.

RISC-V builds on the original RISC principles developed by David Patterson at UC Berkeley at the start of the 1980s. Crucially, RISC-V is an open ISA that can be implemented and extended without fees or permission (or worrying about patent infringement).

Architecture

RISC-V isn’t really a single instruction set but rather a collection of - sometimes quite different - instruction sets with a common core.

The most fundamental split within this collection of instruction sets is whether they relate to the 32-bit (RV32 with 32-bit wide integer registers and address space) RISC-V architecture or its 64-bit (RV64 with 64-bit wide integer registers and address space) counterpart.

These two sets of instruction sets are aimed at different applications. 32-bit is largely intended for microcontrollers, where it has already gained a lot of traction. 64-bit is intended for everything from single board computers, through smartphones, desktops and servers, where adoption has been slower.

32-bit and 64-bit share a common core of simple integer instructions to which the designer can add a wide range of official instruction set extensions.

RISC-V’s core has a lot in common with the original Berkeley RISC-I architecture from 1981. It is opinionated in rejecting the addition of some more complex instructions that have been adopted by other - ostensibly RISC - architectures, such as ARM64.

Assessment

The architectural approach adopted by RISC-V has some important advantages from an educational perspective. It’s possible to learn the simple integer assembly core of 32-bit RISC-V using a simple emulator or on a cheap accessible microcontroller and then take that learning to more complex 64-bit designs.

It does bring some downsides though. Moving beyond the base instruction set requires the beginner to navigate the numerous and - to me at least - somewhat confusing range of extensions that are available.
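One way to get a feel for this is through the short strings, such as rv32imac, that are conventionally used to describe which parts of RISC-V a chip implements. The Python below is a deliberately incomplete sketch that decodes only a handful of the single-letter standard extensions. The table and function name are my own, and it makes no attempt to handle the multi-letter extensions or shorthand such as ‘g’.

```python
# Small, deliberately incomplete table of single-letter standard extensions.
EXTENSIONS = {
    "m": "integer multiply/divide",
    "a": "atomic instructions",
    "f": "single-precision floating point",
    "d": "double-precision floating point",
    "c": "compressed (16-bit) instructions",
    "v": "vector operations",
}

def describe_isa(isa: str) -> list[str]:
    """Break a simple ISA string such as 'rv32imac' into readable parts."""
    isa = isa.lower()
    # Only the RV32I / RV64I bases are handled in this sketch.
    if isa[:4] not in ("rv32", "rv64") or isa[4:5] != "i":
        raise ValueError(f"unsupported ISA string: {isa!r}")
    parts = [f"{isa[2:4]}-bit base integer instructions"]
    for letter in isa[5:]:
        parts.append(EXTENSIONS.get(letter, f"unknown extension '{letter}'"))
    return parts

for line in describe_isa("rv32imac"):
    print(line)
```

Even this toy version hints at the problem: the base is small, but a real system is described by a base plus a growing list of add-ons.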

Single board computers with RISC-V designs are available and affordable (although less common than ARM systems) and it can be emulated on more powerful x86-64 systems.

Ratings

Learning Material : ⭐️⭐️⭐️⭐️

Learning Curve. : ⭐️⭐️⭐️⭐️

Ease of use. : ⭐️⭐️⭐️⭐️

Accessibility. : ⭐️⭐️⭐️

Fun : ⭐️⭐️⭐️

Usefulness. : ⭐️⭐️⭐️

Total : 21 ⭐️

Modern Winner

Let’s first discount the losers again.

x86-64

x86-64 is just too complex to be a good ISA on which to first learn assembly language. It still retains many of the (sometimes quirky) features of the 8086 and has had decades of ‘additions’ layered on top.

It’s also the case that x86-64 is almost certainly in long-term decline as devices based on ARM64 gradually take market share from it on both desktops and on servers. I expect that it will be around for a long time but understanding x86’s quirks will become less useful over time.

One caveat to this. One learning path would be to learn 8086 as a stepping stone to later expanding your knowledge to x86-64, with which it has much in common.

Recommended if you are likely to be mainly focused on x86-64 systems in future.

ARM64

ARM64 is a much more ‘regular’ ISA with fewer quirks than x86-64. Hardware running ARM64 is readily accessible and affordable, chiefly as a result of Apple and the Raspberry Pi series of single board computers.

It’s still a substantial ISA though and learning a major part of ARM64 is a significant challenge.

I also found that there is less useful learning material available on ARM64 than the alternatives and what there is is less accessible for anyone starting out.

Recommended if you are likely to be mainly focused on ARM64 systems in future.

Which takes us to winner …

🏆 RISC-V

I don’t make this recommendation without quite a few misgivings.

Although RISC-V advocates often claim that the architecture is much simpler than the alternatives, by the time all the extensions required to create a modern system are added it’s still quite complex. Understanding what all the extensions are and what they do adds another layer of complexity for the beginner.

However, the ability to move from the simple integer core of RV32 to a much more sophisticated modern 64-bit application processor, means that RISC-V provides a unique path for someone learning assembly for the first time. The open nature of the architecture and the wide range of readily accessible tools are a nice bonus.

Overall Winner

The assessments above have led to a three-way tie between Z80, 8086 and RISC-V.

I debated for a while as to whether to declare an overall winner. In the end I decided that failing to do so would be ducking out. So the overall winner is:

🏆 RISC-V

Mainly because RISC-V is still developing and I’d expect to see it become more widely used in the future.

I hope this was useful (and a little fun). Please do share your thoughts in the comments below.

Just recently retired after programming solely in commercial settings for over 50 years, more than half as a compiler writer for DEC, Apollo Computer, HP, IBM and Mathworks. Of course I started in assembly. There was really nothing else available for building commercial applications. I cut my teeth on PDP-11 assembly, learning from instruction set manuals that DEC handed out for free. Though not perfectly uniform (in particular, no byte add nor byte subtract), the regularity and orthogonality of the architectural features made learning a breeze.

To this day, if I were asked to teach assembly language concepts, the PDP-11 would be my very first choice.

In my case, that choice is not based on any early love. Were I truly to follow my heart, I could pitch the ISP of Apollo Computer's DN10000, which I designed (patent US5051885A) and for which I led the compiler team. After HP bought Apollo, we retargetted our compiler's backend to generate code for PA-RISC and Intel i860 (though HP never shipped those products). Along the way, I did persuade HP to include multiple DN10000 instruction set features into the PA93, the 64-bit extension of PA-RISC.

In addition to the machines mentioned above, I have deep exposure to a bewildering collection of assembly languages. I have shipped commercial assembly code for DEC PDP-8, VAX, and Alpha, Motorola 68000 and 68020, IBM PowerPC (Motorola MPC5xx, IBM/AMCC 440), MIPS32, and Intel 8080 and 80x86. I further learned the assembly languages well enough to debug code on the DEC PDP-6 and -10, the PDP-9 and -15, the IBM 1130, many ARM variants, and even MIT's one-off PCP-1x during the hacker heyday :-).

Still, I stand by my position that the PDP-11 assembly language is a consummate teaching vehicle.

Getting to the point of being able to read and debug disassembled real code requires delving into the arcana of calling conventions. Not for the faint of heart!

Wow, what a highly debatable subject!

I think you will always have a soft spot for what you grew up with, and whilst I’ve done my fair share of Z80 and 8086 assembly, I’d always prefer my first 8 and 16 bit loves… 6502 and 68000.

But hey, I still love Pascal so what would I know!