McCulloch, Pitts, Neural Networks and the Origins of Modern Computing

How a 'wild-bearded, chain-smoking philosopher-poet' and a homeless, self-taught boy from Detroit helped lay the foundations of both the modern computer and AI

In today’s post we look at how some of the very earliest work on neural networks helped influence the development of the modern ‘stored program’ computer.

I got attached to this McCulloch and somewhere I wrote, maybe 100 years from now he will be seen as the greatest 20th century philosopher along with Russell and a few people like that. Marvin Minsky

It’s hard to escape the recent excitement around ChatGPT and other ‘generative’ AI models. These models seemingly have the potential to change our lives and to revolutionise businesses. Major firms are rushing to invest billions of dollars to develop increasingly sophisticated versions of these models and to build the technology into their products. Under the hood, these models all use neural networks, running on Graphics Processing Units or Tensor Processing Units.

But interest in neural networks is not new. In fact, it predates modern computing. The very earliest work on neural networks actually helped influence the development of the modern ‘stored program’ computer.

This early interest in neural networks shouldn’t come as a surprise. For researchers who wanted to understand how to build ‘mechanical’ intelligence, it was natural to turn to the brain and nervous systems of humans and other animals as a source of ideas and inspiration. But first, researchers needed to understand how these natural systems worked.

One key early discovery was the role of electricity in the nervous system, with important work by Luigi Galvani in the late 18th century and then by Emil du Bois-Reymond in the mid-19th century.

Then, crucially, Santiago Ramón y Cajal developed the ‘neuron doctrine’, the idea that the nervous system is made up of discrete individual cells. Ramón y Cajal shared the 1906 Nobel Prize in Physiology or Medicine for his work on the structure of the nervous system.

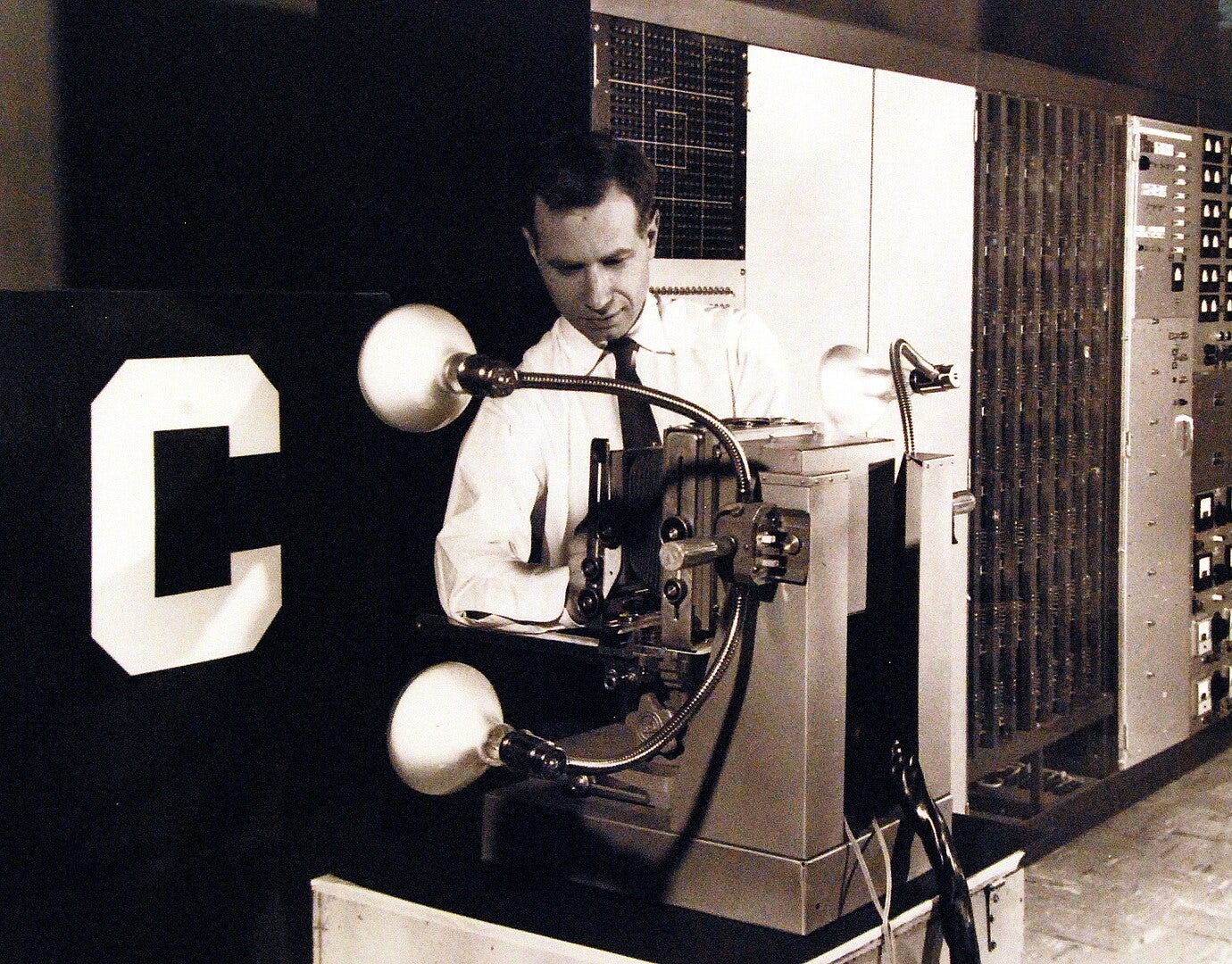

Incidentally, it’s interesting how crucial a role optics has played throughout the development of modern computers. We can see Ramón y Cajal with a Carl Zeiss microscope in the picture above. Today, Carl Zeiss optics, used in the lithography machines that make the most advanced chips, help to produce the hardware that powers modern artificial neural networks.

Following Ramón y Cajal’s work, scientific understanding of the brain and the nervous system continued to grow over the following decades, spawning a new scientific discipline, neurophysiology.

Warren McCulloch

Warren McCulloch was born in New Jersey in 1898. After studying philosophy and psychology at Yale, he went on to qualify in medicine, eventually working as a practising doctor with psychiatric patients in New York. He returned to Yale in 1934, working at the Laboratory for Neurophysiology until 1941, when he moved to Chicago, becoming professor of psychiatry and director of the Illinois Neuropsychiatric Institute.

Philosophy, psychiatry, neurophysiology. Even listing McCulloch’s diverse roles and studies doesn’t fully reflect the breadth of his interests. He wrote poetry. He designed buildings. And crucially, he maintained an interest in mathematical logic, particularly the work of Gottfried Leibniz and Bertrand Russell.

Amanda Gefter has described McCulloch as “a confident, gray-eyed, wild-bearded, chain-smoking philosopher-poet who lived on whiskey and ice cream and never went to bed before 4 a.m.” Marvin Minsky said that ‘all the world was a stage for him’. We are fortunate to have a video clip of an interview with McCulloch from 1969, shortly before his death.

McCulloch was an intuitive researcher. A man of ideas. But he sometimes lacked the skills to make the most of those ideas.

Then, in 1940, he was fortunate to meet someone whose skills could complement his own and help him turn some of his ideas into scientific breakthroughs.

Walter Pitts

Walter Pitts, just 17 when they met, was an unlikely scientific partner for a senior academic like McCulloch. But then Walter Pitts’s early career was just as unlikely.

Born in 1923 in Detroit, Pitts taught himself logic and mathematics along with several languages. He was reported to have locked himself in a library at the age of 12 to study Russell and Whitehead’s Principia Mathematica. Discovering some errors, he wrote to Russell, who offered him a place to study at Cambridge University in England.

Pitts declined the offer. He ran away from home at age 15, eventually finding a new home at the University of Chicago. He never registered as a student, but lived, studied and worked with leading figures there in a variety of fields, including logic and mathematical biology.

McCulloch and Pitts may have been an unlikely pair, but by the time they first met, they had two strong common interests: logic and biology.

Soon, McCulloch invited Pitts to stay with him and his wife at their home, a farm near Old Lyme in Connecticut. There, the two men would work late into the night.

“A Logical Calculus of the Ideas Immanent in Nervous Activity”

The paper that first made their collaboration famous grew out of one of McCulloch’s ideas. He had read a paper by Alan Turing describing a machine that could perform computations. With his knowledge of biology and logic, he was able to make a link between what he knew about the human brain and Turing’s ideas.

McCulloch had the intuition that the brain was a sort of machine. Neurons, joined in ‘neural networks’, would perform logical operations, and these operations could in turn be combined to carry out the computations needed to realise a ‘Turing machine’.

To demonstrate that this might be the case, the pair needed to strip the operation of physical neurons down to its basic essentials. They could then use these basic elements as building blocks to construct something that could perform the computations a Turing machine requires.

The result of the McCulloch and Pitts collaboration was the 1943 paper “A Logical Calculus of the Ideas Immanent in Nervous Activity”.

In this paper, the pair described how their basic building block, which has become known as the ‘McCulloch-Pitts neuron’, can be combined into ‘nets’ that carry out logical operations.
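A minimal Python sketch of such a unit, under simplifying assumptions of our own: inputs and outputs are 0 or 1, the unit fires when the number of active excitatory inputs reaches its threshold, and any active inhibitory input vetoes firing outright. The helper names (`mp_neuron`, `and_gate` and so on) are hypothetical, and the gates are textbook-style illustrations rather than figures reproduced from the 1943 paper.

```python
from itertools import product


def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 (fire) if at least `threshold` excitatory inputs are active
    and no inhibitory input is active; otherwise return 0."""
    if any(inhibitory):
        return 0  # absolute inhibition: one active inhibitory input blocks firing
    return 1 if sum(excitatory) >= threshold else 0


def and_gate(a, b):
    return mp_neuron([a, b], [], threshold=2)  # both inputs needed


def or_gate(a, b):
    return mp_neuron([a, b], [], threshold=1)  # either input suffices


def and_not_gate(a, b):
    return mp_neuron([a], [b], threshold=1)  # b wired to an inhibitory synapse


# Print the truth table: a, b, AND, OR, a AND NOT b
for a, b in product([0, 1], repeat=2):
    print(a, b, and_gate(a, b), or_gate(a, b), and_not_gate(a, b))
```

The only things that change between the three gates are the threshold and which inputs are wired as inhibitory, which is the sense in which a single kind of unit can serve as a general building block.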

The paper then goes on to link these ‘nets’ with Turing machines:

It is easily shown: first, that every net, if furnished with a tape, scanners connected to afferents, and suitable efferents to perform the necessary motor-operations, can compute only such numbers as can a Turing machine; second, that each of the latter numbers can be computed by such a net; …

It then draws a link between Turing’s work and psychology:

This is of interest as affording a psychological justification of the Turing definition of computability and its equivalents, Church’s λ-definability and Kleene’s primitive recursiveness: if any number can be computed by an organism, it is computable by these definitions, and conversely.

This “Logical Calculus” paper was widely read. Frank Rosenblatt used its ideas to build the ‘Mark I Perceptron’, a machine designed to perform image recognition.

Rosenblatt’s machine was controversial. Some claims made about it were exaggerated, and it had several limitations that remained unresolved until much later breakthroughs, such as the development of multi-layer networks trained using backpropagation.

Nonetheless, modern neural networks used in, for example, generative AI, can claim a direct lineage back to Rosenblatt’s and hence McCulloch and Pitts’s work.

But the “Logical Calculus” paper also had a much more rapid impact on the development of mainstream computing.

Von Neumann and the ‘First Draft’

As a result of his expertise in explosive charges, vital to the development of the atomic bomb, John von Neumann became involved in the Manhattan Project. He began to make frequent trips from his home in Princeton to the Los Alamos Laboratory in New Mexico.

Von Neumann used his travel time to Los Alamos to set down his ideas on the design of a computer. The result was the (incomplete) ‘First Draft of a Report on the EDVAC’.

This 101-page document was the “first published description of the logical design of a computer using the stored-program concept” and describes what has become known as the ‘von Neumann’ computer architecture.

Von Neumann had read Alan Turing’s work and found it useful, if incomplete. In particular, Turing didn’t provide any clues as to how to build an actual computer.

To develop a working computer design, von Neumann turned to the central nervous system and the neuron for inspiration.

Every digital computing device contains certain relay like elements, with discrete equilibria. Such an element has two or more distinct states in which it can exist indefinitely.

It is worth mentioning, that the neurons of the higher animals are definitely elements in the above sense. They have all-or-none character, that is two states: Quiescent and excited.

Von Neumann then cites the McCulloch and Pitts paper and its simplified model of the operation of neurons.

Following W.S. MacCulloch and W. Pitts ("A logical calculus of the ideas immanent in nervous activity," Bull. Math. Biophysics, Vol. 5 (1943), pp. 115-133) we ignore the more complicated aspects of neuron functioning: Thresholds, temporal summation, relative inhibition, changes of the threshold by after-effects of stimulation beyond the synaptic delay, etc. It is, however, convenient to consider occasionally neurons with fixed thresholds 2 and 3, that is, neurons which can be excited only by (simultaneous) stimuli on 2 or 3 excitatory synapses (and none on an inhibitory synapse).
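The thresholds in that passage are easy to make concrete. The sketch below is our own illustration, using a hypothetical `fires` helper that counts simultaneous excitatory stimuli and treats any inhibitory stimulus as a veto. With three inputs, a threshold of 3 behaves like a three-input AND, while a threshold of 2 fires whenever at least two inputs are active, which happens to be the same rule as the carry bit when three binary digits are added.

```python
from itertools import product


def fires(excitatory, inhibitory=(), threshold=1):
    """Simplified neuron: excited only by at least `threshold` simultaneous
    excitatory stimuli, and none on an inhibitory synapse."""
    return int(not any(inhibitory) and sum(excitatory) >= threshold)


for a, b, c in product([0, 1], repeat=3):
    two = fires([a, b, c], threshold=2)    # at least two of three inputs
    three = fires([a, b, c], threshold=3)  # all three inputs together
    carry = (a + b + c) // 2               # carry bit when adding three binary digits
    assert two == carry                    # threshold 2 acts as a majority vote
    print(a, b, c, two, three)
```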

And then, crucially, he makes the leap from McCulloch and Pitts’s neurons to the vacuum tubes that would provide the switching circuits for the earliest computers:

It is easily seen that these simplified neuron functions can be imitated by telegraph relays or by vacuum tubes. Although the nervous system is presumably asynchronous (for the synaptic delays), precise synaptic delays can be obtained by using synchronous setups.

He then considers how the performance of these vacuum tubes would compare with that of neurons in the central nervous system:

It is clear that a very high-speed computing device should ideally have vacuum tube elements. Vacuum tube aggregates like counters and scalers have been used and found reliable at reaction times (synaptic delays) as short as a microsecond (= $10^{-6}$ seconds), this is a performance which no other device can approximate. Indeed: Purely mechanical devices may be entirely disregarded, and practical telegraph relay reaction times are of the order of 10 milliseconds (= $10^{-2}$ seconds) or more. It is interesting to note that the synaptic time of a human neuron is of the order of a millisecond (= $10^{-3}$ seconds).
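Von Neumann's figures are orders of magnitude, so the ratios they imply are too. As a quick check of the arithmetic, the sketch below converts the three quoted reaction times to microseconds and divides them; the variable names are our own.

```python
# Order-of-magnitude reaction times from the First Draft, in microseconds
vacuum_tube_us = 1   # "as short as a microsecond"
neuron_us = 1_000    # "of the order of a millisecond"
relay_us = 10_000    # "of the order of 10 milliseconds ... or more"

print(f"vacuum tube vs neuron: about {neuron_us // vacuum_tube_us:,}x faster")
print(f"vacuum tube vs relay:  about {relay_us // vacuum_tube_us:,}x faster")
print(f"neuron vs relay:       about {relay_us // neuron_us:,}x faster")
```

On these figures a vacuum tube switches roughly a thousand times faster than a neuron and roughly ten thousand times faster than a telegraph relay.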

The paper then goes on to show how to use vacuum tubes to create the modern stored program computer, with what has become known as the ‘von Neumann’ architecture.

At the most granular level, the computer is made of “E elements”, built from vacuum tubes, which today would be described as a kind of logic gate. The simplest E element corresponds to a two-input AND gate with one input inverted.
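Taking that description at face value (the simplest E element behaves like "a AND NOT b"), a short Python sketch shows why such an element is enough to build other gates, provided a constant "always on" signal is available. That constant, and the function names, are assumptions for illustration; the sketch checks the Boolean logic only and does not model von Neumann's actual circuits or their timing.

```python
from itertools import product

ON = 1  # assumption: a constant "always on" input is available


def e_element(a, b):
    """Two-input AND with the second input inverted: a AND NOT b."""
    return a & (1 - b)


def not_gate(x):
    return e_element(ON, x)  # 1 AND NOT x


def and_gate(a, b):
    return e_element(a, not_gate(b))  # a AND NOT (NOT b)


def or_gate(a, b):
    return not_gate(e_element(not_gate(a), b))  # NOT (NOT a AND NOT b)


# Check the derived gates against Python's own bitwise operators
for a, b in product([0, 1], repeat=2):
    assert and_gate(a, b) == (a & b)
    assert or_gate(a, b) == (a | b)
print("AND and OR rebuilt from a AND NOT b")
```

Because NOT and AND together can express any Boolean function, this is one way to see how a single kind of element could, in principle, be combined into arithmetic and control circuits.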

These E elements are combined to create all the key parts of the modern computer: control, arithmetic, memory, input, output and external memory (such as punched cards or magnetic wire).
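Memory is perhaps the least obvious of these parts to build from switching elements. One way to get storage from clocked threshold units is to feed a unit's output back to its own excitatory input so that, once excited, it keeps re-exciting itself on every tick until an inhibitory pulse stops it. The sketch below assumes that scheme and a global clock, in the spirit of the "synchronous setups" von Neumann mentions; the function names are hypothetical, and it is not a transcription of the memory organs described in the First Draft.

```python
def tick(stored, set_pulse, reset_pulse):
    """One clock step of a self-exciting unit. Excitatory inputs: the set
    pulse and the unit's own previous output (threshold 1). Inhibitory
    input: the reset pulse, which blocks firing outright."""
    if reset_pulse:
        return 0
    return 1 if set_pulse + stored >= 1 else 0


def run(pulses, stored=0):
    """Apply a sequence of (set, reset) pulses and record the bit after each tick."""
    history = []
    for set_pulse, reset_pulse in pulses:
        stored = tick(stored, set_pulse, reset_pulse)
        history.append(stored)
    return history


# Set once, hold for two ticks, reset once, then idle.
print(run([(1, 0), (0, 0), (0, 0), (0, 1), (0, 0)]))  # [1, 1, 1, 0, 0]
```

The stored bit survives the idle ticks only because the unit's own output keeps re-exciting it, which is the essential trick: a loop through a delayed element turns a momentary pulse into a lasting state.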

As we’ve seen in Unveiling the Modern Computer, von Neumann’s paper was written up by Herman Goldstine, and 24 copies were distributed in June 1945 to individuals working on the EDVAC project. The approach it set out would form the starting point for the next generation of computers, including the Manchester Baby, EDVAC itself and the Princeton IAS machine, and for each generation of computers that followed.

The ‘First Draft’ was controversial. Members of the EDVAC project felt that von Neumann had taken ideas he had come across while working as a consultant at the University of Pennsylvania's Moore School of Electrical Engineering and simply recast them in the language of ‘formal logic’. The paper did not name any contributors from the Moore School. In addition, publication of the ‘First Draft’ prevented the EDVAC team from patenting the approach it set out, which in turn helped those ideas spread and develop rapidly.

Epilogue


After their 1943 paper, McCulloch and Pitts continued to work together, collaborating with colleagues in Chicago. Pitts would later move to MIT, where he would study under Norbert Wiener, mathematician, philosopher and one of the pioneers of cybernetics and robotics.

Their story doesn’t have a happy ending, though. As Amanda Gefter recounts in her article “The Man Who Tried to Redeem the World with Logic”, Walter Pitts became close friends with Wiener, only for Wiener to abruptly cut him off, triggering depression, then alcoholism and associated health problems. Pitts died in 1969, aged only 46. McCulloch passed away just four months later.

From the perspective of 2023, it’s remarkable how influential their work has become. They did not envisage either modern computers or AI, but together they did help to lay the foundations for both. Their contribution was to connect biological neurons and mathematical logic with the ability to perform computations. That contribution helped von Neumann devise the modern computer. Now, almost eighty years later, neural networks running on the latest incarnations of those computers promise to change the world again.

After the break, more on the “Logical Calculus” paper and on Warren McCulloch and Walter Pitts.

The rest of this edition is for paid subscribers. If you value The Chip Letter, then please consider becoming a paid subscriber. You’ll get additional weekly content, learn more and help keep this newsletter going!