A History of C Compilers - Part 1: Performance, Portability and Freedom

The first part of a whistle stop tour of the history of C compilers. Also GNU/Linux, dragons and lots of architectures!

The economic advantages of portability are very great. In many segments of the computer industry, the dominant cost is development and maintenance of software.

Dennis Ritchie and Stephen Johnson 1978

… many insist that C is the programming language and that it will last forever.

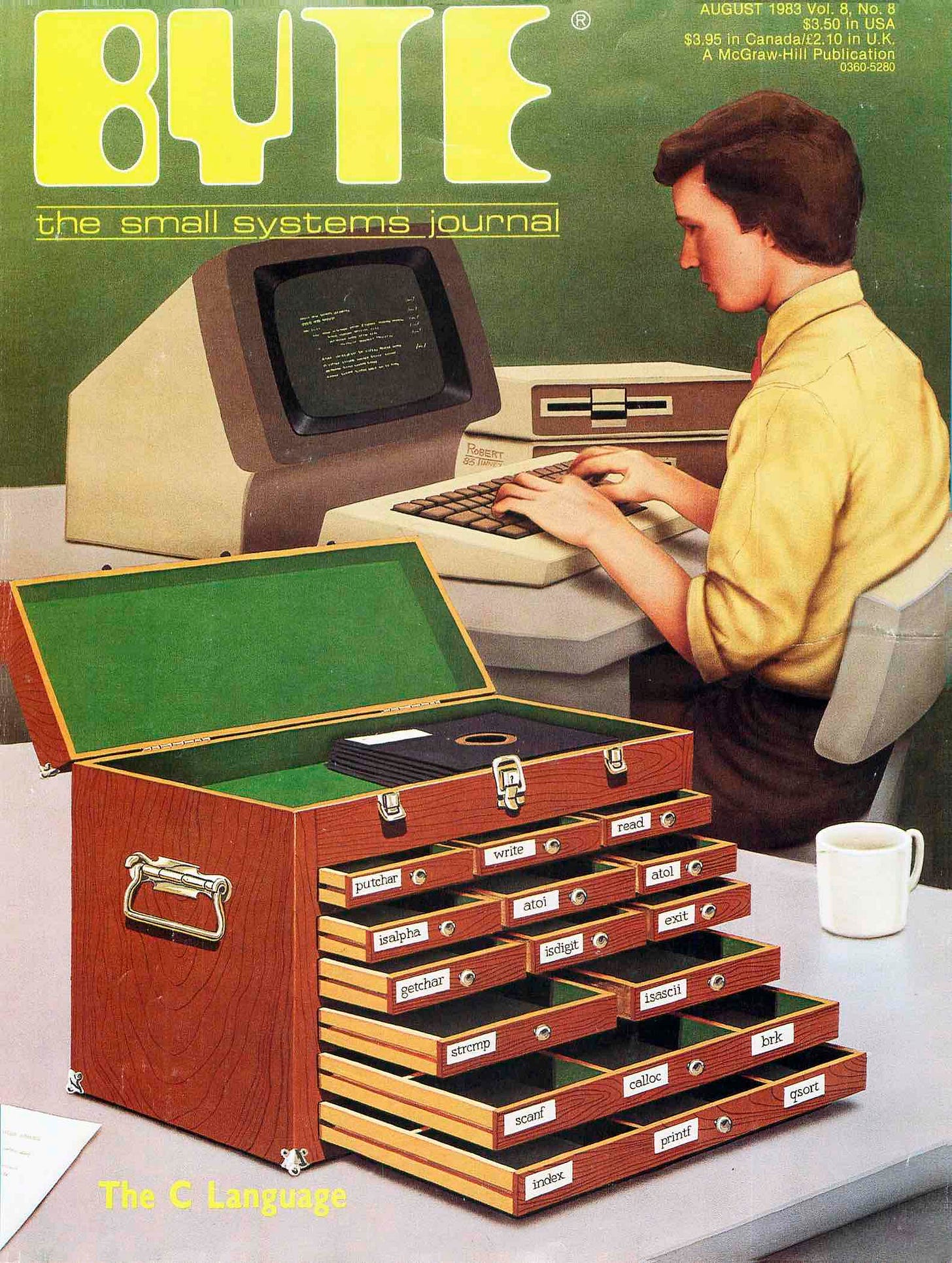

Byte Magazine 1983

The August 1983 issue of Byte Magazine devoted its cover, and a large part of its editorial content, to the C programming language. After an introduction to C, co-authored by Brian Kernighan of K&R fame, Byte reviewed as many as 20 C compilers - programs to turn C language code into ‘executable programs’ that could be run on the IBM PC or personal computers running the CP/M or CP/M-86 operating systems.

That same year, MIT’s Richard Stallman approached Andrew Tanenbaum for permission to use the 'Free University Compiler Kit' (better known as the 'Amsterdam Compiler Kit') as the C compiler for his planned GNU (‘GNU’s Not Unix’) operating system. Stallman's GNU would be 'Free as in Freedom' software. However, Tanenbaum's compiler was only 'Free as in the Free University of Amsterdam' and so deemed unsuitable by Stallman for GNU.

So Stallman set about creating his own “free software” C compiler. The first version, 0.9, was released to the world as a download from MIT in March 1987.

Forty years after that Byte issue, C remains an essential language and will continue to be so for the foreseeable future. If anything, this illustration from Wikipedia, designed to show the pervasiveness of C, understates how widely used it is. C and its object-oriented derivative, C++, are everywhere!

The compilers that Byte discussed, though, have mostly disappeared, along with the companies that made them. By contrast, Stallman's compiler, now known as GCC for the 'GNU Compiler Collection', and a more recent open-source compiler ecosystem - LLVM / Clang - are pervasive. Other compilers, including important proprietary compilers from Microsoft and Intel, are available, but none can match GCC and LLVM's broad reach - in architectures, operating systems, and languages - and utility.

As we've seen, after a period of relative stability, or perhaps stagnation, in computer architecture, we are now living through a period of much greater competition and innovation. Arm is challenging x86, and RISC-V in turn is challenging Arm. GPUs and a wide range of AI-focused Application Specific Integrated Circuits (ASICs), such as Google's TPUs, are becoming key pieces of hardware in the data center and elsewhere.

But none of this hardware innovation would be useful without software. Almost all other software for these new architectures, from operating systems to machine learning tools, depends on the availability of performant compilers for C, C++, and more specialized C-like languages, such as the one used for GPU kernels in Nvidia’s CUDA. So now is a good point to look at the history of the compiler and to try to understand what has shaped the development of these vital tools.

Note that this is a free post with additional material including further reading at the end of the post for premium subscribers.

Grace Hopper's non-compiler 'compiler', Manchester 'Autocodes', and early mainframe compilers

Grace Hopper coined the term 'compiler' but used it for a piece of software that would today be called a linker and loader.

The first compiler, in the modern sense of the term, was written at the University of Manchester in the UK by Alick Glennie for the Manchester Mark 1 computer. This was a compiler for one of the Manchester 'Autocodes' or, as we would now say, programming languages - see a sample of Glennie’s first Autocode below.

    c@VA t@IC x@½C y@RC z@NC
    INTEGERS +5 →c           # Put 5 into c
    →t                       # Load argument from lower accumulator
                             # to variable t
    +t TESTA Z               # Put |t| into lower accumulator
    -t
    ENTRY Z
    SUBROUTINE 6 →z          # Run square root subroutine on
                             # lower accumulator value
                             # and put the result into z
    +tt →y →x                # Calculate t^3 and put it into x
    +tx →y →x
    +z+cx CLOSE WRITE 1      # Put z + (c * x) into
                             # lower accumulator
                             # and return

FORTRAN is the first compiled 'high-level' language that is still in widespread use today. The language was developed under the supervision of John Backus who delivered the first FORTRAN compiler, for the IBM 704 mainframe, in 1957 after a three-year development project.

As an aside, some indication of the challenges around compiler construction in this era can be gained from the approach taken by the FORTRAN compiler for the IBM 1401 (announced in 1959) which needed 63 passes to compile a program.

FORTRAN was provided for the IBM 1401 by an innovative 63-pass compiler that ran in only 8k of core. It kept the program in memory and loaded overlays that gradually transformed it, in place, into executable form, ... The executable form was not machine language; rather it was interpreted …

In this era, most new machines came with new architectures. Mainframes were hugely expensive - even large firms would sometimes rent rather than buy their machine. Compilers would usually be made available for free or bundled in with hardware sales. In these circumstances why would anyone try to create a FORTRAN or COBOL compiler that would compete with, say, IBM's?

Minicomputers, Unix, and C

The wider availability of lower-cost minicomputers from companies such as DEC in the later 1960s and early 1970s created new opportunities for the development of operating systems, computer languages, and their compilers by third parties. The DEC PDP-11 alone had several dozen operating systems written for it.

The most famous and consequential example of third-party software for the PDP-11 was the development of the Unix operating system and the C programming language at Bell Labs. Unix was originally written in PDP-11 assembly language, but seeking greater portability, in 1972 Dennis Ritchie developed the C language (a development of B which in turn was a development of BCPL) and a compiler for his new language and then rewrote Unix in C.

The Portable C Compiler

Ritchie's C compiler was written for the DEC PDP-11 but soon made its way onto other machines. According to Ritchie and Stephen Johnson:

C was developed for the PDP-11 on the UNIX system in 1972. Portability was not an explicit goal in its design, even though limitations in the underlying machine model assumed by the predecessors of C made us well aware that not all machines were the same. Less than a year later, C was also running on the Honeywell 6000 system at Murray Hill. Shortly thereafter, it was made available on the IBM 370 series machines as well. The compiler for the Honeywell was a new product but the IBM compiler was adapted from the PDP-11 version, as were compilers for several other machines.

Although the original PDP-11 compiler was adapted for other machines it was not ideally suited for porting. A few years later, the 'Portable C Compiler' (or 'pcc') was written by Johnson, also at Bell Labs, which would provide a C compiler for Unix that made this adaptation much easier. It was quickly ported to a range of other machines. Quoting Johnson:

To summarize, however, if you need a C compiler written for a machine with a reasonable architecture, the compiler is already three-quarters finished!

A large fraction of the machine-dependent code can be converted in a straightforward, almost mechanical way.

Johnson's pcc was used as the C Compiler in Seventh Edition Unix (Version 7 Unix) in 1979. Before long Johnson and Ritchie could report that pcc:

is now in use on the IBM System/370 under both OS and TSS, the Honeywell 6000, the Interdata 8/32, the SEL86, the Data General Nova and Eclipse, the DEC VAX-11/780, and a Bell System processor.

The portability of pcc was key to enabling Unix itself to be ported to new machines. The first machine for which this task was undertaken was another minicomputer, the Interdata 8/32, which, with its sibling the 7/32, was notable as one of the first 32-bit minicomputers priced under $10,000. Porting of Unix with pcc to the Interdata machine went relatively smoothly:

In about six months, we have been able to move the UNIX operating system and much of its software from its original host, the PDP11, to another, rather different machine, the Interdata 8/32. The standard of portability achieved is fairly high for such an ambitious project: the operating system (outside of device drivers and assembly language primitives) is about 95 percent unchanged between the two systems; inherently machine-dependent software such as the compiler, assembler, loader, and debugger are 75 to 80 percent unchanged; other user-level software (amounting to about 20,000 lines so far) is identical, with few exceptions, on the two machines.

Later, the portability of pcc enabled Version 7 Unix and its derivatives to be ported to a wide range of the new 16-bit microprocessor architectures, including the Motorola 68000 (e.g. the first Sun Workstations), the Intel 8086 (including Xenix), the Zilog Z8000, and more.

A repository of the code of pcc is available today for inspection on GitHub (see further reading at the end of this post) and can produce code for sixteen architectures ranging from the PDP-11 and Data General Nova from the 1960s and early 1970s, all the way to modern 64-bit x86 (AMD64).

Two Books - K&R and the Dragon

The first Unix, Ritchie's C compiler, and pcc were initially proprietary software, with the rights owned by Bell Labs. The story of Unix, and the tortuous disputes around it, is for another time. It's worth noting though that the C language itself was not subject to copyright or other intellectual property restrictions. It was soon well known, in large part through Kernighan and Ritchie's famous 1978 book, 'The C Programming Language'.

The year before that Alfred Aho and Jeffrey Ullman (both of whom also worked at Bell Labs for a time) published the book Principles of Compiler Design. The book would become known as the ‘Green Dragon Book’ as it featured a cartoon of a medieval knight on a horse battling a green dragon labeled ‘Complexity of Compiler Design’. According to the introduction to the book, its purpose was:

to acquaint the reader with the basic constructs of modern programming languages and to show how they can be efficiently implemented in the machine language of a typical computer.

In addition to teaching the theory behind compiler design, the book also looks briefly at some then-current ‘real world’ compilers, noting that by 1977:

C compilers exist for a number of machines, including [Dennis Ritchie’s for] the PDP-11, [and Stephen Johnson’s for] the Honeywell 6070, and the IBM-370 series.

The ‘green dragon book’ and its successor, Compilers: Principles, Techniques, and Tools (also known as the ‘red dragon book’), published in 1986, would become standard textbooks for anyone wanting to learn how to build compilers. They would make the theory and techniques of creating a compiler available to all.

8-Bit Compilers

Meanwhile, when Intel launched their first 8-bit microprocessor, the 8008, in 1972 they commissioned Gary Kildall to write a compiler for the architecture. Kildall wrote a compiler for PL/M, a language that he designed specially for the purpose.

Famously, Kildall would go on to create CP/M, the most popular operating system for 8-bit Intel and Zilog processors, and its 16-bit successor CP/M-86. Less well known is that he also did novel research on compiler design; see more in the further reading at the end of this post.

There were soon efforts to port the C language to 8-bit architectures, most notably in the form of Ron Cain’s Small-C for the Intel 8080. Cain has written that:

… I ran off a listing of the Small-C compiler, strapped it to the back of my motorcycle (which must have been a BMW if memory serves) and ran it over to the local office of Dr. Dobbs. This was an unsolicited and unexpected visit, so you can appreciate the look of puzzlement when I said "Here's a compiler for a subset of the C language. Sorry all I have is hard copy. Want to run it?"

"Sure," they said, "just give us a day or so to look at the code to make sure it does what you say it does. Er, a compiler you say? C to 8080 ASM code? You wrote this? Sure you don't have it on mag tape or something?"

A listing of Cain’s Small-C duly appeared in Dr Dobb’s Journal and soon spawned several derivative compilers for 8-bit machines.

These 8-bit C compilers remained primitive though. Most successful commercial 8-bit software, limited by the quality of the compilers and needing to make the most of the limited resources offered by 8-bit machines, continued to be written in assembly language.

Early PC Compilers

Commercial programs continued to be written in assembly language in the early 16-bit microprocessor and the (IBM) PC era. The first (1983) version of Lotus 1-2-3, the spreadsheet that was one of the most important early PC software packages, was written in 8086 assembly language, before being rewritten in C a few years later.

But with the increase in RAM that the new 16-bit systems like the IBM PC offered, with a maximum of 1 Megabyte compared to 64 KBytes for 8-bit systems, software was growing in complexity, and using assembly language was becoming more problematic. And with a range of competing platforms and architectures, portability became more important. As the software market grew, so did the demand for software development tools, including compilers.

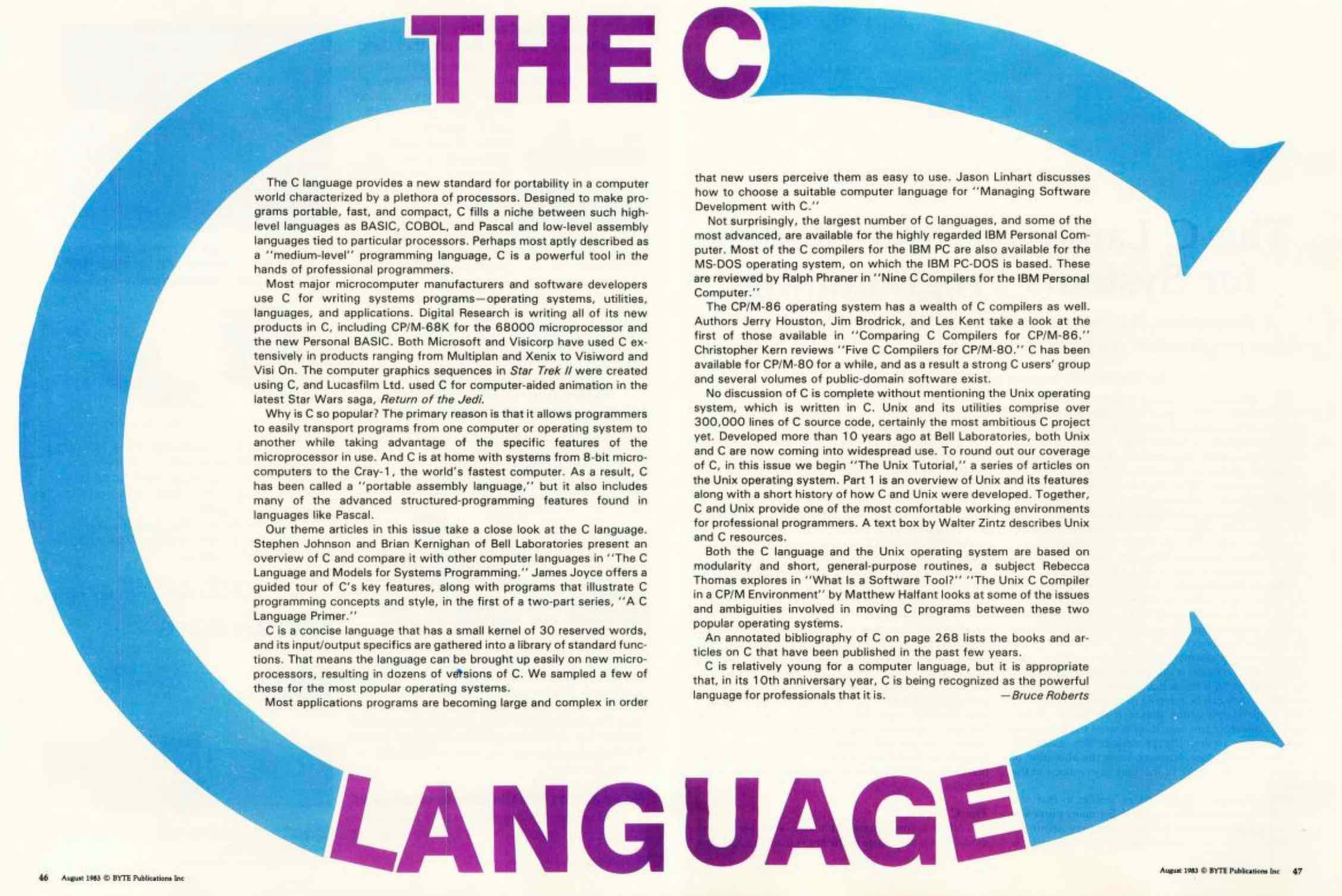

This takes us to the 1983 Byte 'C Programming Language' edition. Commentary on C in the 'Features' section of the magazine starts with a clear articulation of one of the key attractions of the language.

The C language provides a new standard for portability in a computer world characterized by a plethora of processors.

Later on in the magazine, an article titled ‘The C Language and Models for Systems Programming’ by none other than Stephen Johnson and Brian Kernighan states that:

Using functions to extend the base language explains why a language that is so low level can be so portable. C compilers have been built for more than 40 different machines, from the Z80 to the Cray-1.

Byte’s introductory feature also discusses C's growing popularity in the world of microcomputer software:

Most major microcomputer manufacturers and software developers use C for writing systems programs - operating systems, languages, and applications. [Gary Kildall’s] Digital Research is writing all of its new products in C, including CP/M-68K for the 68000 microprocessor and the new Personal BASIC. Both Microsoft and Visicorp have used C extensively in products ranging from Multiplan and Xenix to Visiword and VisiOn.

And it again emphasizes the importance of C's portability:

Why is C so popular? The primary reason is that it allows programmers to easily transport programs from one computer or operating system to another while taking advantage of the specific features of the microprocessor in use. And C is at home with systems from 8-bit micro-computers to the Cray-1, the world's fastest computer. As a result, C has been called a 'portable assembly language'...

Byte highlighted that growing interest in Unix was a key part of the reason for C's increasing popularity.

No discussion of C is complete without mentioning the Unix operating system, which is written in C. Unix and its utilities comprise over 300,000 lines of C source code, certainly the most ambitious C project yet. Developed more than 10 years ago at Bell Laboratories, both Unix and C are now coming into widespread use.

Kernighan and Johnson write about how radical the use of a high-level language for performance-critical applications had been when Ritchie had created C, but how well C programs had, in fact, performed when compared to assembly language:

At the time, using a high-level language for such applications was a radical departure from standard practice; everyone knew that these programs had to be written in assembly language "for efficiency." Yet in many cases the C code, although clearly less efficient in any given routine, produced programs that outperformed similar programs written in assembly language.

Kernighan and Johnson end their article noting some of C’s drawbacks but finish on an optimistic note:

One or two such goofs while learning the language can lead a beginner to burn the manual. Despite its problems, however, C continues to be used and developed, a good sign that there is a place for a portable low-level language with powerful model-building facilities.

This strong interest in C had already led to the development of a large number of C compilers and Byte tested twenty such compilers, for 8080, Z80, and 8086-based machines. The compilers tested were:

8080/Z80 running Digital Research’s CP/M-80: Aztec, BDS, C/80, Telecon, Whitesmiths.

8086/88 running Digital Research’s CP/M-86: Lattice, Mark DeSmet, Digital Research, Mark Williams, Computer Innovations, Supersoft.

8086/88 running Microsoft’s MS-DOS: c-systems, Caprock, Computer Innovations, DeSmet, Intellect, Lattice, Quantum, Supersoft, Telecon.

All the compilers tested by Byte were proprietary products, ranging in price from $35 to $750.

Let's briefly look at one of the featured C compilers for CP/M-86, Mark Williams C, which was priced starting at $500.

The Mark Williams Company, based in Chicago, Illinois, tried to capitalize on the increasing interest in Unix and C, releasing Coherent, a Unix-like operating system for the IBM PC, and several C compilers including Mark Williams CC86 for CP/M-86 and a low-cost C compiler, 'Let's C', for the IBM PC. The Mark Williams CC86 C Compiler for CP/M-86 was a high-quality product and Byte commented:

CC86 has the most professional feel of any package we tested. It makes a good attempt at full Kernighan and Ritchie and Unix version 7 compatibility.

Notably, and perhaps surprisingly, absent from the list of companies offering C compilers was Microsoft. MS-DOS 4.0, which was released as late as 1988, still included large amounts of 8086 assembly.

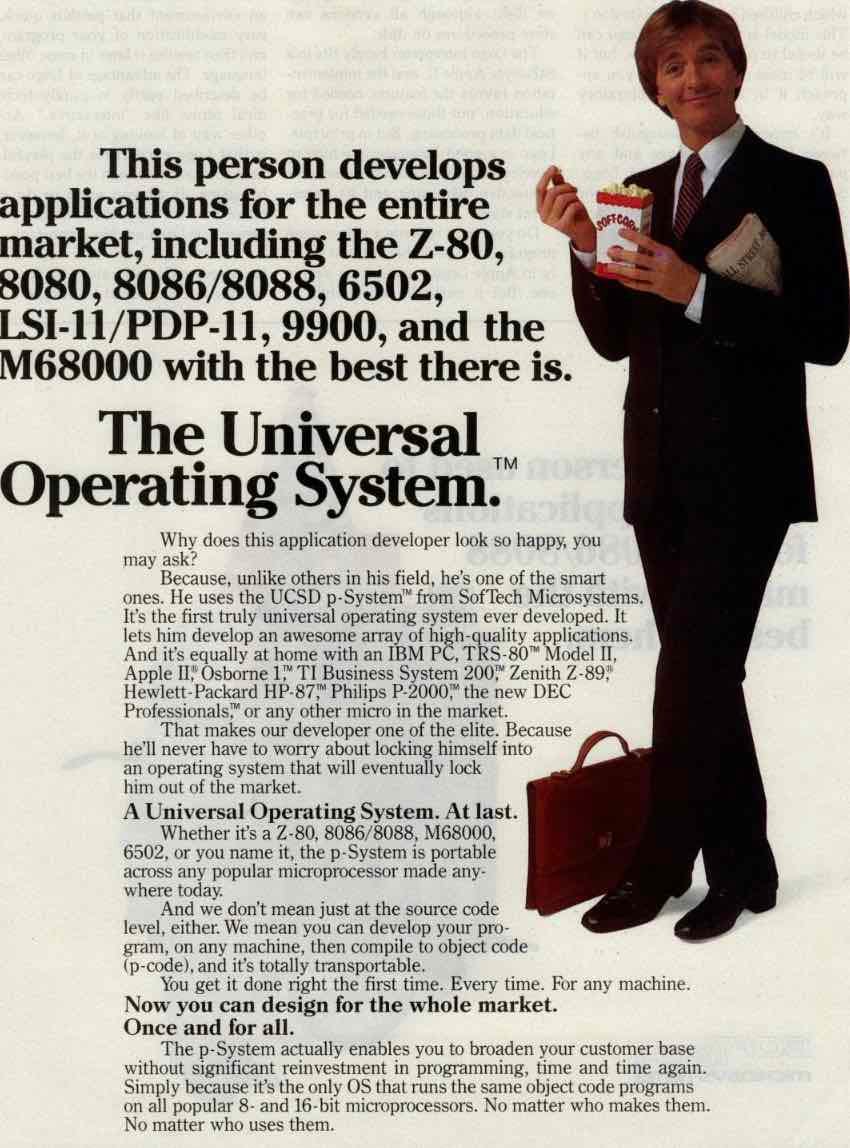

Another approach: UCSD Pascal

Now you can design for the whole market. Once and for all.

Softech UCSD Pascal Advertisement

At this point, it's worth mentioning another then-popular language that promised a strong level of portability but used a very different approach, Niklaus Wirth’s Pascal. UCSD Pascal implemented a virtual machine (the p-machine) that enabled it to run on a wide variety of architectures. One advertisement from the time captured the appeal very effectively.

In practice, UCSD Pascal wasn't successful as an alternative to a native code C compiler and soon faded. Unlike compiled C, it carried a significant performance penalty when compared to assembly language. A successful bytecode ecosystem would have to wait until the Java programming language and the Java Virtual Machine were released in 1995.
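To make the virtual machine idea concrete, here is a minimal sketch in C of a stack-based bytecode interpreter. It only illustrates the general technique: the opcodes (OP_PUSH, OP_ADD, OP_MUL, OP_HALT) and the function run_bytecode are invented for this example and are not the real UCSD p-machine instruction set. The same bytecode array runs unchanged wherever this small interpreter can be compiled, which is the portability argument, while the dispatch loop executed for every operation hints at where the performance penalty comes from.

```c
#include <stddef.h>

/* Hypothetical opcodes for illustration; NOT the real UCSD p-code set. */
enum { OP_PUSH, OP_ADD, OP_MUL, OP_HALT };

#define STACK_SIZE 32

/* Interpret a bytecode program and return the value on top of the stack
   when OP_HALT is reached. Returns 0 if the program is malformed (stack
   underflow or overflow, unknown opcode, or no OP_HALT before the end). */
int run_bytecode(const int *code, size_t len)
{
    int stack[STACK_SIZE];
    size_t sp = 0;   /* number of values currently on the stack */
    size_t pc = 0;   /* index of the next instruction to execute */

    while (pc < len) {
        switch (code[pc++]) {
        case OP_PUSH:            /* push the literal that follows the opcode */
            if (pc >= len || sp >= STACK_SIZE)
                return 0;
            stack[sp++] = code[pc++];
            break;
        case OP_ADD:             /* replace the top two values with their sum */
            if (sp < 2)
                return 0;
            sp--;
            stack[sp - 1] += stack[sp];
            break;
        case OP_MUL:             /* replace the top two values with their product */
            if (sp < 2)
                return 0;
            sp--;
            stack[sp - 1] *= stack[sp];
            break;
        case OP_HALT:            /* stop and report the top of the stack */
            return sp > 0 ? stack[sp - 1] : 0;
        default:                 /* unknown opcode */
            return 0;
        }
    }
    return 0;
}
```

For example, the program { OP_PUSH, 2, OP_PUSH, 3, OP_ADD, OP_PUSH, 4, OP_MUL, OP_HALT } computes (2 + 3) * 4, so run_bytecode returns 20 for it.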

Stallman and GCC

Richard Stallman's recursively named 'GNU' operating system project was 'Not Unix' but was closely modeled on Unix.

In the 'GNU Manifesto' written in 1985 Stallman stated:

GNU, which stands for Gnu's Not Unix, is the name for the complete Unix-compatible software system which I am writing so that I can give it away free to everyone who can use it.

Unix used C and so GNU would need a C compiler. Stallman, disappointed by the 'unfree' nature of Andrew Tanenbaum's compiler, set out to create his own. The first version of the compiler - originally called the GNU C Compiler - was aimed at the more powerful 16/32-bit microprocessors then becoming increasingly popular. Stallman announced the first release of GCC in March 1987.

The GNU C compiler is now available for ftp from the file /u2/emacs/gcc.tar on prep.ai.mit.edu. This includes machine descriptions for vax and sun, 60 pages of documentation on writing machine descriptions (internals.texinfo, internals.dvi and Info file internals). This also contains the ANSI standard (Nov 86) C preprocessor and 30 pages of reference manual for it. This compiler compiles itself correctly on the 68020 and did so recently on the vax

The compiler included the, by now aging, DEC VAX minicomputer as a target as Stallman had been offered a VAX 11/750 at MIT as a free development machine.

The first published version of GCC (0.9) is still available for download.

Crucially, and aligned with Stallman’s objectives for the GNU project, GCC was released with one of Stallman’s ‘free software’ licenses. Users could freely take and modify the code for their own use, but if they distributed those changes they had to share them with others.

Portability and the RISC Cambrian Explosion

The portability of GNU and GCC wasn't an explicit objective of Stallman's manifesto, but it was surely a necessary consequence. What was the point of freedom if it was limited to the users of a few architectures?

However, envisioning difficulties with less powerful machines, Stallman left them to others:

The extra effort to make it run on smaller machines will be left to someone who wants to use it on them.

The timing of the release of GCC was fortuitous. As we saw in The RISC Wars: The Cambrian Explosion, the mid-1980s saw the appearance of a large number of new RISC architectures. The firms creating these new designs sometimes had their own C compilers but, with the appearance of GCC, there was a new option: just write a new ‘back-end’ to enable GCC to target the new architecture.
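The front-end / back-end split can be sketched in a few lines of C. This is a toy under stated assumptions, not how GCC is actually structured (GCC uses far richer machine descriptions): the type names, the two targets 'stackish' and 'regish', and their mnemonics are all invented for illustration. The point is only that the target-independent operations stay fixed, and supporting a new machine means adding one more entry to the table.

```c
#include <stdio.h>
#include <string.h>

/* A toy, target-independent intermediate operation. */
typedef enum { IR_LOAD_CONST, IR_ADD } ir_op;

/* A back end here is just a name plus one text template per operation.
   All mnemonics below are invented; they belong to no real machine. */
typedef struct {
    const char *name;
    const char *load_const;   /* text emitted before the constant */
    const char *add;          /* text emitted for an addition */
} backend;

static const backend backends[] = {
    { "stackish", "push ",   "add" },
    { "regish",   "li r1, ", "add r0, r0, r1" },
};

/* Look up a back end by name; returns NULL if the target is unknown. */
const backend *find_backend(const char *name)
{
    size_t i;
    for (i = 0; i < sizeof backends / sizeof backends[0]; i++) {
        if (strcmp(backends[i].name, name) == 0)
            return &backends[i];
    }
    return NULL;
}

/* Write the target-specific text for one operation into buf.
   Returns the number of characters needed, or -1 for an unknown op. */
int emit(const backend *be, ir_op op, int operand, char *buf, size_t size)
{
    switch (op) {
    case IR_LOAD_CONST:
        return snprintf(buf, size, "%s%d\n", be->load_const, operand);
    case IR_ADD:
        return snprintf(buf, size, "%s\n", be->add);
    }
    return -1;
}
```

Calling emit with IR_LOAD_CONST and the operand 5 writes "push 5" for the 'stackish' target and "li r1, 5" for the 'regish' one; the code that decides what to emit never changes.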


Before long GCC was being ported to a range of other operating systems and architectures. To give just one example, the Sun SPARC port appeared as early as 1988. One can sense the breadth of interest in GCC over the 1990s from a 2001 list of contributors and their work. It includes some of the machines that GCC had been ported to by that stage (most of which have now disappeared!):

Vax, Sony NEWS Machine, NatSemi 32000, Motorola 88000, Convex, MIPS, Tahoe, Pyramid, Charles River Data Systems, Elxsi, Clipper, PowerPC, Hitachi SH, Fujitsu G/Micro Tron, AMD 29000, DEC Alpha, IBM RT, IBM RS/6000

Along with architectures, GCC was also adding languages, including Bjarne Stroustrup’s C++, which had appeared as an extension to the C programming language as recently as 1985. According to Michael Tiemann:

I wrote GNU C++ in the fall of 1987, making it the first native-code C++ compiler in the world.

The 80386, GCC and GNU / Linux

The first version of GCC (1.27) supporting the Intel 80386 appeared in 1988, with most of the work on that port being done by Bill Schelter, a maths professor at the University of Texas, Austin.

The availability of 80386 support would later be helpful for Linus Torvalds. Torvalds has said that he preferred the architecture of the Motorola 68000 series, but he could see where the desktop computer market was heading. In his autobiographical book 'Just for Fun' he wrote:

I faced a geek's dilemma. Like any good computer purist raised on a 68008 chip, I despised PCs. But when the 386 chip came out in 1986, PCs started to look, well, attractive. They were able to do everything the 68020 did, and by 1990, mass-market production and the introduction of inexpensive clones would make them a great deal cheaper. I was very money-conscious because I didn't have any. So it was, like, this is the machine I want to get. And because PCs were flourishing, upgrades and add-ons would be easy to obtain. Especially when it came to hardware, I wanted to have something that was standard.

I decided to jump over and cross the divide. I would be getting a new CPU.

When he started his master's degree course in Computer Science in Helsinki, C and Unix were two of the main attractions:

"... I was most looking forward to was in the C programming language and the Unix operating system"

Torvalds soon started developing his kernel for a Unix-like operating system. As with Stallman, there was interest in, but eventual rejection of, Andrew Tanenbaum's work, in this case, the MINIX operating system:

Early Linux kernel development was done on a MINIX host system, which led to Linux inheriting various features from MINIX, such as the MINIX file system. Eric Raymond claimed that Linus hasn't actually written Linux from scratch, but rather reused source code of MINIX itself to have working codebase. As the development progressed, MINIX code was gradually phased out completely.

Torvalds needed a C compiler and an 80386 version of GCC was readily available. When he first announced his project to the world in 1991, via a Usenet posting, GCC even got a mention:

I've currently ported bash(1.08) and gcc(1.40), and things seem to work. This implies that I'll get something practical within a few months, and I'd like to know what features most people would want. Any suggestions are welcome, but I won't promise I'll implement them :-)

Torvalds had written that:

"It is NOT protable (uses 386 task switching etc)"

but, despite this, the first responses to Torvalds's announcement were very interested in the portability of the system to other architectures.

"How much of it is in C? What difficulties will there be in porting? Nobody will believe you about non-portability ;-), and I for one would like to port it to my Amiga (Mach needs a MMU and Minix is not free)."

The combination of Torvalds's kernel and Richard Stallman's GNU software tools, including GCC, would soon become widely known as Linux. GCC has been an essential part of Linux ever since.

Microsoft Visual C

By the early 1990s the availability of Linux as a free Unix-like operating system for the x86 and other architectures, along with GCC as a high-quality C compiler, meant that, with a few exceptions, commercial competitors struggled to compete. Most of the compilers tested by Byte in 1983 quickly disappeared completely. The Mark Williams Company, for example, closed its operations in 1995.

One that has survived is Lattice C, one of the most performant of the compilers tested by Byte. Lattice C was so good that it was licensed by Microsoft and sold as Microsoft C V1.0 before being replaced by Microsoft’s own in-house Microsoft C V2.0 compiler.

Other compilers for the MS-DOS and then Windows operating systems, notably Borland’s Turbo C and Borland C++, Zortech C++ (later Symantec and then Digital Mars), and Watcom C/C++ did well for an extended period before the combination of GCC and Microsoft’s own C/C++ compilers ended their commercial viability.

Borland’s C and C++ (and other Borland products), Zortech and its successors, and Watcom C/C++ really deserve an article all to themselves, but although they were popular and important at the time, their impact on today’s compiler ecosystems is smaller than that of the other compilers we discuss.

Today, Microsoft still offers the descendants of its first in-house compiler, in the form of Microsoft Visual C++. Just as Windows has become and remains the most popular operating system on the desktop, Microsoft’s compiler has remained dominant on Windows.

GCC and EGCS

The open-source nature of GCC soon led to several experimental forks with changes that were not accepted back into the main GCC project. This led to the creation of the Experimental/Enhanced GNU Compiler System (EGCS) in 1997, which merged a number of these forks. According to Wikipedia:

EGCS development proved considerably more vigorous, so much so that the FSF officially halted development on their GCC 2.x compiler, blessed EGCS as the official version of GCC, and appointed the EGCS project as the GCC maintainers in April 1999. With the release of GCC 2.95 in July 1999 the two projects were once again united.

GCC Today

Today GCC has been ported to a wide range of architectures and operating systems, almost certainly more than any other compiler. It also compiles a range of programming languages, including C++, Fortran, Ada, and more.

On architectural portability, according to the GCC website:

GCC itself aims to be portable to any machine where int is at least a 32-bit type. It aims to target machines with a flat (non-segmented) byte addressed data address space (the code address space can be separate). Target ABIs may have 8, 16, 32 or 64-bit int type. char can be wider than 8 bits.
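The properties named in that quote can be inspected from C itself using the standard limits.h header. The following is a small sketch; the function names are made up for this example, and the width calculation assumes int has no padding bits, which holds on mainstream targets but is not guaranteed by the C standard. The results describe whichever target the code is compiled for.

```c
#include <limits.h>
#include <stddef.h>

/* Number of bits in a char on this target. The C standard guarantees
   at least 8, but some targets (certain DSPs, for example) use more. */
int bits_per_char(void)
{
    return CHAR_BIT;
}

/* Storage width of int in bits (assumes int has no padding bits). */
size_t int_width_bits(void)
{
    return sizeof(int) * CHAR_BIT;
}

/* Nonzero if int can hold every 32-bit signed value, i.e. the
   "int is at least a 32-bit type" condition from the quote. */
int int_is_at_least_32_bits(void)
{
    return INT_MAX >= 2147483647L;
}
```

On a typical desktop or server target these report 8 bits per char and a 32-bit int, but code that hard-codes those values rather than asking limits.h is exactly the kind that breaks when ported to a less conventional machine.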

Here is a list of the architectures that GCC supported in 2023:

aarch64, alpha, arc, arm, avr, bfin, c6x, cr16, cris, csky, epiphany, fr30, frv, gcn, h8300, i386, ia64, iq2000, lm32, m32c, m32r, m68k, mcore, mep, microblaze, mips, mmix, mn10300, moxie, msp430, nds32, nios2, nvptx, pa, pdp11, pru, riscv, rl78, rs6000, rx, s390, sh, sparc, stormy16, tilegx, tilepro, v850, vax, visium, xtensa.

Note that both of the originally supported architectures - m68k and vax - are still supported today! Largely absent, though, are the 8-bit microprocessors and microcontrollers that Richard Stallman left to others to implement back in 1987; the avr port in the list above is a rare exception.

Along with this growth in architectural and language support GCC itself has grown hugely. By 2019 GCC had grown in size to over 15 million lines of code.

Today though, GCC isn’t the only pervasive open-source C compiler. The LLVM ecosystem has gained increasing popularity and is vital to the success of many of the new architectures and hardware that we’ve discussed in recent posts. We'll discuss LLVM, and its relationship with GCC, in more detail in the next post in this series.

Before we leave though, a reminder of how important compilers are to the success of an architecture. Intel's Itanium architecture, on which the company spent billions of dollars, is generally thought to have failed, in large part, because the company failed to get a compiler for the new architecture to work well enough. The recent announcement of the removal of support for it in the next GCC (version 15) provides final confirmation of the fact that the architecture is obsolete:

Support for the ia64*-*- target ports which have been unmaintained for quite a while has been declared obsolete in GCC 14. The next release of GCC will have their sources permanently removed.

The success or failure of many of the 'Cambrian Explosion' of machine learning ASICs will depend on the success or failure of their software ecosystems, and the availability of a high-quality compiler for C and C-like languages is essential to all those ecosystems.

The rest of this post is for premium subscribers and includes links to:

Brian Kernighan on the C programming language.

Dennis Ritchie and Stephen Johnson on the portability of C and Unix.

The earliest known version of Dennis Ritchie’s first C compiler.

The C Language and Models for Systems Programming Byte article by Stephen Johnson and Brian Kernighan.

Gary Kildall’s compiler research.

Dr Dobb’s Journal Articles and Resources on C.

More on Small C.

More on the Portable C compiler.

Running the first GCC with 80386 support.

C++ on the Commodore 64.
