Google's First Tensor Processing Unit: Origins

Why and how did Google build the first AI accelerator deployed at scale?

Note: This is a two-part post. This part provides context and covers the story of the development of the first Google TPU. Part 2 looks at the architecture and the performance of the TPU in more detail.

If you’re interested in neural network accelerators you might enjoy this week’s short Chiplet on Intel’s first AI chip, the 80170. More on how to sign up to receive Chiplets here.

And now I see with eye serene

The very pulse of the machine

A Being breathing thoughtful breath

She Was a Phantom of Delight, William Wordsworth

One of the themes of this Substack is that ideas from the history of computing that have been discarded or have gone out of fashion can still be interesting and, on occasion, extremely useful. It’s unlikely that there will be a more consequential example of this in any of our lifetimes than the use of neural networks, which have gone through several periods of neglect (“AI winters”) over almost seven(!) decades, alternating with periods of, sometimes extreme, hype.

If interest in neural networks has gone through multiple periods of boom and bust, then the same is true of hardware dedicated to accelerating their operation. We briefly mentioned one early example, Intel’s 80170 analog neural network chip, in our recent Chiplet post. There are many others too and we’ll have a look at some of them in future posts.

This hardware has consistently had a ‘chicken and egg’ problem, though. CPUs are not an efficient way of implementing a neural network, and neural networks needed more powerful hardware to show what they could do. But how could firms justify ongoing investment in specialized hardware without proof that neural networks were useful? Time and again, projects to build dedicated hardware were abandoned as the hype cycle turned. In the end, it took hardware originally built for another purpose, GPUs, to break the impasse.

After that, it was inevitable that we would soon see dedicated hardware. We are certainly in a ‘hype’ period now, and that hype has led many firms, from startups to hyperscalers, to build their own accelerators. Most of those designs are likely to fail, but dedicated neural network accelerators will certainly form a significant part of the computing landscape in the future. And Google’s Tensor Processing Units, or TPUs, the first accelerators to be deployed at scale, are sure to be among the survivors.

Why are Google’s TPUs interesting and important? They aren’t designs that can be purchased from Google, or anyone else. You can ‘rent’ them on Google’s cloud but the ongoing use of TPUs is dependent on Google’s continuing commitment to making them available. They seem like a shaky base to build on.

But this is Google, and just the fact that Google is using TPUs for its own services makes them important. It’s sometimes easy to forget the scale of many of Google’s services. The company has nine services (Search, Android, Chrome, Gmail, Maps, Play Store, YouTube, Photos, and Drive) with over a billion users. Plus Google has the third largest ‘public cloud’.

And in an era when so much of the conversation is dominated by Nvidia’s pre-eminence in data center machine learning hardware, TPUs provide an example of a proven alternative to Nvidia GPUs that has been deployed at scale. The central idea behind Google’s TPU project, creating data center hardware dedicated to accelerating machine learning tasks, has also prompted lots of other firms to build their own hardware, from startups like Cerebras and Tenstorrent to hyperscalers like AWS and Microsoft. One of those startups, Groq, recently covered in an excellent post by Dylan Patel, is led by a member of the original TPU team, Jonathan Ross.

The history of the development of the first TPU is interesting too. It shows how even a company of Google’s size can be nimble and move rapidly when it feels the need to, and how a company with the necessary resources can develop innovative hardware quickly. It also shows how ideas from decades ago can resurface to become central to important modern designs.

We should start, though, by clarifying what we are talking about when we talk about a ‘Tensor Processing Unit’.

Tensor Hardware and Software - Disambiguation and Explanation

In recent years, the word ‘tensor’ has started to appear in the naming of several pieces of computer hardware and software. Here is a short, non-exhaustive, list:

TensorFlow: Google’s machine learning framework;

Google Tensor Processing Unit (TPU): Google’s custom data center accelerator;

Tensor Core: An execution unit found in Nvidia’s more recent GPUs;

Google Tensor: An Arm-based System-on-Chip found in the most recent Pixel smartphones.

This post will focus on the second of these, Google’s custom data center accelerator, covering both the history and the architecture of the first version of the Google TPU. I’ll call this TPU v1, where necessary, to distinguish it from its successors, which we’ll look at in later posts.

The sudden appearance of all these tensors is, of course, due to the rapid increase in the importance of machine learning and, in particular, of deep learning. Tensors are central to the formulation and implementation of deep learning.

But what is a tensor? Readers who are already familiar with tensors can safely skip this section.

Wikipedia starts with, for me, a less than ideally helpful answer!

In mathematics, a tensor is an algebraic object that describes a multilinear relationship between sets of algebraic objects related to a vector space. Tensors may map between different objects such as vectors, scalars, and even other tensors.

Please don’t close the page at this point! In practice, we can make this a little simpler and rely almost entirely on one of the ways tensors are represented.

A tensor may be represented as a (potentially multidimensional) array.

So, depending on the nature of the tensor, it can be represented as an array of n dimensions, where n is 0, 1, 2, 3 and so on. Some of these representations have more familiar names:

Dimension 0 - scalar

Dimension 1 - vector

Dimension 2 - matrix
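To make the array view concrete, here is a minimal Python sketch that assumes tensors are stored as plain nested lists. The helpers `shape` and `rank` are hypothetical names used only for this illustration. Libraries such as NumPy provide equivalents (the `shape` and `ndim` attributes of an array), but nothing here depends on them.

```python
def shape(t):
    """Return the size of each dimension of a tensor stored as nested lists.

    A bare number has no dimensions, so its shape is the empty tuple.
    Assumes the lists are regular (all sub-lists at a level have the same
    length) and non-empty.
    """
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]  # descend one level into the first element
    return tuple(dims)


def rank(t):
    """Return the number of dimensions (0 for a scalar, 1 for a vector, ...)."""
    return len(shape(t))


scalar = 7.0                                              # dimension 0
vector = [1.0, 2.0, 3.0]                                  # dimension 1
matrix = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]                                # dimension 2
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # dimension 3

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("3-d array", cube)]:
    print(name, rank(t), shape(t))
# scalar 0 ()
# vector 1 (3,)
# matrix 2 (2, 3)
# 3-d array 3 (2, 3, 4)
```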

Why is a Tensor Processing Unit so called? Because it is designed to speed up operations involving tensors. But precisely which operations? The ones referred to in our original Wikipedia definition, which described a tensor as expressing “a multilinear relationship” and as able to “map between different objects such as vectors, scalars, and even other tensors”.

Let’s take a simple example. A multilinear relationship between two one-dimensional arrays can be described using a two-dimensional array. The mathematically inclined will recognize the process of getting from one vector to the other as multiplying a vector by a matrix to get another vector.
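The same idea can be written in code. This is a short Python sketch using a hypothetical `matvec` helper and plain lists rather than any particular library. It shows how a two-dimensional array maps one vector to another: each element of the output is the dot product of one row of the matrix with the input vector.

```python
def matvec(matrix, vector):
    """Multiply an m x n matrix (a list of m rows) by a length-n vector.

    Each output element is the dot product of one matrix row with the
    input vector: y[i] = sum over j of matrix[i][j] * vector[j].
    """
    n = len(vector)
    if any(len(row) != n for row in matrix):
        raise ValueError("each matrix row must have the same length as the vector")
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


W = [[1, 2, 3],
     [4, 5, 6]]      # a 2 x 3 matrix: maps 3-element vectors to 2-element vectors
x = [1, 0, -1]
print(matvec(W, x))  # [-2, -2]
```

In a neural network layer, the matrix would typically hold learned weights and the vector the layer's inputs. Multiply-accumulate steps like these, repeated at enormous scale, are what accelerators such as the TPU are built to speed up.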

This can be generalized to tensors that represent the relationship between higher-dimensional arrays. However, although tensors can describe relationships between arrays of arbitrary dimension, in practice the TPU hardware that we will consider is designed to perform calculations on one- and two-dimensional arrays: more specifically, vector and matrix operations. We’ll examine how the TPU speeds up these operations in Part 2 of this post.

Google’s TPU Origin Story

To understand the origins of the TPU, we need to cover Google’s long and evolving history of engagement with machine learning and deep learning. The rationale for Google’s interest in machine learning is clear. Google’s stated mission is “to organize the world's information and make it universally accessible and useful.” Machine learning provides a set of powerful tools to help Google organize that information and make it more useful, from image and speech recognition to language translation and ‘large language models’. And, of course, it has numerous applications in the part of Google that makes billions of dollars every year, its advertising business. Machine learning is a huge deal for Google.

Google’s interest in machine learning dates back to the early 2000s, and the firm’s attention started to shift to deep learning in the early 2010s. Some key milestones, showing Google’s rapidly escalating focus on and commitment to deep learning, include:

2011: Jeff Dean, Greg Corrado, and Andrew Ng start Google Brain as a deep learning research project within Google X.

2013: Google acquires the startup formed by Geoffrey Hinton, Alex Krizhevsky, and Ilya Sutskever for $44m, following the success of their AlexNet image recognition program in late 2012 and an auction process that also involves Microsoft, Baidu, and DeepMind.

2014: Google acquires DeepMind, founded by Demis Hassabis, Shane Legg, and Mustafa Suleyman, for a price of up to $650m.

A key moment came in 2012 when the Google Brain team was able to demonstrate a step change in the performance of image recognition by using deep learning.

Using this large-scale neural network, we also significantly improved the state of the art on a standard image classification test—in fact, we saw a 70 percent relative improvement in accuracy. We achieved that by taking advantage of the vast amounts of unlabeled data available on the web and using it to augment a much more limited set of labeled data. This is something we’re really focused on—how to develop machine learning systems that scale well so that we can take advantage of vast sets of unlabeled training data.

Discussions about developing custom hardware for machine learning had taken place as early as 2006. The conclusion at that stage was that the computing power needed for the applications then envisaged could be provided at low cost using server capacity already available in Google’s huge data centers. Even the 2012 image recognition work ran on CPUs. The paper describing it, “Building High-level Features Using Large Scale Unsupervised Learning”, documents how the team used “a cluster with 1,000 machines (16,000 cores) for three days” to train its model. That’s 1,000 machines, each with 16 CPU cores.

Cade Metz’s book Genius Makers recounts how, when Alex Krizhevsky, the developer who built AlexNet, arrived at Google in 2013, he found that its existing models were all running on CPUs. Krizhevsky needed GPUs. So he took matters into his own hands!

In his first days at the company, he went out and bought a GPU machine from a local electronics store, stuck it in the closet down the hall from his desk, plugged it into the network, and started training his neural networks on this lone piece of hardware.

Eventually, Krizhevsky’s new colleagues would realize that they would need GPUs, and lots of them. In 2014 Google decided to buy 40,000 Nvidia GPUs at a cost of around $130m. These GPUs were put to work training deep learning models for use across Google’s businesses.

As an aside, a firm like Google buying 40,000 GPUs isn’t something that would go unnoticed. If Nvidia needed a signal that GPUs applied to deep learning could be a business of significant scale, then Google almost certainly provided it in 2014.

But these GPUs didn’t necessarily solve Google’s biggest potential problem. They were great for training the models that Google was developing. But what about deploying these models at Google’s scale? Applications for deep learning, such as speech recognition, were likely to be extremely popular. This potential popularity presented Google with a problem. Let’s consider the product that Google is synonymous with, search.

Google search is free to anyone with a web browser and an internet connection. The quality of that search combined with the fact that it is free were essential factors in driving its explosive growth and rapid dominance. It’s estimated that in 2023, Google provided the results of around two trillion searches. To be able to provide search for free and at this scale, Google needs to be able to provide each set of search results very cheaply indeed.

Google’s team could envisage a range of services built using these new deep-learning techniques that would be enormously popular. Google could leverage its brand, and integration into its existing wildly popular products such as search, Gmail, and Android, to again make these services dominant.

But there was a significant problem. At this scale, these services would require investment in more hardware. In 2013 the Google team devised an example, using speech recognition, that illustrated the scale. If users were to speak to their Android phones for only three minutes a day, and Google used deep learning running on CPUs to turn that speech into text, then the company would need to add two or three times the number of servers that it already had in use. This would be prohibitively expensive.

One alternative was to expand the use of GPUs, with Nvidia GPUs being the obvious first choice. By 2013 Nvidia’s GPUs and the associated software ecosystem were already well established and were being used as key tools in machine learning research, both for training and inference. The first Nvidia GPU tailored for general-purpose computing tasks appeared in 2006 and Nvidia’s CUDA framework, used to program general-purpose computing tasks on GPUs, appeared in 2007. Crucially, Nvidia GPUs were available to buy immediately.

And, as we’ve seen, Google did buy Nvidia GPUs in volume and install them in its data centers. However, relying on Nvidia GPUs wasn’t necessarily the optimum solution, either technically or strategically. GPUs are much better than CPUs for the calculations needed for deep learning, but they aren’t completely specialized for those calculations. Relying on GPUs would leave potential efficiencies on the table, and, at Google’s scale, those efficiencies would represent significant cost savings forgone. Furthermore, reliance on a single supplier for hardware that was crucial to Google’s strategy would add significant risk to that strategy.

There were two other alternatives. The first was that Google could use Field Programmable Gate Arrays (FPGAs) that they could program to perform the specialized calculations required for deep learning.

The second was that Google could design and build its own custom hardware in the form of an Application Specific Integrated Circuit (ASIC). Using this custom hardware, specialized for deep learning, would open up the potential for even more efficiencies and would reduce Google’s dependence on a single supplier.

Experiments with FPGAs quickly showed that their performance would be beaten by the GPUs that were already installed. Those FPGAs weren’t completely discarded though.

The FPGA still landed in the data center, six months before the ASIC was available; it served as a “pipe cleaner” for all of the Google deployment processes to support a new accelerator in production.

But the focus would be on an ASIC. The objective would be to develop an ASIC that would generate a 10x cost-performance advantage on inference when compared to GPUs.

But it would take time to develop custom hardware, and precisely how much time would be crucial. For the project to make sense, it could not be a ‘research’ project that would drag on for years. It needed to deliver hardware, at scale, to Google’s data centers, and to do so quickly. That meant drawing on whatever resources and existing knowledge Google could quickly access.

TPU Team and Existing Research

How could Google design complex and innovative hardware quickly without an existing in-house team? Remarkably, it was able to assemble a highly effective team in short order. How did it manage it?

Google was already creating custom hardware for its data centers and could call on members of that team to help with the new project. However, the company hadn’t created its own processor chip before, so it started to bring in new team members with relevant experience. One of the recruits, Norm Jouppi, has recalled how he joined the TPU team after talking to Jeff Dean at a conference, and how the idea of working on an innovative ‘green field’ project appealed to him. Another well-known name to join the team was David Patterson, developer of the original Berkeley RISC design and a key figure in the development of the RISC-V Instruction Set Architecture.

Google soon had a talented and experienced team. But even then they might have struggled to meet the target set for them if they had needed to develop the architecture for the new system from scratch. Fortunately for Google, there was an existing architectural approach that could be readily applied, one first developed some thirty-five years before work started on the TPU.

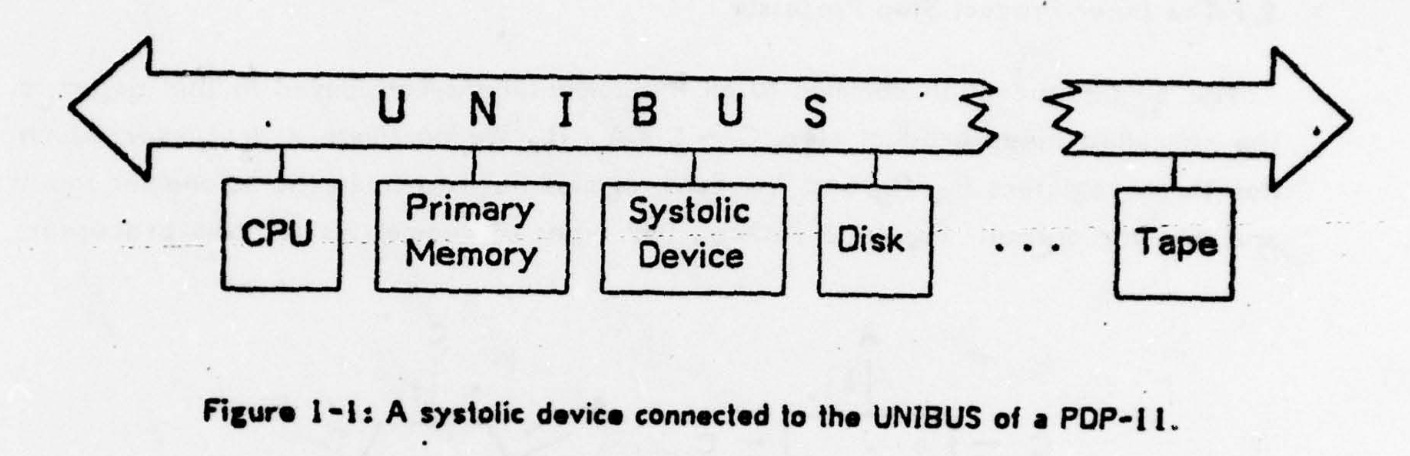

In their 1978 paper “Systolic Arrays (for VLSI)”, H. T. Kung and Charles E. Leiserson of Carnegie Mellon University set out proposals for what they called a ‘systolic system’.

A systolic system is a network of processors which rhythmically compute and pass data through the system….In a systolic computer system, the function of a processor is analogous to that of the heart. Every processor regularly pumps data in and out, each time performing some short computation so that a regular flow of data is kept up in the network.

They then identify one application for systolic systems: matrix computations.

Many basic matrix computations can be pipelined elegantly and efficiently on systolic networks having an array structure. As an example, hexagonally connected processors can optimally perform matrix computation…These systolic arrays enjoy simple and regular communication paths, and almost all processors used in the network are identical. As a result, special purpose hardware devices based on systolic arrays can be built inexpensively using the VLSI technology.

As a reminder of the vintage of the technology that Kung and Leiserson were dealing with, they describe the use of a ‘systolic system’ as an attachment to a DEC PDP-11 minicomputer.

The paper describes alternative ways in which the processing elements of a systolic array might be connected.

One of the proposed arrangements, orthogonally connected, echoed the arrangement of processing elements in the ILLIAC IV supercomputer, which we discussed in ILLIAC IV Supercomputer: DARPA, SIMD, Fairchild and Stanley Kubrick's '2001'.

Kung and Leiserson go on to describe how a hexagonally connected systolic array can be used to perform matrix multiplication.

This original work by Kung and Leiserson was followed over the next decade by more research and many more papers, showing how systolic arrays could be used to solve a variety of problems.

How does a systolic array work? In the next post we will look in much more detail at how Google’s TPU team applied the concept to implement matrix operations efficiently. The basic idea, though, is that data is fed into the array through one or more of its sides, and that data and intermediate results flow through the array, moving between neighboring processing elements with each ‘pulse’. Once enough pulses have passed for all the required calculations to complete, the results emerge from one or more sides of the array.
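For readers who like to see the pulses, here is a toy Python simulation of a systolic array multiplying two matrices. It is a sketch under stated assumptions. It uses a simple orthogonally connected grid in which each processing element keeps its own running sum (an ‘output-stationary’ arrangement). That is neither the hexagonal array of Kung and Leiserson's paper nor necessarily the design the TPU team chose, and the function name `systolic_matmul` is purely illustrative.

```python
def systolic_matmul(A, B):
    """Toy simulation of an n x m systolic array computing C = A x B.

    Output-stationary variant (an assumption, chosen for simplicity):
    processing element (PE) (i, j) accumulates C[i][j]. Row i of A enters
    from the left edge delayed by i pulses; column j of B enters from the
    top edge delayed by j pulses. On each pulse, values move one PE right
    (A) or down (B), then every PE holding both operands does one
    multiply-accumulate. Returns (C, number_of_pulses).
    """
    n, k, m = len(A), len(B), len(B[0])
    if any(len(row) != k for row in A):
        raise ValueError("A must have as many columns as B has rows")
    acc = [[0] * m for _ in range(n)]       # running sum held in each PE
    a_reg = [[None] * m for _ in range(n)]  # A value currently in each PE
    b_reg = [[None] * m for _ in range(n)]  # B value currently in each PE
    pulses = k + n + m - 2                  # last operands meet at pulse k+n+m-3
    for t in range(pulses):
        # 1. Pass data to neighbors, working back from the far edge so that
        #    no value moves more than one PE per pulse.
        for i in range(n):
            for j in range(m - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(m):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # 2. Feed skewed inputs into the left column and top row.
        for i in range(n):
            s = t - i
            a_reg[i][0] = A[i][s] if 0 <= s < k else None
        for j in range(m):
            s = t - j
            b_reg[0][j] = B[s][j] if 0 <= s < k else None
        # 3. Every PE holding a pair of operands multiplies and accumulates.
        for i in range(n):
            for j in range(m):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, pulses


A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]
C, pulses = systolic_matmul(A, B)
print(C, pulses)  # [[19, 22], [43, 50]] 4
```

The skewing of the inputs is what makes the timing work. The element of A and the element of B that must be multiplied together arrive at the same processing element on the same pulse, and no processing element ever needs data from anything other than its immediate neighbors.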

By 2013 some of the original motivation behind Kung and Leiserson’s ideas, particularly the need to work within the limits of 1970s fabrication technology, had fallen away. However, the inherent efficiency of this approach for tasks such as matrix multiplication remained, as did its relatively low power consumption, which was of particular relevance in 2013. So the TPU would use systolic arrays.

Google had the engineers and the architectural approach, but there was still a gap in the capabilities it would need to turn the ideas into working silicon. To fill it, Google turned to LSI Corporation (now part of Broadcom). At first sight, what is now Broadcom may look like a surprising partner. It’s not a company usually associated with machine learning hardware. But it could work with a manufacturing partner like TSMC to make Google’s designs a reality, and to do so at scale.

Finally, getting the TPU to the point where it could be used for real workloads meant a lot more than just manufacturing lots of chips. It meant building software so that Google’s deep learning tools could run on the new hardware. The new design necessarily meant a completely new instruction set architecture, and that meant compilers had to be adapted for it, no small feat in itself.

Deployment and Use

Members of the TPU team have summarised their objectives as:

Build it quickly

Achieve high performance

...at scale

...for new workloads out-of-the-box...

all while being cost-effective

We’ll cover performance and costs in Part 2 of this post. They certainly did build it quickly: the first TPUs were deployed in Google’s data centers in early 2015, just 15 months after the project started.

How did they achieve this? The paper Retrospective on “In-Datacenter Performance Analysis of a Tensor Processing Unit” summarises some of the factors:

We sometimes tout the TPU’s 15-month time from project kickoff to data center deployment, much shorter than is standard for production chips. Indeed, subsequent TPUs, which Google relies upon for production, have multiyear design cycles. The fast time-to-market was enabled by a singular schedule focus, not just in architecture—where the 700 MHz clock rate enabled easy timing closure and the aging 28nm process was fully debugged—but also in heroic work by our datacenter deployment teams.

The ‘aging 28nm process’ and relatively low clock rate were helpful factors. What this omits though is Google’s ability to marshal the necessary resources to support a project like this.

The TPU would quickly find itself used inside Google for a wide range of applications. We’ll look at these in more detail in Part 2, but first, one interesting and high-profile example: Google’s DeepMind used TPUs for its AlphaGo project. The version of AlphaGo that defeated world Go champion Lee Sedol in March 2016 was powered by TPUs, as was its successor, AlphaGo Zero, which DeepMind described in 2017.

AlphaGo Zero is the program described in this paper. It learns from self-play reinforcement learning, starting from random initial weights, without using rollouts, with no human super-vision, and using only the raw board history as input features. It uses just a single machine in the Google Cloud with 4 TPUs.

AlphaGo’s victory over Lee Sedol in 2016 was a momentous moment. That AlphaGo Zero, which went on to decisively beat the version of AlphaGo that played Lee Sedol, needed so little hardware is remarkable.

TPU Announcement

A firm like Google doesn’t need to share details of what is going on behind the scenes in its data centers. So the TPU remained a secret for more than a year after it was first deployed in 2015.

Then, in his keynote at the Google I/O conference on 18 May 2016, Google’s CEO Sundar Pichai announced that:

We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better performance per watt for machine learning.

This announcement was accompanied by a short blog post, Google supercharges machine learning tasks with a TPU custom chip. Alongside some very brief technical details, the post shared some examples of how the TPU was helping to power Google’s services.

TPUs already power many applications at Google, including RankBrain, used to improve the relevancy of search results and Street View, to improve the accuracy and quality of our maps and navigation. AlphaGo was powered by TPUs in the matches against Go world champion Lee Sedol, enabling it to "think" much faster and look farther ahead between moves.

This new openness about the TPU was followed by several presentations by members of the TPU team, describing the architecture and its development. We’ll share the highlights of these presentations in Part 2 of this post.

TPU v2

The first version of the TPU had been developed with one objective in mind: to reduce the cost of inference at scale. It was not designed to speed up training or to reduce its cost. In fact, TPU v1 had several deficiencies when used for training. So in 2014, before TPU v1 had even shipped, Google started a project to create its successor, TPU v2, which would address those deficiencies. We’ll look at TPU v2 and its successors in later posts. The next post in this series, though, will look at the architecture and performance of TPU v1.
