GPU Compute: Costs, Trends, Huang's Law and FLOPS in the Brain

What can we learn about GPU performance trends, and how many FLOPS does a human brain provide?

In our last post, we looked at the impact of the current ‘AI boom’ on the world’s total accessible computing resources. If you haven’t already, then you may want to read that post first for background.

Deep Thought, Deep Learning and the World's Total Computing Resources

In this post we look at the impact that investment in AI hardware is having on the world’s total computing capabilities.

We estimated that if 550,000 Nvidia H100s are produced in 2023, that would add around 14 ExaFLOPS of 64-bit floating point (or 400 ExaFLOPS of 32-bit tensor floating point), compared with an existing installed base of 25 ExaFLOPS (or around 50 ExaFLOPS of 32-bit floating point).
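As a quick sanity check on the FP64 figure, the arithmetic can be sketched in a few lines of Python. This assumes the per-GPU figure of 26 TeraFLOPS of FP64 quoted later in this post, and reads the 25 ExaFLOPS installed base as an FP64 number; the variable names are purely illustrative.

```python
# Rough check of the "around 14 ExaFLOPS" estimate.
# Assumption: 26 TeraFLOPS FP64 per H100 (the figure used later in this post).
H100_UNITS_2023 = 550_000
H100_FP64_TFLOPS = 26
INSTALLED_BASE_EXAFLOPS = 25  # assumption: treated here as an FP64 figure

TFLOPS_PER_EXAFLOPS = 1_000_000  # 1 ExaFLOPS = 10^6 TeraFLOPS

added_fp64_exaflops = H100_UNITS_2023 * H100_FP64_TFLOPS / TFLOPS_PER_EXAFLOPS
share_of_installed_base = added_fp64_exaflops / INSTALLED_BASE_EXAFLOPS

print(f"Added FP64 capacity: {added_fp64_exaflops:.1f} ExaFLOPS")
print(f"Relative to installed base: {share_of_installed_base:.0%}")
```

On these assumptions, a single year of H100 production would add FP64 capacity equal to a bit more than half of the installed base.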

We didn’t consider the cost of adding these new resources. So are we getting value for money relative to the cost of existing ‘supercomputers’?

Costs of the AI Boom vs Supercomputers

The cost of an H100 that is usually quoted is around $30,000.

Each H100 provides 26 TeraFLOPS of 64-bit floating point (FP64) capability, so we would need around 46,000 H100s to reproduce the FP64 capability of the Frontier supercomputer.

So the cost of that capability would be around $30,000 × 46,000, or $1.38 billion.
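The estimate above can be reproduced with a short calculation. Note that Frontier's FP64 figure is not stated in this post: the sketch below assumes its TOP500 benchmark result of roughly 1.194 ExaFLOPS, and the comparison uses the roughly $600m quoted cost of Frontier. Treat it as a back-of-envelope check, not a precise costing.

```python
import math

# Assumption: Frontier's FP64 throughput taken as ~1.194 ExaFLOPS
# (its TOP500 benchmark result); this number is not given in the post.
FRONTIER_FP64_FLOPS = 1.194e18
H100_FP64_FLOPS = 26e12          # 26 TeraFLOPS FP64 per H100
H100_UNIT_PRICE_USD = 30_000     # the commonly quoted price
FRONTIER_QUOTED_COST_USD = 600e6 # roughly $600m

# Number of H100s needed to match Frontier on FP64, rounded up.
h100s_needed = math.ceil(FRONTIER_FP64_FLOPS / H100_FP64_FLOPS)
total_cost_usd = h100s_needed * H100_UNIT_PRICE_USD
cost_ratio = total_cost_usd / FRONTIER_QUOTED_COST_USD

print(f"H100s needed: {h100s_needed:,}")
print(f"Total cost: ${total_cost_usd / 1e9:.2f} billion")
print(f"Multiple of Frontier's quoted cost: {cost_ratio:.1f}x")
```

This ignores everything other than the GPUs themselves (networking, storage, power, cooling), so the real gap would likely be larger.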

The quoted cost of Frontier is around $600 million, so the H100 alternative, at more than twice the price, looks a little expensive! This shouldn’t be too surprising though.

H100 is a very profitable product. Nvidia is, unsurprisingly, taking advantage of the current demand for its products to extract substantial margins - probably a lot more than AMD was able to do with Frontier.

As we’ve seen, the H100, with its tensor cores, is specialised for machine-learning type calculations. Frontier would not be able to reproduce the performance of the H100 for this type of calculation.

If you just want FP64 performance then H100s probably aren’t the best option!

It’s useful to do some other sense checks on our original estimates too.

Frontier uses 37,888 Radeon Instinct MI250X GPUs, which are optimised for FP64 capabilities. The H100 is specialised for machine learning, so needing around 20% more H100s to meet an FP64 compute target doesn’t seem unreasonable.
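The "20% more" figure follows directly from the two GPU counts. A minimal check, using the rounded estimate of 46,000 H100s from earlier:

```python
# Compare the estimated H100 count with Frontier's actual MI250X count.
H100S_NEEDED = 46_000        # rounded estimate from earlier in the post
FRONTIER_MI250X_COUNT = 37_888

gpu_ratio = H100S_NEEDED / FRONTIER_MI250X_COUNT
extra_gpus_pct = (gpu_ratio - 1) * 100

print(f"H100s per MI250X: {gpu_ratio:.2f}")
print(f"Extra GPUs needed: {extra_gpus_pct:.0f}%")
```

The ratio comes out at a little over 1.2, consistent with the rough 20% quoted above.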

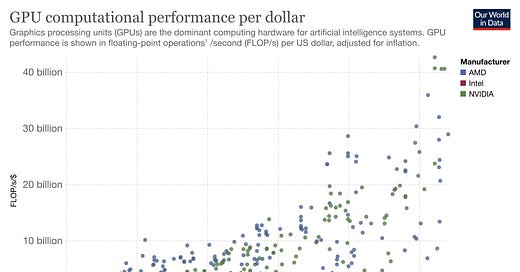

After the break: more on AMD vs Nvidia price / performance over time, GPU performance trends, Huang’s Law, where GPU performance improvements have come from, and how many FLOPS the human brain provides.

The rest of this edition is for paid subscribers. If you value The Chip Letter, then please consider becoming a paid subscriber. You’ll get additional weekly content, learn more and help keep this newsletter going!