iAPX432 : Gordon Moore, Risk and Intel’s Super-CISC failure

The legacy of Intel's over-ambitious 32-bit 'micromainframe' failure.

When I heard that Gordon Moore had passed away, my first instinct was to write something about Moore’s Law. After all, it’s helped shape our history over more than five decades. And Moore was more than just an observer of his ‘law’ as a phenomenon, not just a visionary, seeing the future. He contributed materially to shaping that future.

We’ll return to Moore’s Law at a later point. Today though, I want to focus on another aspect of Moore’s life which has had less attention. Overshadowed by his achievements in other areas, it’s almost hiding in plain sight. It’s Moore as risk taker.

Moore took calculated risks all his life. He was one of the ‘traitorous eight’ that left William Shockley to found Fairchild. He joined with Robert Noyce to found Intel. And he took significant risks whilst at Intel.

But when we see pictures of Moore, we don’t think of him as a risk-taker. Perhaps it’s that bespectacled, genial image. Or perhaps it’s the fact that he was so spectacularly successful. If all your bets pay off, then where is the risk?

So today’s post is about a risk that Moore took that didn’t work. It was a project that, commercially, was a disaster. But, with the benefit of hindsight, we can now see that it was a reasonable risk to take. In fact, the spectacular failure of this project probably helped lay the seeds for Intel’s success in the late 1980s, 1990s and beyond.

The project in question is Intel’s iAPX432, the 32-bit super-CISC processor that Intel developed in the latter half of the 1970s.

We’re going to be highly critical of the iAPX432, explore why it failed and why it was misconceived, but also look at why Intel’s management might have believed that it was a sensible project to take on.

All the way through, we need to remember one thing. Moore and Intel were right to push into complex, high-performance microprocessor design. The company’s future lay in adding value to the increasingly complex integrated circuits that they were manufacturing. And the processor was where there was most value to add.

So this is going to be an odd sort of tribute to Gordon Moore. One that focuses on something he got wrong. But I hope that in doing so he will emerge as an even bigger figure than we can see through the narrower lens of ‘Moore’s Law visionary’.

It is lucky for all of us that Intel's 432 processor project never made it back in the early eighties. Otherwise a really horrible Intel architecture might have taken over the world. Whew!

Steve Friedl

If we can learn a lot from studying technology failures, then the Intel iAPX432 should be a rich source of lessons. The most common reaction to Intel’s ‘flagship’ processor development program of the late 1970s and 1980s is probably “just what were they thinking?”

With the benefit of hindsight, it seems misconceived on just about every level. Six years in development, it was repeatedly delayed and when it was finally launched it was too slow and hardly sold at all. It was officially cancelled in 1986, just five years after it first went on sale. It’s not an exaggeration to call it a commercial disaster.

Yet this was still the Intel of Robert Noyce, Gordon Moore and Andy Grove, some of the brightest and shrewdest minds not just of this, but of any era.

So whilst it’s interesting to look at the reasons why the iAPX432 failed, it’s also useful to consider why Intel’s senior management thought it would work and why they got it wrong. If they could make these mistakes, then anyone could.

We’ll look at the story of the iAPX432, examine some of its technical innovations and failures, and then try to understand why Intel got it wrong.

Just to be clear on one point. The iAPX432 was a commercial failure, but it can be argued that it was very much a technical success. The actual hardware and software worked. For such a challenging project with so much technical innovation, that was a remarkable achievement by itself. If this post is very critical of the iAPX432, it’s of the overall approach and the strategy behind the design and not of the work of the engineers who made it. In fact, I’m a bit in awe of what they achieved and believe that it’s a shame that their work has been overshadowed by the iAPX432’s problems.

It’s also worth emphasising that Intel’s push into high-performance microprocessors was correct. It’s just that this particular design was misconceived. So at the highest level Intel, and particularly Gordon Moore who was Intel’s CEO at this time, got it absolutely right.

‘Success has many fathers, but failure is an orphan.’ There seems to be little material on the failure of the iAPX432 project available to study. There are no oral histories or extensive YouTube interviews, which is a shame. There is however an excellent paper that analyses the iAPX432’s technical failings, and particularly its poor performance, which we’ll discuss in detail later.

Forty years on there seems to be no trace online of any useful software that was written for the iAPX432. Possibly as a result, there doesn't seem to have been any attempt to build an emulator for the architecture. Apart from the extensive documentation of the era, it’s disappeared, leaving little trace behind.

Except, arguably, its failure has left an important legacy that still influences the way we do mainstream computing today.

So let’s dig into the iAPX432 and see what went wrong, what we can learn and how its failure influenced the development of computing in the years that followed.

A ‘Successor’ To The 8080

The iAPX432 started as the Intel 8800 (also sometimes referred to as the 8816), a project to develop a successor to the Intel 8080. The 8080 had been a major success, powering key machines, such as the Altair 8800, in the first generation of 8-bit personal computers.

Work on the design started at Intel’s offices in Santa Clara. Management of the project was taken up by Bill Lattin, who had been recruited from Motorola, where he had helped to build the 6800, Motorola’s successful rival to the 8080.

Soon he had concerns about the new design, known internally as ‘future’. He visited Gordon Moore, then Intel’s president, to express his concerns about the extreme complexity of ‘future’ and his inability to evaluate how good the proposed architecture was. The response from Moore is recounted in the book ‘Inside Intel’:

Moore was undaunted. For all the success of the 8080 to date, Intel wasn’t making any inroads into the computer business itself. .. The new design, Moore believed, would be the one that would catapult Intel into the computer business. He sent Lattin away inspired, reassured and convinced that the company’s top management was behind the project 100 per cent.

In March 1977 the team of Lattin plus seventeen engineers moved to Portland. The project grew and grew. Eventually, Intel admitted that the project had cost around $25 million (although other estimates have put the cost as high as $100 million), equivalent to about $100 million in 2023.

The 8800 became the iAPX 432, with the 32 indicating that this would be a 32-bit system. iAPX stood for ‘Intel Advanced Performance Architecture’. For a while the iAPX brand was used for x86 designs, with the 80286 becoming the iAPX 286, only reverting to the previous designation when it was clear that the iAPX 432 was a failure.

The new design was finally released in 1981. Knowledge that Intel was working on an advanced design, labelled as a ‘micromainframe’, had been public for a number of years, though, and expectations were high.

The iAPX 432 launched with a resounding thud.

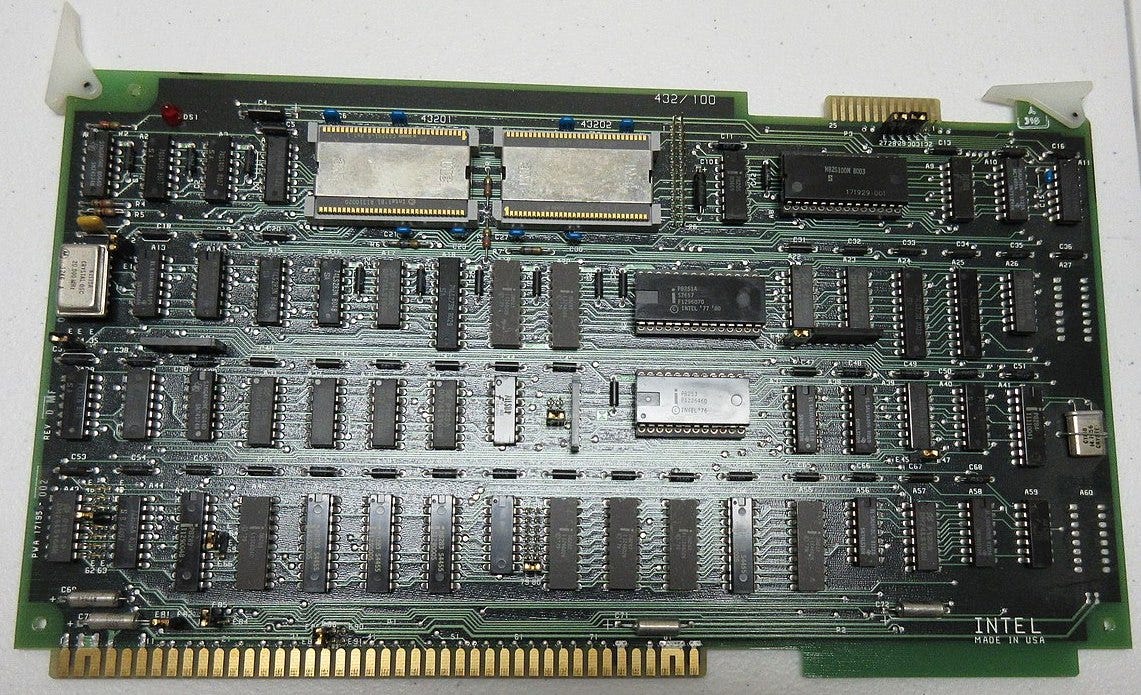

A basic implementation needed three VLSI chips. Two of these chips together formed the ‘General Data Processor’, with the third called the ‘Interface Processor’.

There was no attempt to make it backwards compatible with existing Intel products. In fact the novelty of the design seems like an attempt to make it as incompatible as possible not only with Intel’s products but with everything, including most existing programming languages.

The full set of three chips cost $1,500 at launch and an evaluation kit $4,250. For comparison, the 80286-based IBM PC/AT cost around $6,000 at launch in 1984. Byte reported that if you didn’t want to use the evaluation kit then:

Intel has also released an Ada cross-compiler for the iAPX432. The compiler runs on a DEC (Digital Equipment Corporation) VAX-11/780 or an IBM 370. It costs $30,000. A $50,000 hardware link is needed to download the compiled code to Intel's $4250 development board.

So that’s $84,250 plus the cost of the VAX. Perhaps it’s just me, but I detect a degree of incredulity in Byte’s reporting of this.

The same magazine reported that Intel expected to sell 10,000 of the chipsets in the first year. There doesn’t seem to be a published record of how many actually sold.

And the iAPX432 was incredibly slow. It was around four times slower than the simpler and cheaper 80286 that would be launched in 1982. With a much faster alternative, with a bigger range of software available, the iAPX432 was doomed.

The iAPX432 Architecture

So let’s have a look at the iAPX432 architecture.

Rather than look to improve their existing design, or follow the lead of another successful architecture, the iAPX432 design took a ‘shopping list’ of apparently desirable and fashionable features and added them all into the design.

The key new features included:

Ada : The architecture would be programmed using the Ada programming language, which at the time was seen as the ‘next big thing’ in languages.

Object-oriented programming : Consistent with this the architecture would support object-oriented programming natively in hardware.

Garbage collection : Again to support Ada the system would natively implement garbage collection in hardware.

Data structure support : The system would include native support for management of data structures needed to implement high level programming languages.

Tagged memory support : The system had ‘tagged’ and protected memory for improved security.

Multiprocessors : Multiple processors could be linked together in a single system.

Fault tolerance : The system would be able to detect and deal with errors as they occurred.

Floating point : Floating point arithmetic was implemented in hardware.

In principle these features do seem to be strongly aligned with the way in which computing has developed over the last forty years. With the exception of Ada, which is still in use, but is definitely niche, all of the other ideas are mainstream in the 2020s.

So if the general concepts incorporated in the iAPX432 were actually quite prescient, why did it fail? I think we can identify three key and inter-linked areas: complexity, flexibility and performance.

Complexity : Just too much

The most complex processor design that Intel had built prior to starting on the iAPX432 was the 8-bit 8080. That design had been led by Federico Faggin, working with his key aide Masatoshi Shima. By 1975 Faggin and Shima had left Intel after falling out with Andy Grove. So Intel had lost its most experienced designers.

Then the architecture was just too complex for the manufacturing processes of the time. The design of the 8080 still involved cutting rubylith by hand. It must have been clear early on that the design would not fit onto a single die. That made the design less efficient and more expensive, both for Intel to make and for customers to use.

The combined transistor count of the iAPX432’s two General Data Processors was 97,000 and that of the Interface Processor was 49,000. The Intel 8080 had 4,800 transistors. So the combined transistor count for an architecture started in 1975 was around 30 times the ‘state of the art’ for the time. That’s around 5 ‘generations’ of Moore’s Law, or around seven and a half years at one doubling every 18 months. Indeed, roughly consistent with this, the 80286, launched in 1982, had around 120,000 transistors.
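The arithmetic behind that estimate can be checked in a few lines. This is only a sketch using the transistor counts quoted above; the 18-month doubling period is an assumption (it is the figure that makes five doublings come to seven and a half years), and the variable names are purely illustrative.

```python
import math

# Transistor counts as quoted in the text.
GDP_PAIR = 97_000             # the two General Data Processor chips combined
INTERFACE_PROCESSOR = 49_000
INTEL_8080 = 4_800

# Assumption: one Moore's Law 'generation' is a doubling every 18 months.
MONTHS_PER_DOUBLING = 18

total = GDP_PAIR + INTERFACE_PROCESSOR
ratio = total / INTEL_8080
doublings = math.log2(ratio)                  # how many doublings give this ratio
years = doublings * MONTHS_PER_DOUBLING / 12

print(f"combined transistors: {total:,}")
print(f"ratio to the 8080:    {ratio:.1f}x")
print(f"doublings needed:     {doublings:.2f}")
print(f"years implied:        {years:.1f}")
```

The ratio comes out at a little over 30, which is just under five doublings, so the ‘seven and a half years’ figure is a fair rounding under that assumption.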

So it was probably inevitable that the iAPX432 would take around six years from inception to launch, even with the compromise of a multi-chip implementation.

Perhaps six years doesn't sound too bad from the perspective of 2023. But architectures were evolving quickly in the late 1970s, and six years left a gaping hole in Intel’s product line and an opportunity for competitors (both inside and outside Intel) to fill.

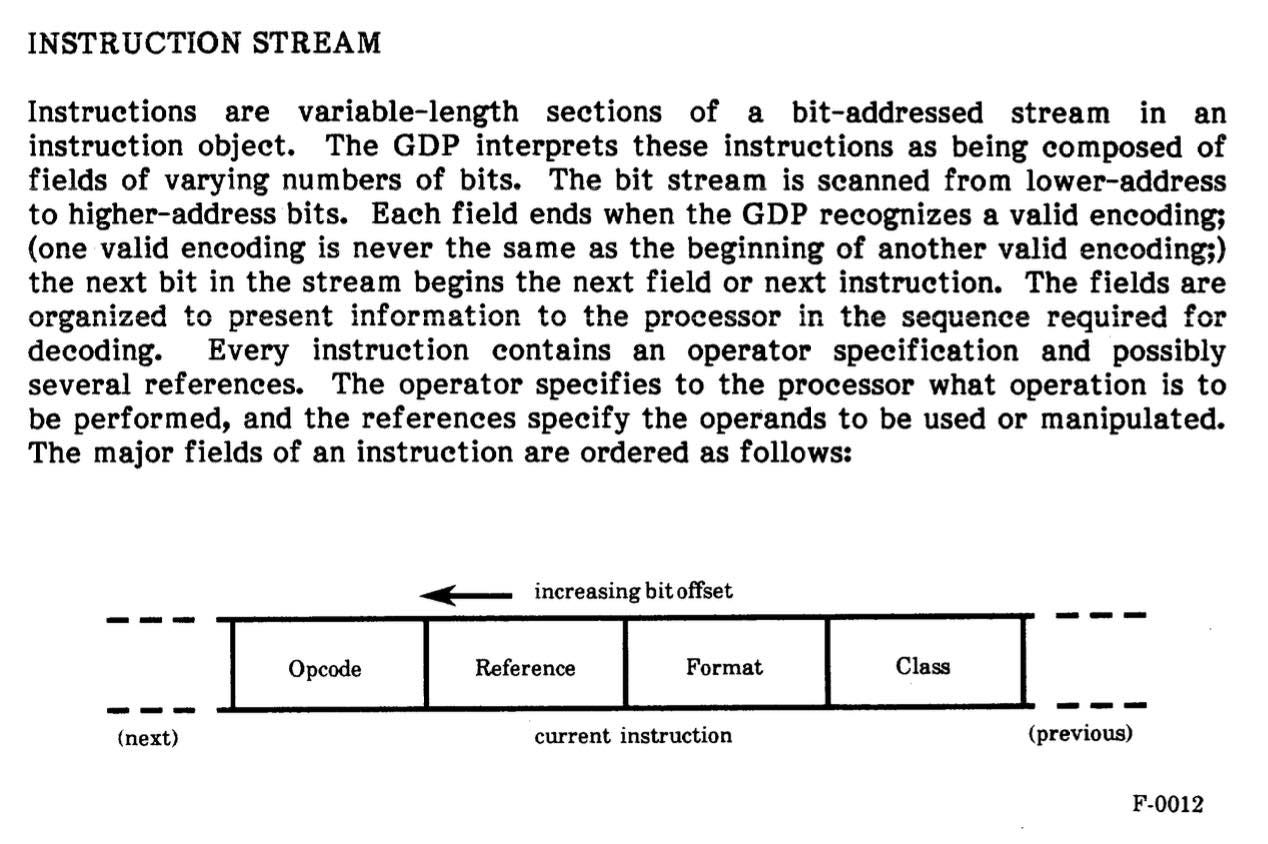

Some of the complexity was necessary to implement the iAPX432’s design. Here is a diagram of the iAPX432’s addressing path:

Without going into a lot of explanation of what’s happening here, I think it’s clear that there was a lot of indirection in the iAPX432’s basic approach to memory addressing!

Plus, the iAPX432 had quite a bit of unnecessary complexity. One example: It had variable length instructions that ranged from 6 to more than 300 bits long. Yes, instructions weren’t byte aligned and could be more than 37 bytes long!

This meant that the design needed a ‘barrel-shifter’ to get the instruction bits ‘in the right place’ for them to be decoded, and that barrel-shifter needed transistors that could be used elsewhere. It was yet another piece of complexity added to an already complex design.
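To see why bit-aligned instructions are awkward, here is a minimal sketch of what a decoder has to do in software terms. The field widths, the bit order and the `read_bits` helper are all hypothetical and chosen for illustration; this is not the iAPX432’s actual instruction encoding.

```python
def read_bits(stream: bytes, bit_offset: int, width: int) -> int:
    """Return `width` bits starting at `bit_offset`, most significant bit first.

    Assumption: big-endian bit order, picked only to keep the example readable.
    """
    total_bits = len(stream) * 8
    if width < 0 or bit_offset < 0 or bit_offset + width > total_bits:
        raise ValueError("field lies outside the stream")
    as_int = int.from_bytes(stream, "big")
    # The shift below is the job a hardware barrel shifter does:
    # slide the wanted field down so that it starts at bit 0.
    shift = total_bits - bit_offset - width
    return (as_int >> shift) & ((1 << width) - 1)


# Two hypothetical fields packed back to back: 6 bits, then 10 bits.
stream = bytes([0b10110100, 0b11100001])
first = read_bits(stream, 0, 6)     # starts on a byte boundary
second = read_bits(stream, 6, 10)   # starts in the middle of the first byte
print(first, second)
```

Because the second field starts at bit 6, no fixed byte or word fetch lines it up for decoding. Every field needs its own shift and mask, and the position of the next field depends on the width of the one before it.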

One final point on complexity. We know (for example from construction projects) that it’s really hard to estimate how long complex projects will take. I suspect that Intel’s management thought at the outset that the iAPX432 would take considerably less than the six years that it eventually did take.

Flexibility : Not enough

The next problem is that the architecture froze into hardware implementations of concepts that were evolving quickly. Hardware versions of these concepts could be left behind as thinking on each of these topics developed.

Object-oriented programming languages have become pervasive. Today we use Java, Python, Ruby, C++ and so on. But each of these languages takes subtly different approaches.

Just one example of where the iAPX432 would definitely have been quickly outdated. Objects were limited to at most 64KB in length. This might have looked enough in 1975, but even by 1981, I suspect it was looking problematic.

Just as x86 has been developed and extended over the years, I’m certain that the iAPX432 would have needed to develop too. I shudder to think, though, at the level of complexity that a 2023 version of the architecture, with legacy support, would have needed.

Performance : Super-CISC and Anti-RISC

All the previous problems might have been fixable if the iAPX432 didn’t have such atrocious performance.


I’ve called the iAPX432 a super-CISC processor, and it did have lots of very complex instructions. It might be better considered though as a kind of ‘anti-RISC’ processor, in that its design actively hindered the fast and efficient execution of simple operations.

If RISC was about removing instructions that might slow the architecture down, the iAPX432 removed instructions that had a chance of executing quickly. It should be no surprise that it was painfully slow even when compared to a legacy-derived design such as the Intel 80286.

Two examples. First, it was a stack machine with no user accessible registers and so any operation required one or more memory accesses. The performance penalty was made even worse by the fact that the complexity of the design left no room for a data cache, which might have reduced the frequency with which the design needed to access external physical memory.

Second, it had no notion of immediate, or constant, values other than zero or one.
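A toy cost model makes the combined effect of these two points concrete. The sketch below counts data-memory accesses for a single statement, `x = y + constant`, on two imaginary machines. The per-operation costs are illustrative assumptions, not measured iAPX432 figures, and instruction fetches are ignored entirely.

```python
# Toy cost model: counts data-memory accesses only.
# All per-operation costs are assumptions made for illustration.

def stack_machine_accesses(constant: int) -> int:
    """Accesses for `x = y + constant` when the operand stack lives in memory."""
    push_y = 2                                   # read y, write it to the stack
    # Only 0 and 1 exist as literals; any other constant is fetched from memory.
    push_const = 1 if constant in (0, 1) else 2  # (read constant,) write to stack
    add = 3                                      # read two stack slots, write the sum
    pop_x = 2                                    # read the result, write it to x
    return push_y + push_const + add + pop_x


def register_machine_accesses() -> int:
    """Accesses for the same statement with registers and immediate operands."""
    load_y = 1         # read y into a register
    add_immediate = 0  # the constant is encoded in the instruction itself
    store_x = 1        # write the register back to x
    return load_y + add_immediate + store_x


print("stack machine, constant 5:", stack_machine_accesses(5))
print("stack machine, constant 1:", stack_machine_accesses(1))
print("register machine:         ", register_machine_accesses())
```

Under these assumptions the memory-based stack design makes several times as many data accesses for the same statement, and without a data cache each of those accesses goes out to external memory.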

For a detailed and highly readable account of the performance failings of the iAPX432 there is the paper “Performance Effects of Architectural Complexity in the Intel 432” by Robert Colwell, Edward Gehringer and Douglas Jensen.

Colwell was working for Multiflow Corporation at the time of the paper. Despite his criticisms of the iAPX432 he would go on to work for Intel, where he had a leading role in several key designs of the 1990s, including the Pentium Pro.

Colwell’s conclusions succinctly quantify where the iAPX432’s performance problems come from:

This paper has shown that the 432 loses some 25-35 percent of its potential throughput due to the poor quality of code emitted by its Ada compiler. Another 5-10 percent is lost to implementation inefficiencies such as the 432’s lack of instruction stream literals and its instruction stream bit-alignment. These losses are substantial, and essentially unrelated to instruction set complexity or object orientation.

Having established what the 432 should have done differently, we proceeded to investigate what it could have done had its implementation technology been incrementally better. We found that a combination of plausible modifications to the 432, such as wider buses and provision for local data registers, increased performance by another 35-45 percent.

Colwell concludes that whilst some of the iAPX432’s inefficiencies were down to its basic architecture, most were down to how that architecture was implemented.

A Survivable Disaster

The iAPX432 was a big bet for Intel, and one that it got wrong, but it was a survivable bet.

It was survivable in part because Intel was growing so quickly. The, at least, $25 million spent on the project was more than Intel’s total R&D budget for the year. By 1982 that R&D budget had grown to $130m.

And it’s just possible that the failure of the iAPX432 was the making of Intel. The project was so late that when the time came to sell the ‘stopgap’ 8086, Intel’s sales teams were not distracted by having to try to sell the iAPX432 as well.

As we know, ‘Operation Crush’, Intel’s sales campaign for the 8086, was an enormous success, and the 8086 and its x86 successors came to dominate the desktop and server processor markets. Not a bad outcome for Intel in the end.

The Legacy of the iAPX432

As the most ambitious and most CISCy design of its era, the iAPX 432 certainly gave CISC a bad name at the start of the 1980s, just when RISC ideas were coming to prominence. It probably deterred others from trying anything similar.

Quoting Robert Colwell again:

The 432 was unique among microprocessors in the degree to which it incorporated architectural innovations. Perhaps due to the initial barrage of publicity and the consequent high expectations, the disappointing reality of the 432’s performance made it the favorite target for whatever point a researcher wanted to make.

As a research effort the 432 was a remarkable success. It proved that many independent concepts such as flow-of-control, program modularization, storage hierarchies, virtual memory, message-passing, and process/processor scheduling could all be subsumed under a unified set of ideas. These concepts have attracted wide interest, but interest has lately been dulled somewhat by a fear that the 432’s experience strikes at the viability of the concepts.

So perhaps the failure of the iAPX432 cast a long shadow.

In an alternative history Intel, rather than developing the iAPX432, invested heavily in a less ambitious new architecture that wasn’t backwards compatible with the 8080, perhaps something like the Motorola 68000 or DEC VAX. Maybe IBM would have chosen that architecture too and so today we’d be using that rather than x86.

On a less speculative level, aspects of the iAPX 432 design made it into the i960 processor, which, perhaps ironically, was a RISC based design intended for embedded applications and which was moderately successful.

More broadly, building such an ambitious design must have honed Intel’s skills, which could then be applied to developing other microarchitectures, most importantly, of course, the x86 series. It’s entirely to the company’s credit that key individuals who successfully built the iAPX432 went on to have long and distinguished careers at Intel, despite the failure of the design.

To return to Gordon Moore again, not only did he support the iAPX432 project at the outset but, as President and CEO throughout its development, he must have had to reaffirm that support as the project continued. In fact, according to his biographer Arnold Thackray, in “Moore's law : the life of Gordon Moore, Silicon Valley's quiet revolutionary”, Moore took a lot of personal responsibility for the project:

Gordon admitted that "to a significant extent," he was personally responsible. "It was a very aggressive shot at a new microprocessor, but we were so aggressive that the performance was way below par."

Perhaps the project was a mistake, but I think we can all admire the willingness to take risks, to invest in the future, to back those doing the work and to take responsibility. Not just a visionary, but a leader who made the future happen.

Just What Were They Thinking? Six Lessons from the iAPX432 and Further Reading

So let’s have a look at what Intel’s management might have been thinking, and why things didn’t turn out as they expected.