Once Again Through Eratosthenes' Sieve: 40 Years of Progress

How much extra performance has 40 years of Moore's Law generated?

Performance has always been a key factor in evaluating how capable computer systems are, so it’s interesting to look back at how far we have come since the earliest days of personal computing. Today, we’re first going to look at a snapshot of performance in 1983 across hundreds of systems from that era.

Having enjoyed our nostalgic look back over four decades, we’re then going to compare these results with where we are today and see how much progress has been made. We know that Moore’s Law has increased the number of transistors in a single processor core from a few tens of thousands to hundreds of millions, but what has that meant in practice?

Paid subscribers can download a spreadsheet with 1983's results in full. Just a gentle reminder, too, that there are 24 hours left in our subscription offer. Grab a heavily discounted annual subscription while they are still available, and get supplementary information, extra posts and more links and commentary every week.

Personal Computers in January 1983

In January 1983, Byte magazine ran an article comparing how long 241 system/language combinations took to run a simple test program. The systems ranged from early 8-bit microcomputers, through the original IBM PC and other early 16-bit systems, to a small selection of minicomputers and mainframes, and even Cray supercomputers. The languages included C, Pascal, BASIC, Fortran, Forth and assembly.

In January 1983 the computer industry was in a period of transition. Eight-bit microcomputers, typically based on the MOS Technology 6502 or Zilog Z80, were still very common alongside early sixteen-bit systems using microprocessors from Intel (8086 in 1978), Motorola (68000 in 1980) or Zilog (Z8000 in 1979).

The IBM PC, based on the Intel 8088, had launched in 1981, but the ‘PC’ and compatibles had not yet established their complete dominance of the personal computer market.

It would be a year before Apple launched the Macintosh. Its predecessor, the Lisa, was announced that same month (January 1983), but either there wasn’t enough time to get it into the benchmarks (quite likely) or Byte readers couldn't afford its launch price of $9,995 (also quite likely!).

The Program(s)

To generate comparable results across all these systems, Byte chose a single simple task, the Sieve of Eratosthenes, a way of identifying prime numbers inside a numerical range. From Wikipedia:

It does so by iteratively marking as composite (i.e., not prime) the multiples of each prime, starting with the first prime number, 2. The multiples of a given prime are generated as a sequence of numbers starting from that prime, with constant difference between them that is equal to that prime.

Crucially, the approach doesn't rely on division to test if a number is prime.
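To make the "no division" point concrete, here is a minimal Python sketch of the textbook sieve. It is not Byte's version, and the function name `simple_sieve` is just an illustrative choice. Each prime's multiples are crossed off by repeatedly adding the prime, so the only arithmetic in the inner loop is addition.

```python
def simple_sieve(limit):
    """Return a list of all primes <= limit (textbook Sieve of Eratosthenes)."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, limit + 1):
        if is_prime[p]:
            # Cross off p's multiples 2p, 3p, 4p, ... by adding p each time,
            # i.e. a sequence with a constant difference equal to the prime.
            multiple = p + p
            while multiple <= limit:
                is_prime[multiple] = False
                multiple += p
    # Whatever was never crossed off is prime; no division was needed.
    return [n for n in range(2, limit + 1) if is_prime[n]]


print(simple_sieve(30))
```

Running it prints `[2, 3, 5, 7, 11, 13, 17, 19, 23, 29]`.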

The actual approach taken in the Byte tests, which avoids the even numbers by default, was explained in detail in an earlier Byte article in September 1981.
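The odd-only idea can be sketched in Python as follows. This is modelled on the benchmark's C listing as it is commonly reproduced: an array of 8,191 flags where index `i` stands for the odd number `2*i + 3`, so the sieve covers 3 to 16,383. Treat the `SIZE` value and the structure as assumptions rather than a transcription of Byte's code. Note that the arithmetic is still just addition: `i + i + 3` doubles by adding, and stepping the index by `prime` advances the value by `2 * prime`, which skips the even multiples.

```python
SIZE = 8190  # assumed bound: flags[0..SIZE] represent the odd numbers 3..16383


def byte_style_sieve(size=SIZE):
    """Count odd primes from 3 to 2*size + 3 using an odd-only flag array."""
    flags = [True] * (size + 1)   # flags[i] represents the odd number 2*i + 3
    count = 0
    for i in range(size + 1):
        if flags[i]:
            prime = i + i + 3     # value represented by index i
            k = i + prime         # index of 3 * prime, the first odd multiple to clear
            while k <= size:
                flags[k] = False
                k += prime        # +prime in index space is +2*prime in value space
            count += 1
    return count


print(byte_style_sieve(), "primes")
```

With the default size this prints `1899 primes`. The count covers odd primes only, so 2 is not included.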

So the benchmark isn’t really testing the numerical capabilities of the machines (which would have been quite limited in most cases) beyond simple addition. The image below gives a visual representation of one version of the Sieve in action.

Incidentally, prime numbers aside, Eratosthenes (276 BC – c. 195 BC) was a versatile character, innovating in geography, music and astronomy as well as being a poet. He learned Stoicism from the founder of that school and was also chief librarian at the Library of Alexandria in Egypt.

The Entrants

The Microprocessors

Back to 1983. Most of the machines tested in the Byte survey were based on 8-bit microprocessors. The three most popular CPUs were:

Zilog Z80 : 64 appearances

MOS Technology 6502 : 23

Motorola 6809 : 19

I was a bit surprised just how popular the 6809 was here, as it was never used in one of the really popular home or personal computers. Equally, it’s a bit of a surprise how few 6502s appear on the list. Quite a few Apple IIs were tested, but nowhere near the number of Z80s running the CP/M operating system.

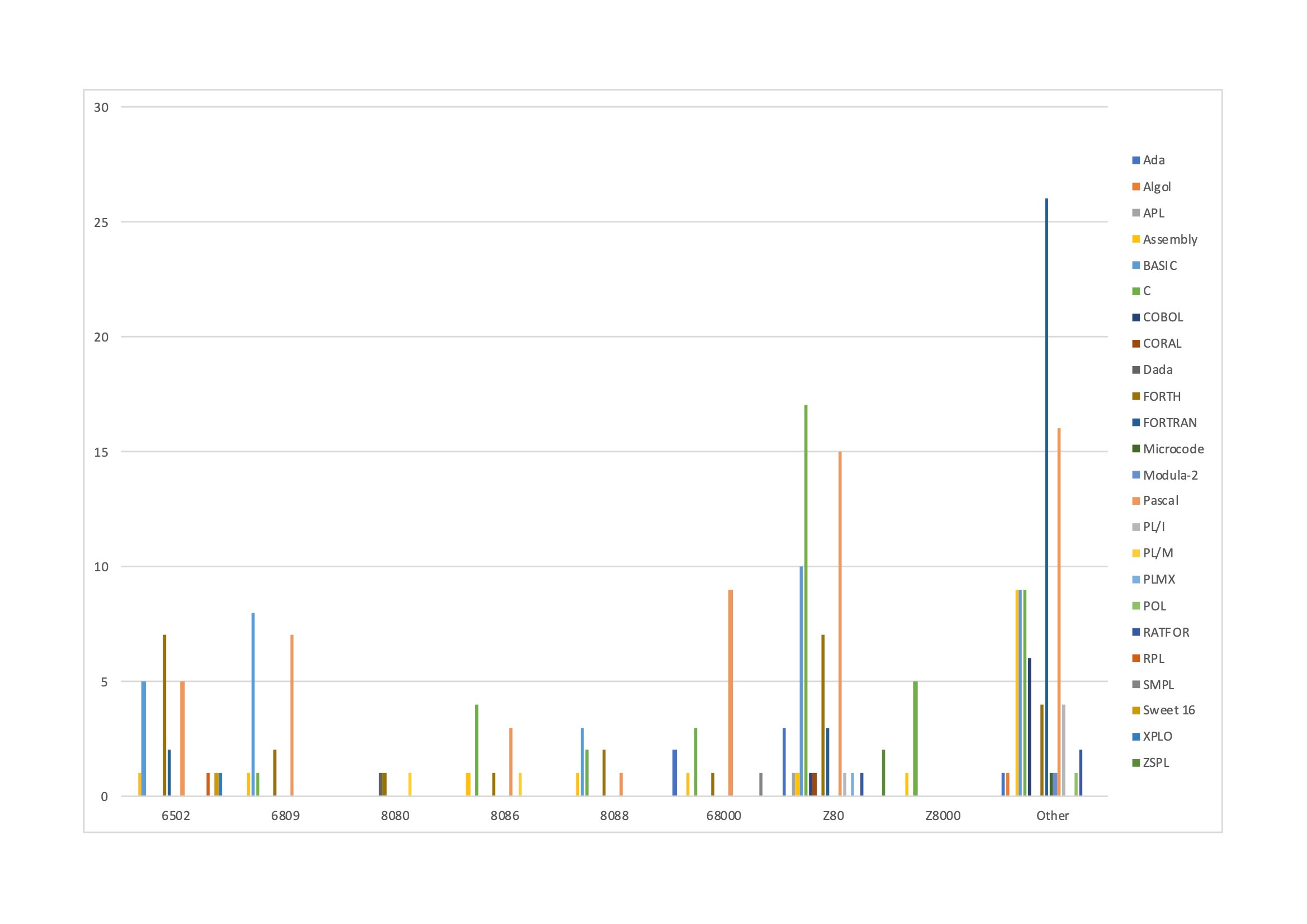

The Languages

The languages used provide a particularly interesting snapshot of language popularity in 1983. The top 5 in order are:

Pascal : 56

C : 41

BASIC : 35

Fortran : 31

Forth : 25

It’s perhaps surprising that BASIC isn’t the most popular entry as it came supplied with many of the systems on the list. Perhaps users were a bit embarrassed at how slow their systems were when running the supplied BASIC?

Pascal’s place at the top of the table reflects its popularity across the whole range of systems tested, from humble 6502s all the way through to many minicomputers (although it doesn’t appear on any of the mainframes).

The Fortran systems tested were almost all minis or mainframes, although the language was also available on Z80- and 6502-based systems.

Here’s a graphical representation of the breakdown of systems represented.

There are some delightfully obscure languages on this list: I’d never heard of XPLO or ZSPL.

Winners and Losers

The ratio between the slowest and fastest execution times was more than 700,000 to 1.

So who were the winners and losers? To ensure fair competition between radically different systems, I’ve divided these into a small number of categories.

First, the winners. The first category is mainframes and minicomputers, and the fastest is:

IBM 3033 programmed in assembly, with a time of 0.0078 seconds

The IBM 3033 was IBM’s most advanced mainframe of the era. Here it handily beat a Fortran version of the Sieve running on a Cray-1, which clocked in at 0.11 seconds. The Cray design was 7 years old by 1983 and was built with the objective of optimising vectorised numeric operations.

Then we have the 16-bit micros, where the winner is:

Motorola 68000 programmed in assembly, with a time of 0.49 seconds.

Finally, the 8-bit micros, where the winner is:

Motorola 6809 programmed in assembly, with a time of 5.1 seconds.

This seems to bear out the conventional wisdom that the Motorola 6809 and the Motorola 68000 were the fastest 8-bit and first generation 16-bit CPUs respectively. The sixteen-bit CPUs did seem to offer a material speed up over their 8-bit predecessors.

The gaps between the early 16-bit systems are interesting too. Assembly versions of the benchmark for the Intel 8086, Motorola 68000 and Zilog Z8000 came in at 1.9, 0.49 and 1.1 seconds respectively, which again confirms the conventional wisdom about the relative power of these CPUs. The 8086 was still a big step up from the most popular eight-bit systems, though: the fastest Z80 (assembly) result was 6.8 seconds.
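To put the assembly-language times side by side, the short Python sketch below divides the fastest Z80 time by each of the others to give a speed relative to the Z80. The times are the ones quoted above; the dictionary layout is just an illustrative choice.

```python
# Best assembly-language times (seconds) quoted in the text.
asm_times = {
    "Zilog Z80": 6.8,
    "Motorola 6809": 5.1,
    "Intel 8086": 1.9,
    "Zilog Z8000": 1.1,
    "Motorola 68000": 0.49,
}

baseline = asm_times["Zilog Z80"]
relative = {cpu: baseline / t for cpu, t in asm_times.items()}

# Print from slowest to fastest, as a multiple of the Z80's speed.
for cpu, speed in sorted(relative.items(), key=lambda item: item[1]):
    print(f"{cpu:15s} {speed:5.1f}x the Z80")
```

On these figures the 68000 is roughly 14 times as fast as the best Z80 result, and about 3.9 times as fast as the 8086 (1.9 / 0.49).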

And the loser:

Z80-based Xerox 820 running RMCOBOL, with a time of 5740 seconds.

Gosh, that seems slow. I guess that COBOL being slow shouldn’t be a surprise.
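As a quick check of the 700,000-to-1 figure quoted at the start of this section, we can divide the slowest time by the fastest:

```python
fastest_s = 0.0078   # IBM 3033, assembly
slowest_s = 5740.0   # Xerox 820 (Z80), RMCOBOL

ratio = slowest_s / fastest_s
print(f"{ratio:,.0f} to 1")
```

This comes out at about 736,000 to 1, so "more than 700,000 to 1" is a fair summary.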

Updating to 2023

How far have we come in performance over the last forty or so years?

The language landscape has changed enormously over this period. Today, we would reach for Python or JavaScript instead of BASIC or Pascal. Only C has survived as a popular mainstream language.

So I chose C and Python as representative 2023 languages. C is compiled and enables a direct comparison with 1983’s C compilers. For C we are using Clang version 14.0.0, and for Python version 3.9.6. When compiling the C version we use Clang’s default optimisation level, -O0, which is the lowest.

I tested these programs on a 2020 Intel Core i5 laptop. More specifically it’s an ‘Intel(R) Core(TM) i5-1038NG7 CPU 2.00GHz’ otherwise known as an ‘Ice Lake’ or Intel 10th generation Core i5, built using Intel’s 10nm process technology. It’s not quite a 2023 machine but close enough for our purposes.

The ten iterations used in 1983 are too few for reliable results, so we have to increase that to 10,000. Here are the run times, in seconds, for 10,000 iterations:

C : 0.44

Python : 30.81
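For anyone wanting to repeat the experiment, a minimal Python timing harness might look like the following. It re-implements an odd-only sieve in the style of the Byte benchmark; the function names `sieve_once` and `time_sieve`, and the use of `time.perf_counter`, are my own choices and not necessarily how the timings above were taken. Absolute times will vary with the exact code, the Python version and the machine.

```python
import time


def sieve_once(size=8190):
    """One pass of an odd-only sieve; flags[i] represents 2*i + 3."""
    flags = [True] * (size + 1)
    count = 0
    for i in range(size + 1):
        if flags[i]:
            prime = i + i + 3
            k = i + prime          # index of 3 * prime
            while k <= size:
                flags[k] = False
                k += prime         # next odd multiple
            count += 1
    return count


def time_sieve(iterations):
    """Run the sieve `iterations` times; return (prime count, elapsed seconds)."""
    start = time.perf_counter()
    count = 0
    for _ in range(iterations):
        count = sieve_once()
    elapsed = time.perf_counter() - start
    return count, elapsed
```

Calling `time_sieve(10_000)` corresponds to the 10,000-iteration run; in CPython, expect it to take seconds rather than fractions of a second.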

Compared with the 1983 result for the Intel 8086 running C (an 8 MHz 8086 running CP/M-86 and Digital Research C), which took 2.8 seconds for 10 iterations, we have a speed-up of roughly 6,400x, or around 24% per annum.
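The speed-up and the annual rate follow from normalising both results to a single iteration and then taking the 40th root of the ratio. Here is a small Python sketch of that arithmetic; the helper name `annual_rate` is illustrative.

```python
def annual_rate(total_factor, years):
    """Compound annual growth rate implied by a total improvement factor."""
    return total_factor ** (1 / years) - 1


# Seconds per iteration, from the figures quoted in the text.
t_8086 = 2.8 / 10        # 8 MHz 8086, CP/M-86, Digital Research C, 10 iterations
t_i5 = 0.44 / 10_000     # Core i5-1038NG7, Clang 14 at -O0, 10,000 iterations

speedup = t_8086 / t_i5
print(f"speed-up: {speedup:,.0f}x")
print(f"per year over 40 years: {annual_rate(speedup, 40):.1%}")
```

This gives a factor of about 6,364 and roughly 24.5% per year.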

My very rough estimate is that the 2020 Core i5 has low hundreds of millions of transistors per core (excluding cache): we’re going to assume 200 million transistors. The 8086 had 29,000 transistors. So that’s an increase of roughly 6,900x over 40 years, or a 25% increase per year. It’s a bit behind Moore’s Law but there are lots of transistors being used on the GPU and on other cores on the i5.
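The same compound-growth arithmetic applies to the transistor counts. The sketch below uses the rough assumption above of 200 million transistors per core and, for comparison, the common formulation of Moore’s Law as a doubling every two years (my choice of which version of the law to use).

```python
def annual_rate(total_factor, years):
    """Compound annual growth rate implied by a total improvement factor."""
    return total_factor ** (1 / years) - 1


transistors_8086 = 29_000
transistors_i5_core = 200_000_000   # rough assumption from the text, excluding cache

growth = transistors_i5_core / transistors_8086
moore_rate = 2 ** (1 / 2) - 1       # doubling every two years, expressed per year

print(f"growth: {growth:,.0f}x, {annual_rate(growth, 40):.1%} per year")
print(f"Moore's Law (2-year doubling): {moore_rate:.1%} per year")
```

That gives roughly 6,900x, or about 25% a year, against about 41% a year for a two-year doubling.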

Of course, the benchmark omits testing floating point and Single Instruction Multiple Data (SIMD) instructions, where much of the transistor budget has been spent (my Ice Lake Core i5 laptop even has AVX512, which would have taken quite a lot of the transistor budget!)

It’s worth noting that the code and data are likely to fit into the 2020 Core i5’s Level 1 cache, which probably works to the advantage of the modern machine.

Should We Be Impressed Or Not?

The gulf between where we are today and the world of 1983 seems quite impressive. This is particularly so as the benchmark doesn't really play to some of the 2020 Core i5’s strengths, especially floating point and SIMD.

Looking at the comparison through another lens, though, the i5 has a base clock speed of 2 GHz compared to 8 MHz for the 8086, and can reach 3.8 GHz in Turbo Mode. So that’s a speed-up of at least 250x from clock speed alone. One could argue (perhaps somewhat provocatively) that we have spent those hundreds of millions of extra transistors on getting an additional 25x speed-up (or around 8% per annum).
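Splitting the overall speed-up into a clock-speed part and an "everything else" part is a single division. The sketch below does it for both the 2 GHz base clock and the 3.8 GHz turbo clock. The text doesn't say which clock the benchmark actually ran at, so the two rows bracket the answer; the helper names are illustrative.

```python
def annual_rate(total_factor, years):
    """Compound annual growth rate implied by a total improvement factor."""
    return total_factor ** (1 / years) - 1


def split_speedup(speedup, old_clock_hz, new_clock_hz):
    """Return (clock-rate factor, remaining speed-up not explained by clock)."""
    clock_factor = new_clock_hz / old_clock_hz
    return clock_factor, speedup / clock_factor


speedup = (2.8 / 10) / (0.44 / 10_000)   # per-iteration speed-up, 8086 to Core i5

for label, clock_hz in [("base 2.0 GHz", 2.0e9), ("turbo 3.8 GHz", 3.8e9)]:
    clock_factor, other = split_speedup(speedup, 8e6, clock_hz)
    print(f"{label}: clock {clock_factor:.0f}x, remaining {other:.1f}x "
          f"({annual_rate(other, 40):.1%} per year)")
```

At the base clock this leaves about 25x (around 8% a year), as above; if the benchmark actually ran at the full turbo clock, the non-clock share falls to roughly 13x.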

As an aside, we can sense-check this non-clock-related speed-up. The 8086 might be expected to take several clock cycles per instruction (for example, a MOV from the accumulator to memory took 10 cycles and a register-to-register ADD took 3 cycles), whereas the i5 would be expected to retire a small number of instructions per clock cycle, so a 25x speed-up doesn’t seem unreasonable. Please let me know if you disagree with this check!

How far have we come?

I think the speed improvements are really impressive. On top of this, modern CPUs of course do much more than just speed up this sort of simple program. It’s interesting, though, how much of the speed-up can be attributed to clock speed increases, which we haven’t seen for a number of years now.

I’m not sure that our systems today feel that much faster than they did in 1983. I’ve just read a review of a system from 1987 which comments that ‘just about everything you do happens instantly.’ Even with all the extra performance we have in 2023, that certainly isn’t always true today.

It’s worth reminding ourselves too that even in 1983 it was still possible to get work done. People ran spreadsheets, edited documents in word processors and played games. The Lisa, with a full GUI and a WIMP (Windows, Icons, Menus, Pointer) interface, was already available. To paraphrase the well-known saying1: the past is not really a foreign country, but it's much slower.

Paying subscribers can download a PDF and an Excel workbook with the original results in full below.