The Arm Story Part 1 : From Acorns

How a new RISC architecture was developed at a small British company.

There will be two types of computer company in the future, those with silicon design capability and those that are dead.

Hermann Hauser

A Personal Prologue

One dark and cold evening in the mid-1980s a young student walked through the ancient streets of Cambridge in the U.K. to a Victorian lecture theatre. Once in the building, he was joined by a couple of dozen other students to listen to a talk about a new piece of computer hardware.

The man giving the talk worked for Acorn Computers, makers of the BBC Microcomputer. His presentation was startling. Acorn needed a replacement for the ageing 8-bit 6502 microprocessor used in the BBC Micro. They’d looked at new designs from US firms like Intel and Motorola, and they didn’t like them. So they’d designed their own.

That was surprising enough. It seemed bold for a company with no previous experience to design a microprocessor from scratch. What followed was even more remarkable. The chip Acorn had designed was 32-bit rather than 16-bit like the competitors. And not only was it faster, it also used a lot less power.

That student was me. The new microprocessor was the first Acorn RISC Machine, the first of a series of designs that we now know as Arm. There are many things about that evening where my memory has faded. I think the presenter was Steve Furber, but I can’t be certain. I don’t believe that there was a demonstration of the new system that evening. I do remember being surprised, impressed and somewhat sceptical about Acorn’s new microprocessor.

Fast-forward almost four decades, and we know how the story has played out. Arm processor designs are now used in hundreds of billions of devices around the world.

In this series of posts, I’m going to revisit the story of Arm, starting with its origins inside Acorn.

I was initially a little reluctant to write about the early years of Arm. There have been lots of excellent and extensive descriptions of the story (see the supplement to this post for lots and lots of links!), but in the end there were a few aspects of the story that I felt deserved more focus. I hope that, even if you’re familiar with the Arm story, you’ll find some new points of interest.

Throughout this series of posts, we’ll be looking to answer one simple question. Why did this architecture from a small, ultimately failed, British company come to be so important and to survive and prosper against much larger competition?

Rather than comment on this as the story progresses, I’ll look to summarise the conclusions in a post at the end of the series.

If you’ve enjoyed this then you might enjoy the supplement to this post which is available to paid subscribers and which has links to over eight hours of video and lots of other materials on the early days of the Acorn RISC Machine.

So let’s travel to the ancient university City of Cambridge in the UK in the late 1970s.

Cambridge Processor Unit

It all starts with Clive Sinclair: visionary, compulsive inventor of new gadgets, and variably successful businessman.

Sinclair started his career writing technical guides for electronics enthusiasts in the early 1960s. He soon started to market a variety of electronic products, moving through radios to calculators and then to digital watches.

In 1978 Sinclair, working with his longstanding employee Chris Curry, launched a computer kit, the MK14, based on the National Semiconductor SC/MP 8-bit microprocessor. When Sinclair was reluctant to develop the MK14 further, Curry teamed up with Hermann Hauser, a physics postgraduate at the University, who had also grown interested in the MK14.

Hauser had been born in Austria and had taken his first degree in Vienna before leaving to start his PhD at Cambridge. Hauser met Chris Curry, who shared his enthusiasm for microprocessors and convinced the Austrian to start a company with him to build products based on microprocessors.

The new company was originally called (somewhat prophetically) Cambridge Processor Unit Limited (or CPU Ltd). They also needed a trading name and wanted a name that would put them ahead of Apple in advertisements and in the telephone directory. Acorn seemed appropriate for a company that wanted to grow, so ‘Acorn Computers’ was born.

Curry and Hauser were soon joined by Andy Hopper from the University of Cambridge Computer Laboratory when they bought his company Orbis, which was then commercialising the Cambridge Ring networking system (an early proprietary Ethernet competitor). Hopper became a director of CPU / Acorn whilst continuing his work at the University.

They soon employed a couple of bright young students from the university, Steve Furber and Sophie Wilson. Furber, originally from Manchester in England, was working towards a PhD in aerodynamics. He joined the Cambridge University Microprocessor Group, a society for those who enjoyed building computers for fun, and built a machine using a Signetics 2650 microprocessor. Hauser had joined meetings of the society, and Furber soon found himself working part-time for Hauser and Curry.

Wilson was also from the North of England, having been brought up in Leeds in Yorkshire. Hauser wanted to build an ‘electronic pocketbook’, what would now be known as a ‘Personal Digital Assistant’, and through the Cambridge University Microprocessor Group he came to know Wilson as someone with expertise in low-power electronics. Wilson shared the other designs she had been working on, including one for a single-board microprocessor-based computer. Soon, Wilson was also working for Acorn whilst completing her degree at the University.

Together, they developed Wilson’s single board design into the Acorn System 1. This was followed by the Acorn System 2 and then the Acorn Atom, this time a complete system with a keyboard, all of which were based on the MOS Technology 6502 microprocessor.

Then in 1981, the BBC started looking for a microcomputer design to accompany a new television series. The BBC had already started a national debate about the impact of computing with its program ‘Now the chips are down’, first broadcast in 1978. Now, with the UK government’s support, the BBC’s new series would focus on computer literacy. Acorn bid, putting forward a design that the team had pulled together in a few days, and competed with several other firms including Sinclair Research, the company of Curry’s old boss Clive Sinclair. Acorn won. Sinclair was furious and soon launched his own much cheaper, though less sophisticated, rival, the ZX Spectrum.

The winner of the BBC competition, originally known as the ‘Proton’ within Acorn, became the ‘BBC Micro’, or affectionately the ‘Beeb’. Both the Acorn and Sinclair machines soon became very successful in the UK personal computer market, the BBC Micro leading in schools and the ZX Spectrum in homes. Acorn was so successful that by September 1983 the company was able to float its shares on the London Stock Exchange with a market capitalisation that would reach over £100m.

A Successor To The 6502

The BBC Micro launched at the end of 1981, but very soon afterwards Furber and Wilson realised that they would face a problem updating the design. The 6502 microprocessor it used had first been introduced in 1975, was showing its age and there was no obvious successor. By this time much more sophisticated 16-bit designs were emerging from firms such as Intel, Motorola and National Semiconductor.

The Acorn team looked at the performance of these new 16-bit microprocessors and were not impressed. The BBC Micro had the ability to add a ‘second processor’ through what was known as the ‘Tube’ interface. This enabled the team to quickly build boards to support different processors whilst still using the BBC Micro for input and output. The team compared the performance of the 6502, the Motorola 68000, the Intel 80186 and the National Semiconductor 32016 and were surprised at the poor performance of the new designs. To quote Steve Furber:

We had formed the firm view that the primary determinant of a computer's performance is the memory bandwidth you can access to the processor. Notionally the 32016 has a nice instruction set and the 6502 has a primitive one, but if you looked at the performance you got it just scaled with the bandwidth. The 16-bit microprocessors could not use the bandwidth that was available in the memory that people put in these machines.

So they concluded that these new microprocessors were being held back by wasting the memory bandwidth that was available from the commodity dynamic random access memory chips that they were using. They were also unhappy with how quickly the new processors could respond to interrupts, which was worse than the performance they had been able to get from the 6502.

Enter RISC

Andy Hopper had become aware of research being done in the US on a new approach to processor design, and in turn introduced Hauser to the ideas. One day, Hauser put an academic paper on the topic on Furber’s desk. The paper discussed a concept called the ‘Reduced Instruction Set Computer’ being developed at the University of California at Berkeley. Soon the Acorn team had read papers on the RISC-1 processor from Berkeley and on similar designs such as the IBM 801 and MIPS from Stanford.

They were intrigued by the approach set out in the paper, and particularly that the RISC-1 paper described a microprocessor being developed by a small team of postgraduate students. So Furber and Wilson started to joke that maybe they could build their own microprocessor. Over the summer of 1983 Wilson started sketching out a possible RISC instruction set.

They next set about finding out more about microprocessor design. They travelled to Israel to visit National Semiconductor to talk to the team developing the 32016. There they were unimpressed to find that the large team building this complex CPU was on revision H of the design and still fixing bugs (it would take until around revision K for those bugs to be fixed).

They also travelled to Arizona where Bill Mensch, one of the original designers of the 6502, had set up the Western Design Center and was designing his own extended variant of the 6502. Expecting to find another large office building populated with hundreds of engineers, they instead found a suburban bungalow, where Mensch was employing students using Apple II computers to help with the designs.

With the examples of architectures being designed either at Universities in the US or by small commercial teams, they started to think that maybe they really could build their own. So in October 1983, the Acorn team started work in earnest on a new microprocessor.

No Time and No Resources - Except a Silicon Design Team

Hauser would later joke that he gave the team two things that no-one else would give their teams: ‘No time and no resources’. This was not quite true, as he gave them a semiconductor design team!

Acorn were not complete newcomers to semiconductor design: the BBC Micro had included two chips designed by Acorn. UK semiconductor manufacturer Ferranti offered a product called an ‘Uncommitted Logic Array’ (or ULA) which contained numerous logic gates but allowed firms to customise by specifying the final metal layer. The BBC Micro used ULAs for video and serial processing, as well as to link to any second processor.

Hermann Hauser coined a saying, ‘there will be two types of computer company in the future, those with silicon design capability and those that are dead.’ So, encouraged by Hopper, Hauser recruited a small (about a dozen strong) but capable silicon design team.

Acorn then partnered with San Jose firm VLSI Technology Inc. VLSI (which can properly be called one of the Fairchildren, as three of the founders had formerly worked at Fairchild) made semiconductors under contract and also produced integrated circuit design tools. Customers could use these tools to create designs that VLSI would then build.

The work with Acorn was not the first time that VLSI Technology had worked with a computer manufacturer. In 1982 they had been approached by Steve Jobs to design and manufacture a custom integrated circuit for Jobs’s planned Macintosh computer. The VLSI Technology team quickly delivered a working prototype, known as the Integrated Burrell Machine after Apple engineer Burrell Smith, but its performance disappointed, and it wasn’t used in the Mac.

The Acorn design team used VLSI Technology’s software installed on expensive, Motorola 68000 powered, Apollo Workstations. [1] The only problem now was that the team had little to do.

So with a ‘free’ VLSI design team at hand, Wilson and Furber set out to build their microprocessor. The instruction set was designed by Wilson, who as a first step built an emulator for the design in Basic running on a BBC Micro with a 6502 second processor. Furber in turn took this initial Instruction Set Architecture design and developed a microarchitecture to implement it, with the two working together to refine the architecture as the project developed.

Furber and Wilson’s approach was pragmatic. They took the things they liked in other RISC designs and omitted things they didn’t like or couldn’t work out how to implement efficiently. The Arm design wasn’t a direct copy of the approach they had seen in the Berkeley, Stanford or IBM papers. Instead, they took the general RISC approach and adapted it, adding features that the Acorn team knew would be useful.

Throughout the process, the Acorn team weren’t convinced that the new design would be a success. On one hand, they thought that RISC was such an obviously good idea that a much larger firm would take it up and come to dominate the market with another RISC-based design. On the other, they expected that there must be some mysterious aspect of microprocessor design that they had missed and that would catch them out and cause the project to fail.

The ARM 1 Instruction Set

Wilson and Furber have talked about the tension between instruction set and microarchitecture design. There were things that Wilson would probably have liked to have included, but which didn't sit comfortably with the microarchitecture. During the development of the instruction set, Wilson, Furber and Hauser would head to the local pub at lunchtime to discuss and debate the latest issues with the design.

Three features of the design they developed stand out. First, they had leapfrogged many of the competing processors of the era with a fully 32-bit design. Second, they focused on making the most of the memory bandwidth that a (non-multiplexed) 32-bit data bus would make available. Finally, the addressing range of the new processor was large: it was based on 26-bit addresses and so allowed up to 64 megabytes of memory, far more than would be typical for computer designs of the time.

Other key features of the design they came up with included:

Sixteen 32-bit user addressable registers (R0-R15 with R15 a neatly combined program counter and flags register).

An additional eight 32-bit registers accessible in supervisor mode and which helped support fast response to interrupts.

A simple three stage instruction pipeline.

The instruction set was kept simple, in line with the RISC concept. There were only 45 instructions with five distinct addressing modes.

A ‘load-store’ architecture, with data processing operations only performed on registers and not on memory locations.
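To make the combined program counter and flags register a little more concrete, here is a minimal Python sketch. It assumes the commonly documented layout for the 26-bit ARM architecture (flags in the top bits, a word-aligned program counter in bits 2 to 25, the processor mode in the bottom two bits), which is not spelled out in this post, and the helper name `unpack_r15` is invented purely for illustration.

```python
# Illustrative only: split a 32-bit R15 value into flags, program counter and mode.
# Assumed layout (commonly documented for 26-bit ARM, not taken from this post):
#   bits 31-28: N, Z, C, V flags      bits 27-26: IRQ / FIQ disable
#   bits 25-2 : word-aligned PC       bits 1-0  : processor mode

ADDRESS_BITS = 26
ADDRESS_SPACE_BYTES = 1 << ADDRESS_BITS  # 2**26 bytes, i.e. 64 megabytes


def unpack_r15(r15):
    """Hypothetical helper: decode a combined PC/flags word into its fields."""
    return {
        "N": (r15 >> 31) & 1,
        "Z": (r15 >> 30) & 1,
        "C": (r15 >> 29) & 1,
        "V": (r15 >> 28) & 1,
        "irq_disabled": (r15 >> 27) & 1,
        "fiq_disabled": (r15 >> 26) & 1,
        "pc": r15 & 0x03FFFFFC,  # byte address of a word-aligned instruction
        "mode": r15 & 0b11,
    }


state = unpack_r15(0x80008003)
print(ADDRESS_SPACE_BYTES // (1024 * 1024), "megabytes addressable")
print(hex(state["pc"]), state["N"], state["mode"])
```

Because the flags and mode bits share the register with the program counter, only 24 bits of word address (26 bits of byte address) remain, which is where the 64 megabyte limit comes from.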

The new design needed a name. Given its use of the RISC concept, ‘Acorn RISC Machine’ was the obvious choice, which of course naturally abbreviated to ARM.

The new ARM processor ran at 6 MHz, slower than competitors such as the 80286 or the 68000, but the higher memory bandwidth and pipelined execution more than made up for the slower clock speed. The team extracted even more bandwidth by using the ‘page mode’ cycles that cheap dynamic RAMs now offered, which sped up successive memory accesses within a single page.

Wilson added some novel features to the ISA, though. Most instructions had the option of conditional execution, meaning that they would be either executed or skipped depending on the state of particular flags.
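As a rough illustration of conditional execution, the Python sketch below models the idea: every instruction names a condition, and it only runs if the flags satisfy that condition. The condition mnemonics are the standard ARM assembler ones; the names `CONDITIONS`, `condition_passes` and `absolute_value` are made up for this example, and the two-instruction sequence it models is a textbook idiom rather than anything taken from Acorn’s own code.

```python
# Sketch of ARM-style conditional execution. The processor checks the named
# condition against the N (negative), Z (zero), C (carry) and V (overflow)
# flags and either executes the instruction or skips it.

CONDITIONS = {
    "EQ": lambda n, z, c, v: z,                  # equal (Z set)
    "NE": lambda n, z, c, v: not z,              # not equal (Z clear)
    "CS": lambda n, z, c, v: c,                  # carry set
    "CC": lambda n, z, c, v: not c,              # carry clear
    "MI": lambda n, z, c, v: n,                  # minus (N set)
    "PL": lambda n, z, c, v: not n,              # plus (N clear)
    "VS": lambda n, z, c, v: v,                  # overflow set
    "VC": lambda n, z, c, v: not v,              # overflow clear
    "HI": lambda n, z, c, v: c and not z,        # unsigned higher
    "LS": lambda n, z, c, v: (not c) or z,       # unsigned lower or same
    "GE": lambda n, z, c, v: n == v,             # signed greater or equal
    "LT": lambda n, z, c, v: n != v,             # signed less than
    "GT": lambda n, z, c, v: (not z) and n == v, # signed greater than
    "LE": lambda n, z, c, v: z or n != v,        # signed less or equal
    "AL": lambda n, z, c, v: True,               # always
}


def condition_passes(cond, n, z, c, v):
    """Return True if an instruction tagged with `cond` would execute."""
    return bool(CONDITIONS[cond](n, z, c, v))


def absolute_value(r0):
    """Model of:  CMP R0, #0  followed by  RSBMI R0, R0, #0  (no branch needed)."""
    r0 &= 0xFFFFFFFF
    n = bool(r0 & 0x80000000)  # CMP R0, #0 copies the sign bit into N
    z = r0 == 0
    c, v = True, False         # subtracting zero never borrows or overflows
    if condition_passes("MI", n, z, c, v):
        r0 = (0 - r0) & 0xFFFFFFFF  # RSBMI only executes when R0 was negative
    return r0


print(absolute_value(0xFFFFFFFB), absolute_value(7))
```

The point of the idiom is that short decisions like this need no branch instruction at all, so the pipeline is never disturbed.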

The architecture also included a ‘barrel shifter’. By now, Wilson and Furber had seen the Apple Lisa (launched in January 1983) and the Macintosh (launched January 1984) and knew that a graphical user interface (GUI) would likely be a key requirement for leading edge machines in the future. The barrel shifter would enable the new Acorn design to more easily handle the manipulations that would be needed for GUI software. The importance that Wilson and Furber placed on the barrel shifter can be deduced from the fact that the circuits to implement this took up around 10% of the silicon die.
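For readers who haven’t met a barrel shifter before, here is a small Python sketch of the four standard ARM shift operations on 32-bit values, plus one example of a shift folded into an add. The function names are invented for illustration, the shift amounts are assumed to be in the range 0 to 31, and the ‘multiply by five’ example is a generic idiom rather than something drawn from Acorn’s software.

```python
# Sketch of the shift operations a barrel shifter applies to an operand
# before it reaches the ALU. Values are treated as 32-bit words and shift
# amounts are assumed to be between 0 and 31.

MASK32 = 0xFFFFFFFF


def lsl(value, amount):
    """Logical shift left: zeros enter from the right."""
    return (value << amount) & MASK32


def lsr(value, amount):
    """Logical shift right: zeros enter from the left."""
    return (value & MASK32) >> amount


def asr(value, amount):
    """Arithmetic shift right: the sign bit is copied in from the left."""
    value &= MASK32
    if value & 0x80000000:
        value -= 1 << 32  # reinterpret as a negative number
    return (value >> amount) & MASK32


def ror(value, amount):
    """Rotate right: bits leaving the right re-enter on the left."""
    amount %= 32
    value &= MASK32
    return ((value >> amount) | (value << (32 - amount))) & MASK32


def add_shifted(rn, rm, amount):
    """Model of  ADD Rd, Rn, Rm, LSL #amount : shift and add in one instruction."""
    return (rn + lsl(rm, amount)) & MASK32


# With no multiply instruction, R1 * 5 can be formed as R1 + (R1 << 2).
print(add_shifted(7, 7, 2))
```

The same shift-then-operate pattern is what makes bit-level work such as aligning pixel data for a GUI cheap.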

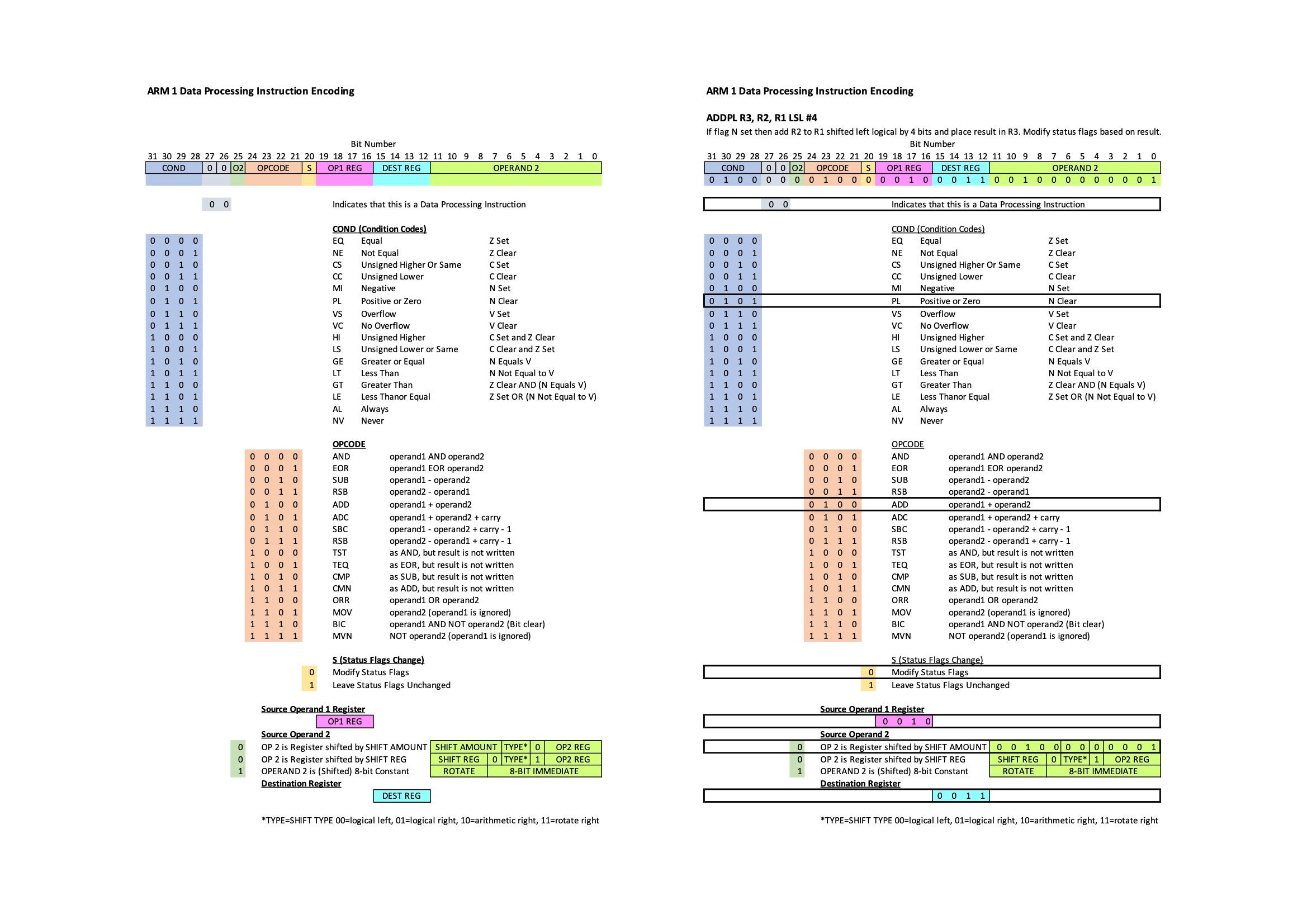

The diagram below demonstrates how all the (register to register) ‘data processing’ instructions are encoded. The table on the left shows how instructions are encoded into a 32-bit instruction, and on the right is an example instruction.

There is quite a lot packed into this single instruction. It only executes if the ‘Negative’ flag is clear, and it performs a 4-bit logical shift on one of the operands. Even with all this, the ARM 1 can execute one of these instructions every clock cycle.
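The packing of fields into one 32-bit word can be sketched in a few lines of Python. The field positions below follow the standard ARM data-processing encoding for a register operand shifted by a constant. The specific instruction, `ADDPL R0, R1, R2, LSL #4`, is an assumption chosen to match the description (executes only when the Negative flag is clear, shifts one operand left by four bits), since the exact instruction in the diagram isn’t reproduced in the text. The function name is hypothetical.

```python
# Sketch: encode a register-to-register data-processing instruction.
# Field positions (standard ARM encoding, register operand with constant shift):
#   31-28 condition | 27-25 zero | 24-21 opcode | 20 set-flags | 19-16 Rn
#   15-12 Rd | 11-7 shift amount | 6-5 shift type | 4 zero | 3-0 Rm

COND = {"EQ": 0, "NE": 1, "CS": 2, "CC": 3, "MI": 4, "PL": 5, "VS": 6, "VC": 7,
        "HI": 8, "LS": 9, "GE": 10, "LT": 11, "GT": 12, "LE": 13, "AL": 14}
OPCODE = {"AND": 0, "EOR": 1, "SUB": 2, "RSB": 3, "ADD": 4, "ADC": 5, "SBC": 6,
          "RSC": 7, "TST": 8, "TEQ": 9, "CMP": 10, "CMN": 11, "ORR": 12,
          "MOV": 13, "BIC": 14, "MVN": 15}
SHIFT = {"LSL": 0, "LSR": 1, "ASR": 2, "ROR": 3}


def encode_data_processing(op, cond, rd, rn, rm,
                           shift="LSL", amount=0, set_flags=False):
    """Hypothetical helper: pack the fields into a single 32-bit word."""
    return ((COND[cond] << 28)
            | (OPCODE[op] << 21)
            | (int(set_flags) << 20)
            | (rn << 16)
            | (rd << 12)
            | (amount << 7)
            | (SHIFT[shift] << 5)
            | rm)


# Assumed example: ADDPL R0, R1, R2, LSL #4
word = encode_data_processing("ADD", "PL", rd=0, rn=1, rm=2, shift="LSL", amount=4)
print(f"{word:08X}")
```

Every data-processing instruction uses the same fields in the same positions, which is a large part of why the encoding is easy to hold in your head.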

The instruction set was highly ‘regular’ even when compared to 8-bit designs such as the 6502. So much so that I think it would be straightforward, not only to remember the full instruction set, but even the encoding of all the instructions, something that would be much harder for say an Intel 80286. It’s notable that the ARM 1 instruction set was designed by someone who had extensive assembly language programming experience and wasn’t explicitly designed to be the target for a compiler, as was the case, for example, for the IBM 801.

The design had no floating-point operations, or even integer multiply or divide, and had no memory management hardware, the intention being to provide these capabilities in additional support chips. There was no cache, as memory was still fast enough to keep the processor running at its full clock speed.

The ARM design generally kept to the broad RISC principles articulated in the Berkeley and other papers, but some instructions were decidedly un-RISC like. Although the design followed a ‘Load-Store’ architecture where it wasn’t possible to combine memory access with data manipulation operations, it did have individual instructions that specified multiple register loads or stores.

Overall, though, the design remained very simple. In most respects it was the simplest of the RISC chips designed to date. The Berkeley RISC-1, the first processor design to emerge from the Berkeley RISC project, used 44,500 transistors and had 78 32-bit registers with six 14-register ‘windows’. The MIPS R2000, based on work at Stanford and introduced in 1986, used 110,000 transistors. By contrast, the first ARM microprocessor used fewer than 25,000 transistors.

First Silicon Powers Up

Furber travelled to VLSI in Munich in Germany to perform some final tests and then ‘tape out’ the completed design in January 1985. The first chips arrived from VLSI on 26 April 1985, roughly 18 months after work had started on the design.

Hauser was on hand with two bottles of champagne to celebrate. The team plugged the chip into the circuit board they had designed for it, which was connected to a BBC Micro via a ‘Tube’ interface. No response. Hauser left the rest of the team to figure things out. He was summoned back two hours later. By now the system was working, and a simple BASIC program had written a ‘Hello World’ message on the screen.

So the new CPU, the circuit board and Sophie Wilson’s new version of BBC BASIC – written manually in assembly language – for the new architecture had all worked first time. Hauser’s champagne was duly opened.

But there was a curious thing. The team had wanted to build a design that was low power as they wanted to keep the cost down by using plastic packaging, which cost a few cents, rather than ceramic, which would cost a few dollars. The team were aiming for power consumption of less than a watt, even though they intended it to be used in a desktop machine. Lacking tools to make a precise estimate of power consumption, they erred on the side of caution.

A few days after the chips arrived from VLSI, Furber decided he should test the power consumption. Connecting his ammeter to the CPU, he was surprised to see a zero reading even though the CPU was working. After some investigation, he discovered that the CPU power supply pins were disconnected but that it was being powered by current leaking through the other pins. It turned out that the new microprocessor used a tenth of a watt, around ten times better than the target they had been aiming for.

The lower power consumption was almost completely due to the simplicity of the design. As Steve Furber later said, ‘There was no magic with the low power characteristics apart from simplicity’.

The lower power consumption didn’t come at the expense of performance though. Quoting WikiChip:

Originally intended to perform at roughly 1.5 times the performance of the VAX 11/780, the prototypes ended up achieving between 2x to 4x the performance of the DEC VAX 11/780; this is roughly equivalent to 10 times that of that original IBM 80286-based PC AT or that of the Motorola 68020 operating at 16.67 MHz.

The Acorn processor was slower than some alternative RISC designs of the time, such as the MIPS R2000, but it was much less expensive to make.

The contrast with the 80386, launched by Intel in 1985, is remarkable. The 80386 used 275,000 transistors, but the new Acorn processor outperformed it whilst using less than one tenth of the transistors and a conservative 3 µm process, compared with the 1.5 µm process of the Intel 80386.

Wilson has said that the project was inspired by the slogan ‘MIPS for the masses’. They had designed a powerful processor that could be made at low cost. The team and the RISC concept had been vindicated.

Acorn in Trouble

Meanwhile, Acorn’s business was starting to fall apart.

In 1982 the ongoing rivalry with Chris Curry’s old boss, Clive Sinclair, led Acorn to design a machine that would take on the Sinclair Spectrum in the booming home computer market. The Spectrum had used a Ferranti ULA to keep costs down dramatically, so Acorn followed a similar route. At the start, Furber and the other Acorn engineers weren’t keen:

We were not that enthusiastic about doing this cost-reduction exercise. But ultimately we were persuaded by Chris and by Hermann that there was a market.

The result was the Acorn Electron (also known as the ‘Elk’), a 6502-based, largely BBC Micro-compatible machine that again used a Ferranti ULA (this time with 2400 logic gates, far more than had been used earlier) to shrink the number of integrated circuits used in the BBC machine from over 100 to around a dozen.

The existence of the Electron was soon well known, as Curry and Hauser were talking about it in the press. But the machine was repeatedly delayed, as Furber and his team grappled with problems with the ULA.

When the Electron did launch late in 1983, it gained generally positive reviews, except for one drawback: it was very slow when compared to the BBC Micro and that was in part due to the machine’s poor memory bandwidth.

“A major quirk of the Electron is the way RAM is organised. In the interests of economy, four 64Kb RAM chips have been used, but as these can only be read four bits at a time, memory access time is virtually doubled so that the Electron is dramatically slower than the BBC,” Keith and Steven Brain in Popular Computing Weekly.

Ramping up production of the Electron was problematic too. Ferranti was struggling to produce enough working ULAs and in 1984 Acorn commissioned a CMOS version of the circuit from VLSI Technology.

By 1984, though, the home computer market in the UK had shrunk dramatically. Acorn was left with £43m of unsellable stock. Acorn had also tried to break into the computer market in the US, an effort that ended in complete failure. It made over £5m profits in the last six months of 1983, but that turned into a loss of almost £11m in the second half of 1984.

In February 1985 the company had to seek financial help, and it arrived in the shape of Italian firm Olivetti, who invested over £10m in return for just under half of the company. Hauser and Curry kept large shareholdings, but lost control of the company.

Acorn RISC Machine Revealed

The development of the new microprocessor had been a well-guarded secret. Most surprisingly, it had been kept secret even from Olivetti during their negotiations to buy a stake in Acorn. Now that it was working, though, word about Acorn’s new processor gradually started to make its way out into the world.

When Furber called a journalist in July 1985 to tell them about the new microprocessor, he was met with disbelief. “I don’t believe you. If you’d have been doing this I’d have known,” came the response, before the journalist hung up.

The November 1985 edition of the UK’s most popular computer magazine, Personal Computer World, led on its cover with the new processor, with the headline ‘Soul of A New Machine. Secrets of Acorn’s new processor unveiled.’ On the inside, the magazine started with the words ‘RISCy business. The Reduced Instruction Set Processor (RISC) era has begun …’

Across the Atlantic, Byte magazine followed up in January 1986 with a report tucked away on page 387. A detailed description of the new microprocessor ended with:

‘It represents a striking vindication of the RISC philosophy in terms of performance, time it took to develop and its ease and low manufacturing cost.’

The first batch of ARM microprocessors were used in BBC Micro second processor evaluation systems. A cream ‘cheese wedge’ shaped box, which attached to the BBC Micro and was labelled ‘Acorn. The ARM Evaluation System’, contained an ARM microprocessor and 1 megabyte of dynamic random access memory. It also came with an impressive range of programming languages for such a new machine: Sophie Wilson’s BBC Basic, C, Fortran, LISP, an Assembler and even PROLOG.

This was the first time anyone could buy a RISC processor. The ARM 1 was the first commercial RISC processor.

The ARM evaluation system was now the fastest machine that the Acorn team had available, and so became one of the tools that the team used to design both the chips that would accompany the ARM microprocessor and its successors.

The question now was what Olivetti, now in control of Acorn, would do with the technology they had unknowingly acquired.

We’ll find out what happened next in part two of this series.

If you’ve enjoyed this then you might enjoy the supplement to this post which is available to paid subscribers of “The Chip Letter” and which has links to over eight hours of video and lots of other materials on the early days of the Acorn RISC Machine. If you’d like to know more about the inner workings of the ARM 1 CPU then there are links to lots of material that explain how it all works.

Just to whet your appetite, here is Steve Furber talking with immense modesty and humour about his role in the development of Arm:

I mean I am staggered by the success of arm. I mean the success of arm very much underpins my reputation, which I sometimes feel a bit guilty about because although I certainly played a role in sowing the seeds you know it's taken thousands of people a lot of effort since then. Some of the time I think the three point three thousand people working for arm are just working to enhance my reputation.

Thanks for subscribing to The Chip Letter.

Photo Credit

Acorn System 1

By Flibble - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=17401028

Arm Evaluation Board - "Peter Howkins"

CC BY-SA 3.0

https://upload.wikimedia.org/wikipedia/commons/0/0a/Acorn-ARM-Evaluation-System.jpg

All other images ‘fair use’

[1] Apparently installed by Paul McClellan, who is still blogging today on Cadence’s Breakfast Bytes.
