Why Are There No Minicomputers Any More?

What lessons can we learn from the demise of the 'mini' form factor, architectures and companies?

There is one class of machine that once had a vital role in the evolution of computing. Companies grew large and profitable building them. But now they’ve vanished, notable only for their absence, and for the legacy of the software that was originally developed on them.[1]

It’s the ‘minicomputer’: a class of general purpose computer that was popular from the mid 1960s to the mid 1980s, in a sector where, according to Wikipedia, “almost 100 companies formed and only a half dozen remained.”

Asking why there are no more minicomputers is really asking three distinct questions. Why did the mini ‘form factor’ vanish? Why did minicomputer architectures disappear? And, finally, why did the minicomputer makers all disappear too? We’ll answer each of these questions in turn.

Mini Form Factor : Babbage’s ‘Law Of Computer Size’

Let’s start by looking at a minicomputer from the 1980s.

This is an IBM System/36.[2] It’s a smallish ‘minicomputer’, but by modern standards, it’s not very ‘mini’ at all! In fact it’s roughly the size of a large domestic freezer.

This ‘mini’ form factor - machines typically this size or somewhat bigger - has disappeared. I’d argue that this is a consequence of my own ‘Law of Computer Size’:

Babbage’s Law of Computer Size

Your computer wants to either be small, or very large indeed.

Form factors have changed a lot over the years, but there is a clear trend in size for the most popular computers: from mainframes that need a large room, to large refrigerator-sized minis, to desktop personal computers, to briefcase-sized laptops, to handheld smartphones.

This has happened, of course, because first the transistor, and then the combination of the integrated circuit and Moore’s Law, made successively smaller machines possible. Because of their portability, smaller machines are usually more useful. I can play games, browse the web or hail an Uber with my smartphone. I can only do two of these things with a desktop PC.

But machines also got bigger. That is, if we count the ‘datacenter’ as a machine.

Bringing lots of compute together in one place delivers economies of scale. The most economical way of creating that compute has been in the form of thousands of servers with CPUs derived from those in commodity desktop machines.

The advent of the internet meant that if you really wanted more FLOPS (or simple OPS) than a desktop PC could deliver, then you could easily get access to them through an internet connection.

And the advent of ‘the public cloud’ has then added the flexibility to scale those compute resources up or down as required.

So, there really was no reason any more why anyone would want a ‘fridge’ or larger sized computer in one corner of their office. Equivalent computing power could be delivered much more cheaply. And, even better, you no longer had to give up expensive office space to have it.[3]

And ‘minicomputer size’ occupies a particularly awkward spot in the size range. It’s the smallest machine that is too big to be moved without professional help. The smallest machine that you can’t put in the back of your car.

Mainframes have survived, but that’s largely because the software that they run is so vital to the organisations that use them that there has been no viable alternative.

Minis ran software that could be replaced, or that could be transferred to another architecture running either on a desktop machine or in a datacenter.

Mini Architectures : Low Volume CISC

If this answers why minicomputer sized machines have disappeared, it doesn’t really tell us why minicomputer architectures have vanished.

We can argue that it wasn’t entirely because of limitations of the architectures themselves.

Intel’s x86 has its origins in the 8008 microprocessor which, in turn, was based on the Datapoint 2200 terminal. Over five decades though, it has evolved hugely, and today delivers performance and capabilities that generally match or exceed its competitors.

If the 8008’s architecture could evolve in this way, then I’d argue that minicomputer architectures should have been capable of making the same journey. But none did.

Of course, some were not well placed to evolve. The 18-bit word size of the PDP-7 from Digital Equipment Corporation (DEC) wasn’t an ideal starting point.

But what about the 16-bit PDP-11? The PDP-11 did evolve into the 32-bit VAX-11, which was a very successful ‘superminicomputer’. So why was the VAX an evolutionary dead end? There is one technical reason and one commercial reason.

The technical reason? The VAX had an architecture that was very CISCy indeed.

Here is an example VAX instruction in an excerpt from Patterson and Hennessy’s Computer Organization and Design:

When it became clear that RISC architectures were a good idea, then the days of VAX as an architecture were numbered. Indeed, DEC would put considerable effort into developing RISC architectures rather than putting that development effort into VAX.
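To give a rough feel for what ‘CISCy’ means in practice, here is a minimal Python sketch (it is not taken from the book excerpt). It models a toy machine, with invented register and memory contents, and compares one VAX-style three-operand add, whose operands can each live in memory, against the load/store sequence a RISC machine would need for the same work. `ADDL3` is a real VAX mnemonic, but the simulator, the helper names and the RISC mnemonics in the comments are purely illustrative.

```python
# Toy model: one CISC-style memory-to-memory add versus a RISC load/store sequence.
# Register and memory contents are invented for illustration.

def make_state():
    """Return a fresh (registers, memory) pair for the toy machine."""
    regs = {"R1": 5, "R2": 1000, "R3": 2000, "T1": 0, "T2": 0}
    mem = {1737: 37, 2000: 0}
    return regs, mem

def cisc_addl3(regs, mem):
    """Model of `ADDL3 R1, 737(R2), (R3)`: mem[R3] = R1 + mem[R2 + 737].

    One instruction, but the hardware must decode three operand specifiers,
    compute an address, read memory, add, and write memory.
    """
    mem[regs["R3"]] = regs["R1"] + mem[regs["R2"] + 737]
    return 1  # instructions issued

def risc_sequence(regs, mem):
    """The same work on a load/store machine: only loads and stores touch memory."""
    regs["T1"] = mem[regs["R2"] + 737]    # LOAD  T1, 737(R2)
    regs["T2"] = regs["R1"] + regs["T1"]  # ADD   T2, R1, T1
    mem[regs["R3"]] = regs["T2"]          # STORE T2, 0(R3)
    return 3  # instructions issued

cisc_regs, cisc_mem = make_state()
risc_regs, risc_mem = make_state()
cisc_count = cisc_addl3(cisc_regs, cisc_mem)
risc_count = risc_sequence(risc_regs, risc_mem)

print("result (CISC, RISC):", cisc_mem[2000], risc_mem[2000])
print("instructions (CISC, RISC):", cisc_count, risc_count)
```

The RISC version issues more instructions, but each one is simple and uniform, which makes it much easier to pipeline. The single CISC instruction hides a multi-step sequence inside the hardware.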

You may protest that x86 is a CISC architecture. It is, but it is also one of the least ‘CISCy’ of the CISCs and so has been amenable to lots of measures that allow it to get around some of the flaws of the original designs. It also had the great commercial advantage of having the scale to support huge investment, both in the development of the architecture and manufacturing processes to build it.

John Mashey has given a great account of these issues in A Historical Look at the VAX : Microprocessor Economics. This excerpt gives some indication of the disparity in volumes between VAX and x86:

… x86s tend to have a very distinct advantage against other MPU families. Anne & Lynn Wheeler recently pointed out in comp.arch that VAX unit volumes have traditionally been quite low. The MicroVAX II, a very successful product, shipped 65,000 units over 1985-1987; by comparison over 1985-1987, there was roughly 14,000,000 IBM PCs and clone systems sold. Intel’s large x86 volumes mean that they can spend more time and effort optimizing their CPUs, and probably still have lower average costs.

And this is the commercial reason why minicomputer architectures, in general, failed. They never had sufficient volume to support the levels of investment that would enable them to compete with PC derived architectures.
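The arithmetic behind this point can be sketched in a few lines of Python. The unit volumes are the ones quoted from Mashey's post above; the development budget is a made-up figure, assumed to be the same for both families, chosen only to show how a fixed cost is amortised over very different volumes.

```python
# Figures quoted above from John Mashey's post (units sold, 1985-1987).
MICROVAX_II_UNITS = 65_000
PC_AND_CLONE_UNITS = 14_000_000

# HYPOTHETICAL: an identical fixed CPU development budget for both families.
# This number is invented purely to show the amortisation effect.
ASSUMED_RND_BUDGET_USD = 100_000_000

def rnd_cost_per_unit(budget_usd: float, units: int) -> float:
    """Fixed development cost spread evenly across every unit shipped."""
    return budget_usd / units

volume_ratio = PC_AND_CLONE_UNITS / MICROVAX_II_UNITS
vax_per_unit = rnd_cost_per_unit(ASSUMED_RND_BUDGET_USD, MICROVAX_II_UNITS)
pc_per_unit = rnd_cost_per_unit(ASSUMED_RND_BUDGET_USD, PC_AND_CLONE_UNITS)

print(f"PC volume is about {volume_ratio:.0f}x MicroVAX II volume")
print(f"R&D per MicroVAX II: ${vax_per_unit:,.2f}")
print(f"R&D per PC:          ${pc_per_unit:,.2f}")
```

Whatever the real budgets were, the same ratio of roughly 215 to 1 applies: for equal spending, the low-volume architecture carries about 215 times more development cost on every machine sold.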

Minicomputer Makers : Pivoting Is Difficult

There is one final question that we ought to answer. Why did none of the firms focused on making minicomputers - DEC, Data General, Prime, Wang and others - survive?

These firms did see the writing on the wall and tried to break away from reliance on minis.

DEC, at its peak, was a highly successful and profitable company. It hit $11bn in sales in 1988 and had operating income of over $1.6bn. Adjusted for inflation, DEC’s sales in 1988 were bigger than Nvidia’s in 2022.

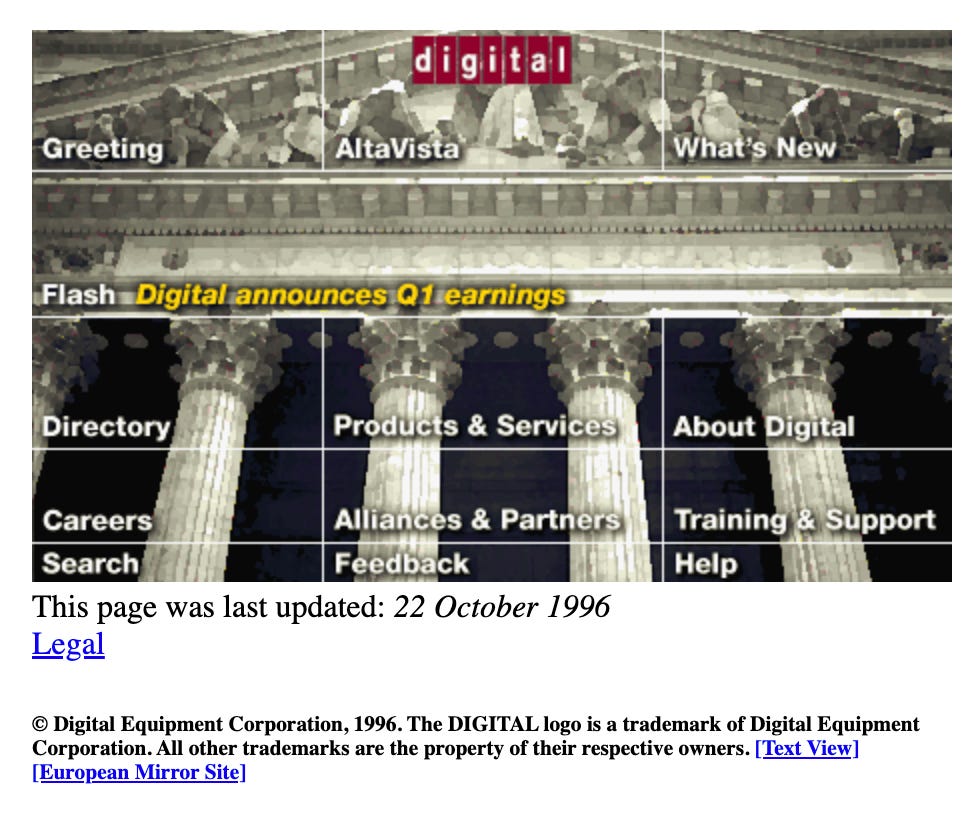
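The inflation adjustment for DEC's 1988 sales can be reproduced with a short Python sketch. The $11bn figure comes from the text; the two price index values are approximate US CPI-U annual averages that I am assuming here, so they should be checked against Bureau of Labor Statistics data before being relied on.

```python
# Nominal figure from the text.
DEC_SALES_1988_USD_BN = 11.0

# ASSUMPTION: approximate US CPI-U annual averages (1982-84 = 100).
# Verify against Bureau of Labor Statistics data before relying on them.
CPI_1988 = 118.3
CPI_2022 = 292.7

def adjust_for_inflation(amount: float, cpi_then: float, cpi_now: float) -> float:
    """Scale a historical amount by the ratio of the two price index levels."""
    return amount * cpi_now / cpi_then

dec_sales_2022_dollars = adjust_for_inflation(DEC_SALES_1988_USD_BN, CPI_1988, CPI_2022)
print(f"DEC's 1988 sales in 2022 dollars: about ${dec_sales_2022_dollars:.1f}bn")
```

The result can then be set against Nvidia's reported revenue for whichever 2022 reporting period is meant.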

What’s more, DEC remained a highly innovative company throughout its lifetime. We’ve seen that DEC developed its own, highly regarded, RISC architecture, DEC Alpha. It was also one of the first companies on the internet, being the fifth to register a .com domain (dec.com) in 1985.

But DEC was to disappear when it was acquired by Compaq in 1998. Compaq, in turn, would vanish into HP in 2002.

The missteps and subsequent demise of DEC deserve their own, quite long, post.

I think, though, that there is a simple explanation for why DEC lost its way: DEC was a minicomputer company. It was partly economics as we have seen above, but also other factors. From the John Mashey post again (my emphasis):

Mainframes and minicomputer systems companies thrived when the design style was to integrate a large number of lower-density components, with serious value-added in the design of CPUs from such components. For an example of this, look at a VAX 11/780 set of boards. As microprocessors came in, and became competitive, systems companies struggled to adapt to the change in economics, and to maintain upward compatibility. Most systems companies had real problems with this. There were pervasive internal wars, especially in multi-division companies.

It’s really hard to pivot an entire business, to focus on new products built with different technology and selling into different markets with dramatically different structures (sales channels, cost structure etc). Arguably, Louis Gerstner achieved it to a degree with IBM, but that’s a rare case.

DEC didn’t manage it. And if a company as innovative as DEC couldn’t survive, then what hope was there for Data General, Wang, Prime and the other smaller and less innovative mini makers?

Afterthought

It’s easy to talk about the failure of ‘minis’. It’s harder to identify the ‘minis’ of today: an important category of devices that is doomed to extinction.

There are other categories of computing devices that were once important and highly popular but have since disappeared: bit-slice processors, workstations, iPods and others.

It’s thought provoking (and much harder) to try to identify devices that are being made today that have a limited life-span. I don’t think anyone thought minis were doomed in their heyday. What categories of device are important today but are ultimately doomed?

[1] Most notably, of course, the first version of Unix was written on a DEC PDP-7 minicomputer.

[2] I’ve chosen the System/36 for this illustration partly because part of my job, during my brief time at IBM in the 1980s, was to help IBM’s salesforce sell these machines. We didn't have much success in an era in which the IBM PC was starting to sell in huge volumes.

[3] There is an exception to this: when the computing power needs to be close to a particular site. And indeed there are ‘mini’ sized boxes at, for example, mobile wireless base stations. But the actual computer in these boxes is usually much smaller.

It's worth noting that Microsoft supported the Alpha processor. I remember that NT setup media came with two distinct folders: Intel and Alpha.

Categories of devices that are doomed.

1. Laptop computers. These will be replaced by interfaces that are driven by a personal compute device and work compute devices.

2. Desktop Computers. These will be replaced by interfaces that are driven by work compute devices, and personal compute devices.

Compute devices will continue to shrink. The reality is that all interfaces can be driven by the compute that is carried by a standard human today, and that capability will only continue to increase exponentially.