How Intel Missed the iPhone: XScale Era

A challenge to the conventional narrative on Intel's smartphone miss

This is a follow-up to our recent article on Intel’s Immiseration, which charted Intel’s current woes. That post traced the firm’s malaise to its historic failure in the smartphone System-on-Chip market. This post explores how that failure happened. In it, we’ll expand on our previous discussion in The Paradox of x86 and challenge much of the conventional narrative about how Intel ‘missed’ the iPhone.

This is a long post, so it’s been split into two installments: XScale Era and Atom Era. Even this first installment is too long for many email readers, so you may need to click the title to read the full article online.

Otellini’s Farewell

“We ended up not winning it or passing on it, depending on how you want to view it. And the world would have been a lot different if we'd done it …"

Paul Otellini, Intel CEO, 2013

There is a bittersweet quality to the late Paul Otellini’s 2013 valedictory interview as CEO of Intel, given to Alexis Madrigal of the Atlantic. The company's achievements under his leadership, set out in the interview, were undoubtedly impressive:

Under his watch since 2005, it created the world's best chips for laptops, assumed a dominant position in the server market, vanquished long-time rival AMD, retained a vertically integrated business model that's unique in the industry, and maintained profitability throughout the global economic meltdown.

… he delivered quarter after quarter of profits along increasing revenue. In the last full year before he ascended to chief executive, Intel generated $34 billion in sales. By 2012, that number had grown to $53 billion.

The $11 billion Intel earned in 2012 easily beats the sum total ($9.5) posted by Qualcomm ($6.1), Texas Instruments ($1.8), Broadcom ($0.72), Nvidia ($0.56), and Marvell ($0.31), not to mention its old rival AMD, which lost more than a billion dollars.

And yet, one ‘miss’ hangs over the whole narrative:

But, oh, what could have been! Even Otellini betrayed a profound sense of disappointment over a decision he made about a then-unreleased product that became the iPhone.

The iPhone!

Every once in a while a revolutionary product comes along that changes everything.

Steve Jobs, Macworld 2007 Keynote

Steve Jobs’s opening line in the keynote that launched the iPhone might initially have seemed like hyperbole. But any sense of exaggeration soon disappeared. In 2013, the year of Otellini’s departure from Intel, Apple would sell 150 million iPhones, helping it generate over $170bn in sales and $37bn in profits, far surpassing Intel’s results.

The most important silicon in 2013’s iPhone 5S, the A7 System-on-Chip (SoC), was made not by Intel but by Korean rival Samsung. The SoC contains the processor that runs user apps - the ‘application processor’ - along with lots of other silicon powering graphics and much more. The SoC is almost always the most complex chip in a modern smartphone.

A year later, Apple switched the manufacturing of the A7’s successors to Taiwan Semiconductor Manufacturing Company (TSMC), providing TSMC with a huge revenue stream. By the late 2010s, over half of the Taiwanese company’s revenue would come from making smartphone chips. That revenue—particularly from Apple—provided essential support for TSMC’s investments in new and more advanced fabs. Fabs that would take Intel’s decades-long crown as technology leader in ‘logic’ integrated circuits.

By 2013 the importance of this ‘miss’ for Intel was clear to all, not least Otellini. The Atlantic article is prophetically titled “Can the Company That Built the Future Survive It?”

In 2024, the survival of Intel is a pressing issue, not just for shareholders and employees, but for the United States as a semiconductor technology leader.

How did this happen? How could Intel miss such an important opportunity?

In the Atlantic article, Otellini frames it as a personal decision based purely on financial terms:

The thing you have to remember is that this was before the iPhone was introduced and no one knew what the iPhone would do... At the end of the day, there was a chip that they were interested in that they wanted to pay a certain price for and not a nickel more and that price was below our forecasted cost. I couldn't see it. It wasn't one of these things you can make up on volume. And in hindsight, the forecasted cost was wrong and the volume was 100x what anyone thought [my emphasis].

There is a mythology about Intel’s iPhone ‘miss’. It’s a mythology about how Intel couldn’t see the iPhone’s potential and so ‘turned it down’ in a decision driven purely by the financials of a possible deal. In other words, a classic case of ‘disruption’, with an incumbent hooked on high prices and margins and uninterested in a simpler and cheaper product.

A Vox article from 2016 provides a good example - probably strongly influenced by Otellini’s comments - of how Intel’s smartphone miss is often presented:

Intel’s decline is a classic story of disruptive innovation …

With many companies selling ARM chips, prices were low and profit margins were slim. It would have been a struggle for Intel to slim down enough to turn a profit in this market.

And in any event, Intel was making plenty of money selling high-end PC chips. There didn’t seem to be much reason to fight for a market where the opportunity just didn’t seem that big.

Or take TechCrunch’s analysis also from 2016 - complete with a link to Otellini’s Atlantic article:

By the time ARM was gaining traction and Jobs had secretly gone to work on the iPhone, Intel was thoroughly addicted to its fat margins. Thus it was no surprise that Intel passed Steve Jobs’ suggestion that they fabricate an ARM chip for the iPhone. Intel didn’t want to be in the low-margin business of providing phone CPUs, and it had no idea that the iPhone would be the biggest technological revolution since the original IBM PC. So Intel’s CEO at the time, Paul Otellini, politely declined.

There is a problem though. Key elements of this narrative are demonstrably false. Others just don’t ring true.

First, it’s not true that Intel had little interest in the market for phone chips.

At the time of the iPhone’s launch, it had already invested heavily in trying to break into the smartphone market. It would continue doing so for many years afterward when it was clear that the opportunity was ‘big’.

Second, if any company and CEO had the concept of disruption in their DNA, then it was Intel and Otellini.

Andy Grove, Intel’s CEO between 1987 and 1998, worked closely with Clayton Christensen, who devised the concept. Christensen wrote a warm tribute to Grove when Grove passed away in 2016, one that emphasized their discussions on disruption:

When Intel’s microprocessor business was being threatened by low-cost competitors, he asked me what to do about it. But instead of telling him how to respond, I gave him what he really wanted: I laid out the theory of disruption for him and his team so that they could predict the next threat — or opportunity — in their path.

Otellini had served as Grove's technical assistant early in his career. Even though Grove had, by the mid-2000s, long since retired, it’s hard to believe that Otellini, as a relatively new CEO, didn’t seek the counsel of his former mentor on a crucial decision like this. Or that Grove didn’t pick up the phone to his protégé.

Otellini later recalled in the Atlantic interview how in 2005 [around the time of discussions with Apple] he had started to try to “… get Intel to start thinking about ultracheap [chips for $10 or less]” on the basis that there would be sales of billions of computing devices with these chips. Intel was already creating cellphone chips that sold for between $10 and $20, so this was not that much of a leap. It’s hard to believe that he would turn down such an opportunity when he knew that Intel had to start to make less expensive chips.

As a final hole in the ‘it was all about price and Intel couldn’t or wouldn’t compete’ argument, we’ll see later that Intel did get one chip into the first iPhone - a memory chip that was probably more price-sensitive than the SoC.

Third, the success of an Apple phone would not have been hard to predict.

In 2006 Apple sold nearly 40 million iPods. The world was waiting expectantly for Apple to launch a mobile phone. Otellini (and Grove) must have seen the possibility that a new phone from Apple might be a runaway success.

Finally, it gives no weight to the quality and suitability of Intel’s technology compared to its competitors.

Is it credible to think Apple was solely driven by price for a vital component of its most important product in decades? If Intel had the best technology, would it have been turned away purely to save a few nickels? The narrative also denies credit to Intel’s competitors in the ARM ecosystem for their response to Intel’s attempts to enter the phone market.

This leaves lots of questions. What happened in the discussions between Intel and Apple? How could a company acutely aware of the risk of being disrupted miss the iPhone? Why did Otellini, in his Atlantic interview, frame the decision as one solely based on price?

Part of the answer to the final question, perhaps, is that Otellini was acting as a lightning rod for criticism. He was creating a narrative that the problem had been costs, faulty forecasting, and the departing CEO’s vision and decision-making. It was not a problem with Intel or its technology. Intel could still succeed in the smartphone SoC market under new CEO Brian Krzanich.

But if this narrative is questionable, why did Intel miss out on the smartphone market? Scratch the surface and it’s a much more complex and interesting story than the conventional narrative. Rather than one questionable decision, it is a story of a series of missed opportunities, and multiple strategic errors, combined with bad luck and poor timing over almost two decades. It’s a story where Intel had the technology that it needed to be successful in the phone market but, driven by a combination of lack of patience and hubris, gave it away.

In the first part of this article, we’ll outline the story of Intel’s efforts in the phone market leading up to the launch of the iPhone. Then we’ll try to deduce what happened in the discussions between Intel and Apple, including how Otellini’s comments might be simultaneously true and misleading.

In the rest of this post - Part 1 of the story - we’ll look at the first decade of Intel’s efforts in the cellphone SoC market culminating in the launch of the iPhone at the start of 2007. Part 2 - the Atom Era - will consider Intel’s attempts to break into the post-iPhone smartphone market.

Strong and Speedy ARM

The story starts at minicomputer maker Digital Equipment Corporation (DEC). In the late 1980s DEC was searching for an architecture to power the successor to the popular, but highly complex (in the sense of Complex Instruction Set), VAX series of minicomputers.

After a series of iterations, an architecture initially known as DECchip but later renamed DEC Alpha was launched in November 1992. DEC promoted the Alpha as the world’s fastest commercially available microprocessor.

The fastest commercial reduced-instruction-set computer (RISC) chip around today is based on a new, 64-bit architecture called Alpha. Designed and manufactured by Digital Equipment Corp., the chip works at a clock speed of 200 MHz, making it twice as fast as any previously delivered microprocessor.

IEEE Spectrum, How DEC developed Alpha, July 1992

Intel’s fastest design at the time was the 80486DX2, with a maximum clock speed of just 66MHz.

But the Alpha’s performance came at a price: power consumption that made it unsuitable for lower power applications.

Dan Dobberpuhl and colleagues at DEC on the Alpha team, inspired by work at UC Berkeley on low-power devices, started to look at the possibility of making a lower-power version of the Alpha. Initial results were promising:

So we reduced the VDD supply from 2.5V to 1.5V. As a result we got a pretty decent performance/power ratio on an Alpha chip. As I recall, the frequency went down to about 100 megahertz from 200, but the power was down to less than 5 watts from the original 30.
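The numbers in that recollection line up with the usual first-order rule of thumb for CMOS chips (our assumption here, not something the quote states): dynamic power scales with switched capacitance times the square of the supply voltage times the clock frequency. Halving the clock alone halves power; cutting the supply from 2.5V to 1.5V multiplies it by a further 0.36. A minimal Python sketch, using a hypothetical `scaled_dynamic_power` helper that holds capacitance constant and ignores leakage:

```python
def scaled_dynamic_power(p0_watts, v0, v1, f0, f1):
    """First-order CMOS dynamic power scaling, P ~ C * V**2 * f.

    Assumes switched capacitance and activity stay constant and ignores
    leakage: only the supply voltage (v0 -> v1) and clock (f0 -> f1) change.
    """
    return p0_watts * (v1 / v0) ** 2 * (f1 / f0)


# Figures from the recollection above: 30 W at 2.5 V and 200 MHz,
# scaled down to 1.5 V and 100 MHz.
estimate = scaled_dynamic_power(30.0, 2.5, 1.5, 200e6, 100e6)
print(f"Estimated power: {estimate:.1f} W")  # prints "Estimated power: 5.4 W"
```

The estimate of roughly 5.4 W sits in the same ballpark as the "less than 5 watts" recalled, which is about all a model this simple can promise; it says nothing about any other circuit changes DEC may have made.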

At the same time, a group of DEC engineers based in Austin, Texas was approaching the challenge from the opposite direction, by exploring how to ‘soup up’ an existing low-power RISC architecture. In the end, the Austin team’s approach won out:

Well, the low-power Alpha turned out to be an interesting technical idea but not an interesting marketing and business idea, because the whole premise of Alpha was high performance. Doing anything of lesser performance didn’t seem to make a whole lot of sense.

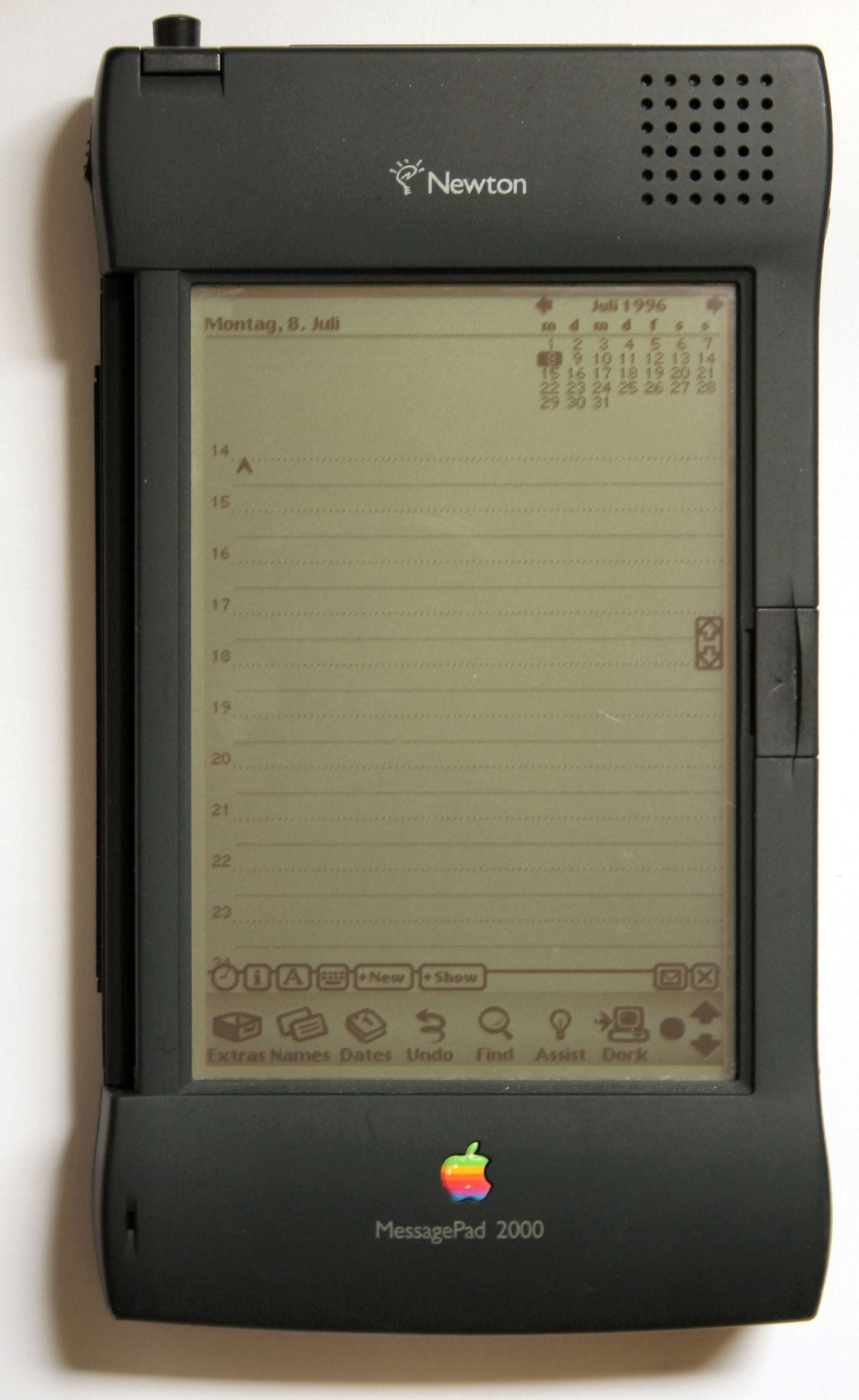

But which existing low-power RISC architecture? There were several alternatives, including MIPS and Hitachi’s SuperH, but the team eventually settled on ARM. Not the least of the advantages of using ARM was that there was an existing ARM customer with a thirst for more powerful designs: Apple Computer, which was using ARM designs in the Newton. As an ARM shareholder, Apple could pressure ARM to license the architecture to DEC. Not that pressure was probably needed, with ARM’s management focused on expanding its licensee base rather than building more powerful chips for Apple. A deal was struck between ARM and DEC for a new kind of license, one that allowed DEC to design its own chips implementing the ARM ISA. It would become known as an ‘Architecture License’.

DEC’s Alpha team, based in Austin and Palo Alto, was soon working with ARM engineers to create the StrongARM series of processors. The first fruit of that collaboration was the SA-110, announced in February 1996.

The market the StrongARM team was aiming for was clear from the paper that introduced the new design:

As personal digital assistants (PDA’s) move into the next generation, there is an obvious need for additional processing power to enable new applications and improve existing ones. While enhanced functionality such as improved handwriting recognition, voice recognition, and speech synthesis are desirable, the size and weight limitations of PDA’s require that microprocessors deliver this performance without consuming additional power. The microprocessor described in this paper—the Digital Equipment Corporation SA-110, the first microprocessor in the StrongARM family—directly addresses this need by delivering 185 Dhrystone 2.1 MIPS while dissipating less than 450 mW. This represents a significantly higher performance than is currently available at this power level.
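"Performance per watt", the metric Otellini would later put at the center of Intel's own mobile pitch, is simply throughput divided by power. A quick Python sketch applying it to the SA-110 figures quoted above (the `mips_per_watt` helper is illustrative only):

```python
def mips_per_watt(mips, watts):
    """Performance per watt: throughput divided by power dissipated."""
    return mips / watts


# SA-110 figures from the paper quoted above: 185 Dhrystone 2.1 MIPS
# while dissipating less than 450 mW. Because 450 mW is an upper bound
# on power, the result is a lower bound on efficiency.
sa110 = mips_per_watt(185, 0.450)
print(f"SA-110: at least {sa110:.0f} Dhrystone MIPS per watt")  # prints 411
```

Since the paper gives 450 mW as a ceiling, the roughly 411 MIPS per watt is a floor on the SA-110's efficiency, and Dhrystone MIPS is itself only a rough proxy for real-world performance.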

Unsurprisingly, the first design win was at Apple and the SA-110 soon appeared in a new version of the Newton, the MessagePad 2000.

StrongARM’s performance meant that it soon won designs in PDAs such as the Compaq iPAQ and, outside its original niche, in desktop computers and set-top boxes.

DEC sues Intel, Intel sues DEC, and DEC sells.

It wasn’t long, though, before StrongARM changed hands.

By the mid-1990s, DEC’s minicomputer business was in steep decline and the company had notched up several years of multibillion-dollar losses. For all their technical prowess, the Alpha microprocessors were making limited headway against Intel’s rapidly improving designs.

In May 1997 DEC sued Intel, alleging that the Pentium processor infringed on several of DEC’s Alpha patents. Wired reported that:

DEC has claimed that it approached Intel about working together on the chip technology in 1990 and later offered to license the Alpha technology to Intel for use in improving the performance of its chips. DEC said Intel studied the chip, but decided not to go with it.

Intel countersued, alleging that DEC had misappropriated trade secrets and had infringed Intel’s patents.

In August 1997, the two companies settled, reaching an agreement where DEC sold its semiconductor manufacturing operations and the StrongARM business to Intel for $700m. It was a deal that worked for both sides. DEC divested its uncompetitive fabs and Intel acquired the successful StrongARM business, as a potential replacement for its aging i860 and i960 RISC designs.

But there was a twist to the inclusion of StrongARM in the sale. Might Intel, as owner of the powerful StrongARM designs, pose a threat to ARM’s business?

Robin Saxby, ARM’s CEO at the time, has recalled a conversation with Steve Jobs, still a major ARM shareholder, but no longer a major customer:

Intel acquired Digital and Intel got the rights to the Arm license through that contract … I had to get board approval on every deal. I discussed it with Apple and basically Steve Jobs … sorry he's not around anymore … was not in favor of licensing Intel and tried to stop me doing it. I spoke to him on the phone and he said you're the Chief Executive of ARM Robin, who am I to argue with you and he approved the deal.

And here, although Saxby’s view prevailed, there was a credible argument to be made against transferring the architecture license to Intel. ARM was a minnow (turnover £27m - around $45m - in 1997) compared to Intel (1997 turnover $25bn). Intel couldn't compete directly with ARM by licensing its designs to third parties, but its chips might represent formidable competition for other firms considering adopting ARM’s own, less powerful, processor designs.

By the late 1990s, there was a growing interest in using those designs in cellphones following Nokia’s use of an ARM-based Texas Instruments SoC in one of their most successful handsets. We’ve covered this earlier in The Arm Story Part 3: Creating a Global Standard.

Cellphones presented a huge opportunity and ARM was the emerging standard, but Intel, with its resources and ownership of StrongARM, was now well placed to compete and win against ARM and its other partners.

XScale

Initially, Jobs’s concerns about Intel seemed like they might be well founded. Intel owned the most powerful ARM designs and looked to make the most of them.

Under CEO Craig Barrett, Intel invested in new devices in the StrongARM family but rebranded them as XScale, removing the ARM connection from their naming. The XScale family included several distinct lines targeted at different markets. The PXA line, starting with 2002’s PXA210 and PXA250, was for mobile phones and PDAs, while the IXC, IOP, IXP, and CE lines targeted storage, network processors, and consumer electronics devices.

If the XScale rebrand signaled a distancing from ARM, then the addition of Intel’s own ‘Wireless MMX’ instructions in the PXA270 in 2004 moved XScale further away from other ARM designs.

Wireless MMX transplanted Intel’s MMX instructions, used to accelerate multimedia operations on desktop CPUs, into XScale. Wikipedia has a good summary of what Wireless MMX added to the XScale architecture:

Wireless MMX (code-named Concan; "iwMMXt"): 43 new SIMD instructions containing the full MMX instruction set and the integer instructions from Intel's SSE instruction set along with some instructions unique to the XScale. Wireless MMX provides 16 extra 64-bit registers that can be treated as an array of two 32-bit words, four 16-bit halfwords or eight 8-bit bytes. The XScale core can then perform up to eight adds or four MACs in parallel in a single cycle. This capability is used to boost speed in decoding and encoding of multimedia and in playing games.
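The key idea behind "eight adds ... in a single cycle" is SIMD: one 64-bit register treated as eight independent 8-bit lanes. Wireless MMX does this in hardware; the Python sketch below only emulates the semantics of a wraparound packed byte add in software, using the "SIMD within a register" trick, to show what the lanes mean. The function names are illustrative, not iwMMXt mnemonics, and SIMD instruction sets such as MMX also offer saturating adds, which this sketch does not model.

```python
MASK64 = (1 << 64) - 1
LOW7 = 0x7F7F7F7F7F7F7F7F   # low seven bits of every byte lane
HIGH1 = 0x8080808080808080  # top bit of every byte lane


def pack_bytes(values):
    """Pack eight unsigned 8-bit values into one 64-bit word (lane 0 = lowest byte)."""
    if len(values) != 8:
        raise ValueError("expected exactly eight byte values")
    word = 0
    for lane, value in enumerate(values):
        word |= (value & 0xFF) << (8 * lane)
    return word


def unpack_bytes(word):
    """Split a 64-bit word back into its eight 8-bit lanes."""
    return [(word >> (8 * lane)) & 0xFF for lane in range(8)]


def packed_add_bytes(a, b):
    """Add eight byte lanes at once, wrapping modulo 256 within each lane.

    Adding only the low seven bits of each lane means no carry can spill
    into the neighboring lane; the top bit of each lane is then patched
    in with XOR, and any carry out of a lane is discarded.
    """
    low_sum = (a & LOW7) + (b & LOW7)
    return (low_sum ^ ((a ^ b) & HIGH1)) & MASK64


a = pack_bytes([250, 1, 2, 3, 4, 5, 6, 7])
b = pack_bytes([10] * 8)
print(unpack_bytes(packed_add_bytes(a, b)))  # [4, 11, 12, 13, 14, 15, 16, 17]
```

Note how the first lane wraps (250 + 10 = 260, which becomes 4) without disturbing its neighbor, exactly the lane isolation that lets a single instruction do eight independent additions.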

Formally, the Wireless MMX instructions were implemented as an ARM architecture-compliant coprocessor. However, whatever the technical and legal status of this addition to the architecture, it sowed confusion and concern:

Intel Corp.'s move this week to deploy new, multimedia instructions in its RISC chip architecture is being seen as its latest effort to distance itself from its processor partner, UK-based ARM Holdings plc, according to analysts. The announcement … also raised some questions–if not confusion–over compatibility issues between the respective RISC chips from Intel and from other ARM licensees.

Intel’s intentions looked like a classic embrace, extend, and extinguish strategy. If a customer chose XScale and used Wireless MMX they would be locked into Intel. It threatened parts of the ARM ecosystem and prompted a response from ARM. In the book Culture Won, the former ARM executive Keith Clarke describes that response and the reaction to Intel’s moves from OEMs (Original Equipment Manufacturers) in the mobile phone market (my emphasis):

ARM feared this would lead to an imbalance of competition in the market and responded with the Neon multimedia extensions for Armv7, first implemented in Cortex-A8. It's probable that some of the OEMs in the mobile market also saw Intel's Wireless MMX as a threat to their purchasing flexibility. The mobile market was not the PC market - Intel was not going to dominate that easily.

Wireless MMX didn’t just sow suspicion; it was also a questionable choice for the mobile phone market, where including a Digital Signal Processor (DSP) would have been a better decision. Quoting The Register:

A series of poor management decisions ensured that StrongARM was well positioned for a market that was on the decline [PDAs] and rarely competitive in a market that boomed [mobile phones]. Early on, Intel decided against integrating dedicated digital signal processing into the StrongARM chip, later renamed XScale. While this decision was justifiable for fixed embedded markets and for PDAs, it put the chip at a huge disadvantage for lower-cost devices that needed voice capabilities.

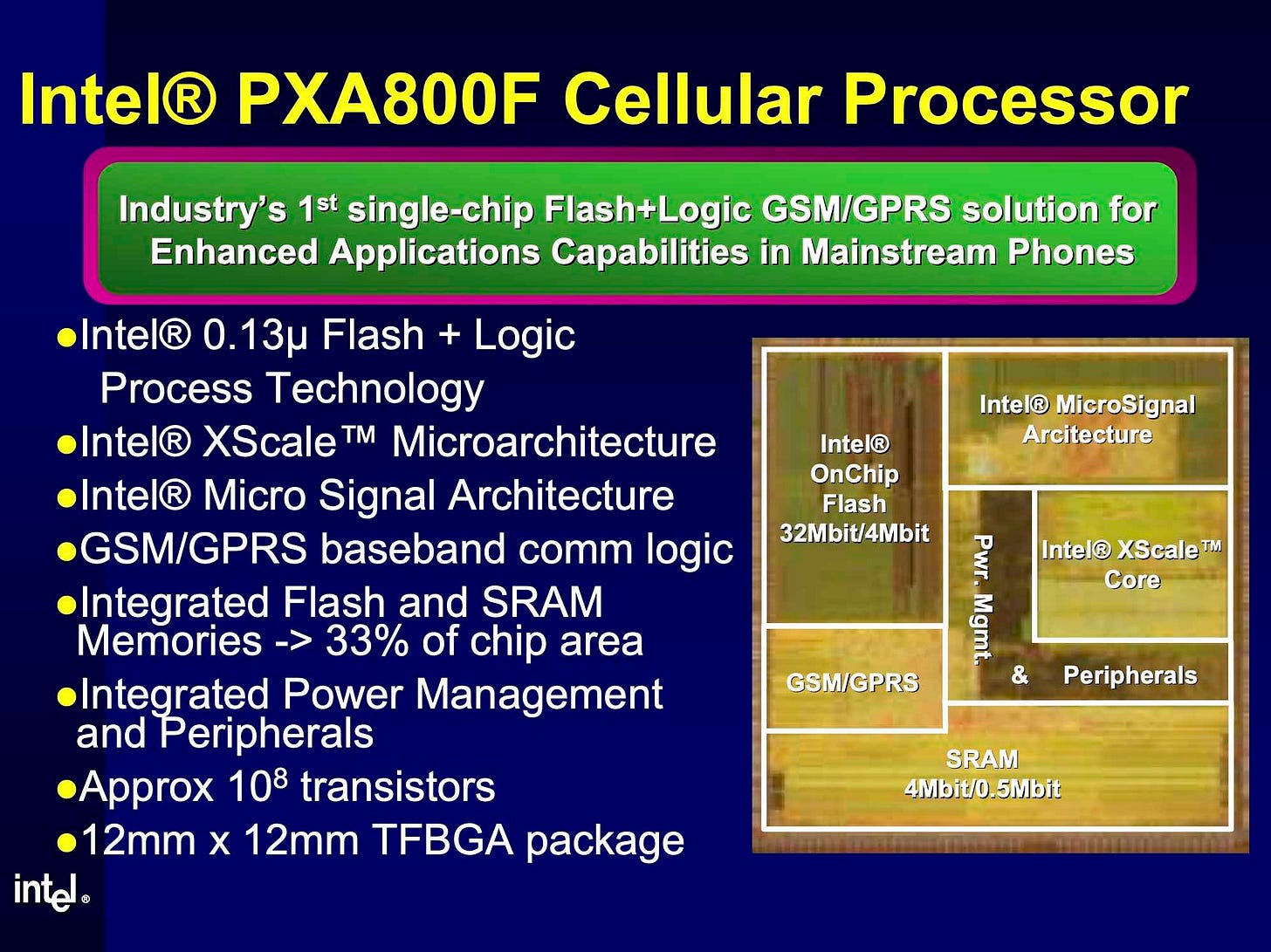

By 2004, though, Intel had corrected this mistake with the PXA800F cellular processor, which included an Intel MicroSignal Architecture DSP.

Intel created example cellphone designs with the PXA800F.

This wasn’t the limit of Intel’s aspirations for the XScale PXA line. In 2005 Intel announced a PXA chip that could reach a clock speed of over a gigahertz:

Intel today introduced 'Monahans', the gigahertz-class successor to 'Bulverde', the current XScale PXA27x family.

The 90nm part is due to be formally launched in Q4 2005, Sean Maloney, VP and General Manager of Intel's Mobility Group, said today at Intel Developer Forum.

Describing the chip as "another step ahead in performance", Maloney demo'd a pre-production Monahans running at 1.25GHz. The chip was being used to decode and play back HD video on a PDA screen.

However, the PXA800F and Monahans had to compete with well-established incumbents like TI’s OMAP platform.

And by now ARM’s in-house cores - particularly the Cortex-A8, announced in 2005 - were closing the gap and even promised to surpass XScale. According to Mobile Unleashed by Daniel Nenni and Don Dingee:

Support for Cortex-A8 was instantaneous. Samsung, TI, and Freescale … were first, along with EDA tool vendors Cadence and Synopsys, and operating system providers Microsoft and Symbian.

It was the first note of the death knell for Intel XScale, at least as an applications processor. The gap in processor design elegance that DEC had initially established had closed quickly. As ARM progressed to Cortex-A8, gaps in low-power DSP integration for 3G, multimedia such as mobile GPUs, and advanced OS features such as Java performance blew wide open.

And Intel’s phone division had problems beyond its technology:

One of the problems at Intel was (and still is) sales force synergy; selling parts for PCs and parts for mobile devices requires two vastly different skill sets and compensation strategies. Without a robust design win pipeline, momentum wanes. The last major design wins for XScale in mobile devices were unwinding.

There was no lack of ambition, but XScale was struggling against the competition.

Otellini Takes Over

Meanwhile, by the mid-2000s, Intel’s main desktop and server businesses were struggling. The company had bet heavily on Itanium, a new Very Long Instruction Word (VLIW) architecture, as the successor to x86 designs on servers and high-end desktops. Itanium turned out to be a disaster. While Intel was distracted, AMD developed a 64-bit version of x86 that gave customers better performance and backward compatibility. By the middle of 2005, AMD was rapidly gaining ground:

AMD now accounts for 21.4 percent of all desktop, notebook and server processors using the x86 instruction set that were shipped during the fourth quarter, Mercury Research plans to announce next week. The chipmaker's share grew from 17.7 percent in the third quarter, on strong gains in all three of those segments.

Intel’s lunch was being eaten in real-time.

Decisive action was needed when Paul Otellini took over as Intel CEO from Craig Barrett in May 2005.

The choice of Otellini as CEO was controversial for some, largely because he was the first Intel CEO not to have a technical background. A Fortune magazine article published in April 2005 asked ‘Is Paul Otellini The Right Man for Intel?’

Andy Grove had no doubts:

Andy Grove, the great ex-CEO of Intel, is talking about Paul Otellini, his onetime aide who is set to become the chip pioneer’s fifth chief executive on May 18. And Grove is annoyed. “Bullshit!” he says. Parroting a remark I’ve heard repeatedly interviewing people about the new boss, I’ve just asked if it matters that Otellini is not a technologist. Current and former Intel insiders, Wall Street and industry analysts, competitors, and customers–they all make the same obvious point about Otellini, whose background is in finance and marketing.

Grove retorts it is “superficial” to focus on Otellini’s lack of an engineering degree. “Paul is a self-taught technologist. He knows the products better than I ever did,” Grove says.

Let’s deal with one myth about Otellini straight away. He was not just a ‘numbers guy’. He started his career in Intel’s finance department but, by the time of his appointment as CEO, he had already had extended periods successfully running Intel’s microprocessor division, groupwide sales and marketing, and then the Intel Architecture Group.

This Fortune article makes it clear what others thought Otellini needed to do:

Atiq Raza, former president of AMD and now a venture capitalist, thinks Otellini has the skills to refocus the company. “Intel’s meanderings will reduce under Paul,” he says. “You will see a sense of purpose once he has totally taken control.”

How Otellini intended to apply that sense of purpose was soon clear. In April 2006 a broad restructuring of Intel was set in motion:

“No stone will remain unturned or unlooked at,” Otellini said at an analyst conference in New York City. “You will see a leaner, more agile and more efficient Intel Corp.”

And the ‘meanderings’ would come under particular focus:

Over the next 90 days, executives will identify underperforming business groups and cost inefficiencies but will not wait until the end of the review to start implementing changes, Otellini said.

In September 2006 the results of this review were made public:

Intel said after the market closed that it will eliminate 10,500 jobs — about 10 percent of its work force — through layoffs, attrition and the sale of underperforming business groups as part of a massive restructuring.

The refocusing was not just about cutting jobs and business units. There would be a new focus on what Intel’s customers wanted:

No longer should it focus on processor speed for speed’s sake alone. Instead it should listen to what customers want–the way a marketer, not an engineer, would approach designing products. “People still want performance, but they want other things, and we need to deliver and discuss performance in a different way,” says Otellini. Now that he is becoming boss, Intel engineering is all about catering to customers. Call it the Otellini amendment to Moore’s law: “This is the first time in Intel’s history where the product orientation of Intel is outward-facing,” he says.

And what they wanted was low power and wireless:

… he successfully fought with engineers over the need to create Centrino, Intel’s wireless brand. In typical Intel tradition, the engineers were bent on designing a faster, more powerful processor. Otellini was convinced that users of laptops already had plenty of power and speed–what they cared about was heat generation, battery life, and wireless capabilities. He won, and Centrino paid off. Not only has it been a giant success, but it is now the model for the way Otellini is reshaping all of Intel’s businesses.

Listening to customers and refocusing on power consumption led to one of the early successes of Otellini’s tenure. Apple had been using IBM PowerPC processors in its Mac desktops and laptops, but its laptops were struggling due to PowerPC’s excessive power consumption. Otellini persuaded Steve Jobs to switch to Intel’s newest, lower-power designs based on its Core architecture.

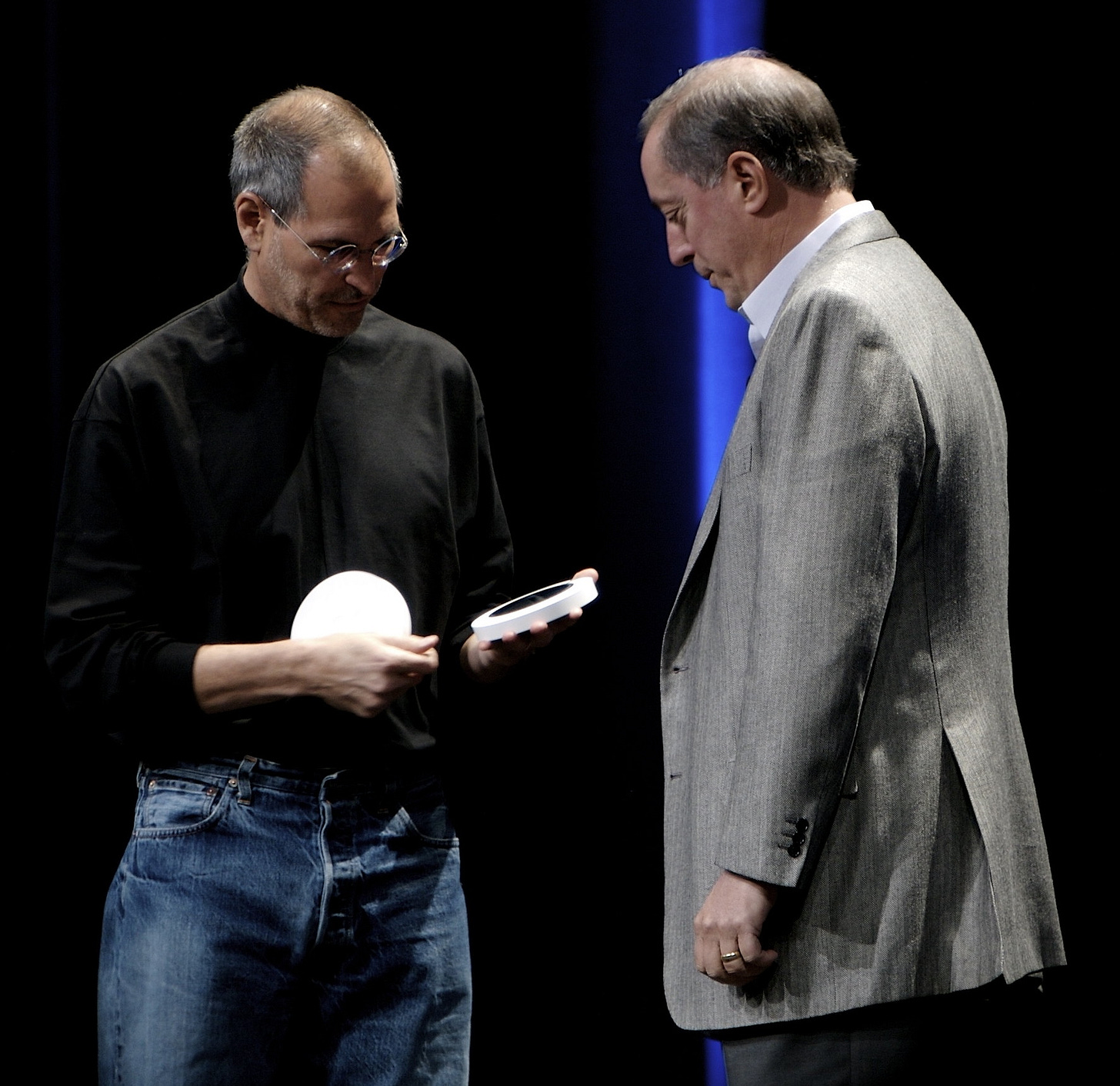

The importance that the usually reserved Otellini, with his marketing background, placed on capturing such a high-profile customer for Intel’s chips is clear from his willingness to appear in a ‘bunny suit’ on stage with Steve Jobs at the launch of the Intel Macs.

Intel Sells XScale

Otellini’s enthusiasm for low power and wireless connectivity didn’t extend to the struggling, money-losing XScale business, where the much-needed patience was in short supply.

Already by the start of 2005, there was speculation about the future of XScale and Intel’s commitment to the phone business:

Progress has been so slow that rumors circulate periodically that Intel will get out of cellphone chips altogether.

Which prompted a vigorous response:

“No way… We’re not getting out of cellphones”

However, a commitment to phone chips wasn’t a commitment to XScale phone chips.

In the middle of 2006, as part of Otellini’s restructuring and just before the launch of the iPhone, Intel sold XScale’s PXA mobile SoC business to Marvell for a modest $600m, a little less than the $700m it had paid for DEC’s fabs and the StrongARM business almost a decade previously. Intel had 1,400 engineers working on XScale, and the company confirmed that many of these, presumably including those with the most experience in mobile SoCs, were expected to join Marvell.

Why did Intel sell XScale? Part of the answer lies in lessons that Intel and Otellini had learned over the previous decade, lessons that in part marked a return to Grove-era thinking. Intel had succeeded under Grove when it focused on x86, an architecture with enormous software support - on the desktop at least - and a cozy duopoly with AMD. It struggled when it tried an innovative new architecture like Itanium or an architecture with lots of competitors like ARM. Otellini’s first job working for Grove had been to analyze one of Intel’s failed architectural adventures, the BiiN project, which in turn had emerged from the failed iAPX432 project:

His first assignment was to write a postmortem for the board on a failed joint venture with Siemens. Insiders joked that the project, called BiiN, stood for “billions invested in nothing,”

Ouch! Otellini would make sure that Intel’s focus stayed on x86, with no ‘meanderings’.

The fact that the XScale business was struggling would have reinforced Otellini’s view that ARM-based devices were not the answer.

Almost a year earlier, soon after becoming CEO, Otellini had laid out a vision for low-power portable computing (my emphasis):

Otellini's pitch was a new generation of devices he dubbed the 'handtop'. The platform is nothing new, of course - PDAs, palmtops and handheld PCs have been around for years - but past attempts to create truly mobile, wireless micro PCs have been hindered by performance and battery life limitations.

Otellini said Intel's new focus on "performance per watt" will remove those limits. The vision is a 0.5W processor with sufficient horsepower to run Windows Vista by 2010.

If Otellini’s ‘handtop’ SoC was to run Windows Vista, with access to the rich ecosystem of Windows software, then it would need to use the x86 instruction set. The powerful Wintel partnership with Microsoft that had dominated the desktop would be recreated in a new generation of portable devices.

Intel also believed it had a potential cellphone winner in its WiMAX wireless technology. In the 2005 Fortune article on Otellini, Sean Maloney, head of Intel’s new Mobility business, made the approach clear:

“It’s a strategic thing for the company. We’re on the attack, not the defensive.” What Maloney has in mind for conquering cellphones is an end-around strategy. It’s called WiMAX, a radio technology being developed by an Intel-led consortium of manufacturers. WiMAX aims to make possible wireless broadband communications for up to a 50-mile radius in much the same way Wi-Fi works at up to 150 feet or so. That could change the game in mobile communications by making today’s cellphones obsolete.

“Bidding” for the iPhone: What we know and one possible scenario

Let’s try to build a picture of Intel’s bid for the SoC in the first iPhone, or at least its discussions with Apple about that chip, which probably took place sometime in 2005.

Walter Isaacson’s biography of Steve Jobs describes the relationship between the two companies, and their CEOs, around that time. Intel was bidding to replace the PowerPC CPUs in the Mac:

Most of the negotiating was done, as Jobs preferred, on long walks, sometimes on the trails up to the radio telescope known as the Dish above the Stanford campus. Jobs would start the walk by telling a story and explaining how he saw the history of computers evolving. By the end he would be haggling over price.

The two companies reached an agreement about CPUs for the Mac, and with Apple as a customer, Intel seemingly had a golden opportunity to get its designs into the iPhone. Intel still had the XScale ARM business and was developing high-performance SoCs for the mobile market. However, dig a little deeper and the position looks much less rosy.

We may never know what transpired in the two CEOs’ discussions about the iPhone SoC; sadly, neither of them is still with us. We do have strong clues, though, and can perhaps make some informed deductions from them.

First, Apple and Jobs were highly secretive, and would have been especially so about a project as confidential as the iPhone.

Jobs would later make it clear to Isaacson that he was sensitive about sharing information with Intel in particular:

… we just didn't want to teach them everything, which they could go and sell to our competitors.

Jobs famously quipped about senior executives leaking sensitive information under an earlier regime at Apple:

“There used to be [a] saying at Apple,” Jobs recalled at our D5 conference: “Isn’t it funny? A ship that leaks from the top.”

Is it likely that Jobs would ‘leak from the top’ about the iPhone to Intel?

Given all this, it’s possible that Jobs didn’t tell Otellini that Apple was developing a phone, and it’s impossible to conceive of him sharing precise details of the first iPhone. Instead, it’s likely that, at most, Jobs outlined the application processor Apple needed: its performance, power consumption, price, and so on. It might even have been presented as an opportunity to bid for the chip in the next version of the iPod. As we’ll see shortly, this probably didn’t look like the specification for a chip in an innovative high-end phone, and it probably wasn’t obvious to Otellini what the chip was for.

If so, then it’s questionable to say that Intel ‘passed on the first iPhone’ when it didn’t even know what product the SoC was for.

Second, Intel didn’t have the right product for Apple.

By the start of 2005, Otellini was already thinking about x86-based smartphone chips. As we’ve seen, Intel’s commitment to XScale was being publicly questioned, and Otellini had probably made up his mind to sell. I suspect he was reluctant to push a design from a business that he expected Intel to dispose of soon. At that time, though, no Intel x86 design was remotely suitable for a device like the iPhone, as the power consumption of all of Intel’s x86 designs was far too high.

With Intel caught between XScale and x86, the timing could not have been worse.

Let’s also switch this around and look at the decision from Apple’s perspective. Apple’s engineers knew what products Intel had in its XScale phone SoC business. If they had believed that Intel had a competitive or superior product, then it seems likely that the engagement would have progressed further than it did. Apple would also have known that Intel’s commitment to XScale was in doubt.

Third, although the transition to Intel on the Mac was, in many respects, a triumph in engineering and project management, it was not unblemished.

Apple had to create a 32-bit version of its OS X operating system for Intel’s x86 architecture, only to phase it out quickly, because Intel was late releasing its 64-bit Core 2 designs.

The strains between the two companies came to the surface in Jobs’s interviews with Walter Isaacson, where Jobs comments:

They're like a steamship, not very flexible. We're used to going pretty fast.

This view was hardly conducive to working with Intel on a project like the iPhone, which was vital, risky, and highly time-sensitive.

Finally, Otellini is ambiguous about whether Intel bid for the iPhone SoC.

“We ended up not winning it or passing on it, depending on how you want to view it.”

Did Intel pass on the iPhone’s SoC, or did it bid and fail based on price?

I’d suggest that had the process progressed to a formal bid, the position would have been clear, and Otellini’s description would have reflected that.

So what happened? Here is one possible scenario:

Otellini had informal discussions with Jobs about Intel making an SoC for an unspecified portable device.

There was little enthusiasm on either side. Intel was unaware that the SoC was for a device like the iPhone. Maybe Jobs quoted a price that seemed low to Otellini compared to the prices for Intel’s advanced XScale SoCs. In any event, Otellini was thinking of an x86-based mobile future.

On the other side, Apple was wary of Intel and its XScale products, and questioned Intel’s commitment to the cellphone business.

That lack of enthusiasm likely meant the discussion didn’t progress very far.

So Intel missed the iPhone.

The iPhone Launch and its Aftermath

One can imagine Otellini’s surprise at the iPhone’s launch in 2007. Steve Ballmer may have laughed at the iPhone soon after its launch, but almost everyone else knew how important it was.

In the months after the launch there was perhaps a dawning realization that Intel’s best chance of building a series of SoCs for future iPhones and their competitors, XScale’s cellphone products and the staff who had designed them, had already walked out of Intel’s doors.

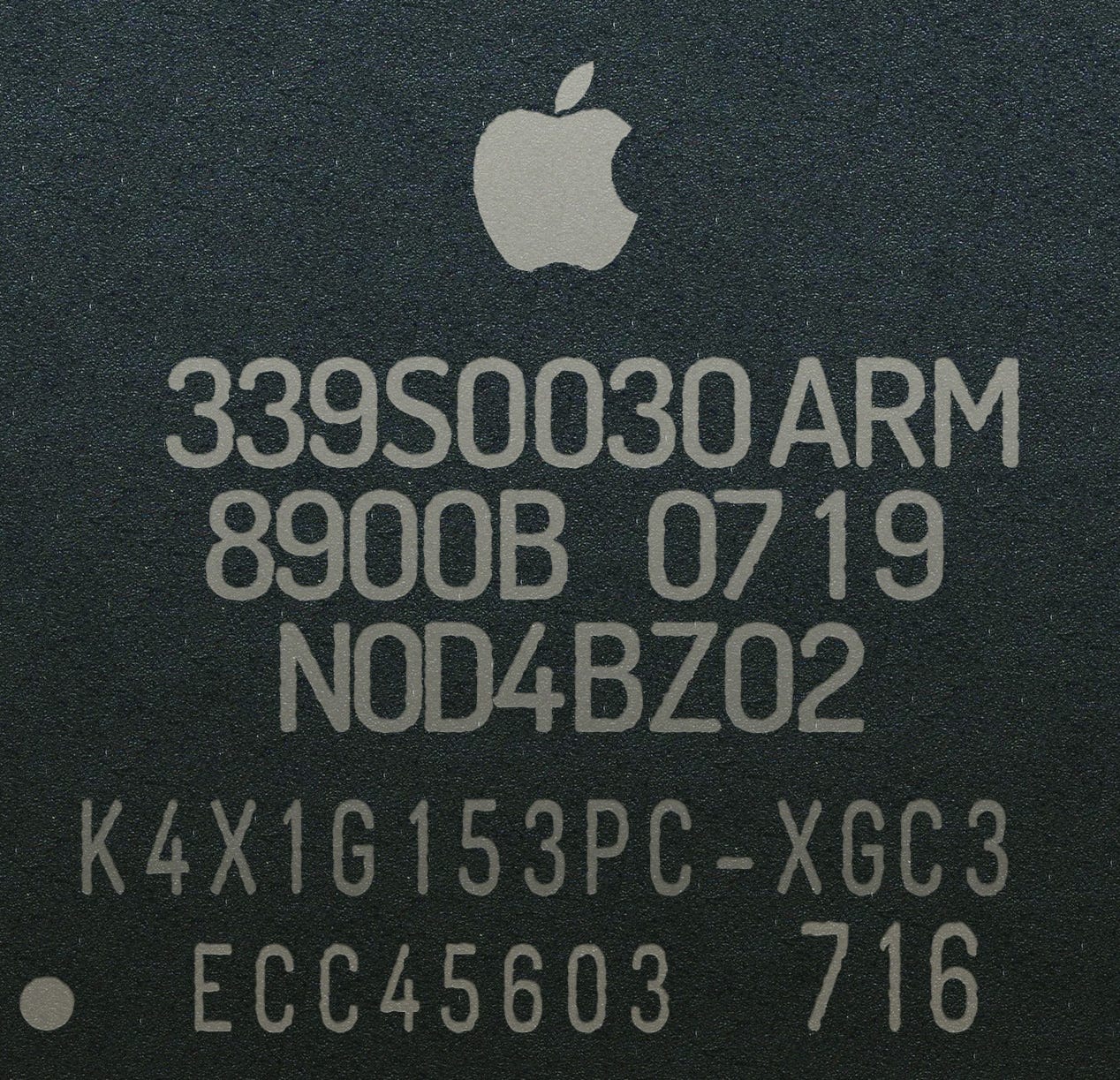

If Intel and Otellini were surprised by the iPhone when it launched, then they were probably also startled by what was inside it. Apple used a relatively modest design from Samsung, the latest in a series of chips used in the iPod:

The S5L8900 contains a 32-bit ARMv6 compatible ARM 1176JZF-S CPU core [one of the ARM cores whose development had, in part, been prompted by the emergence of StrongARM] and is manufactured in the 90 nm CMOS process. The default clock rate of the CPU core is normally 666.6 MHz, but has been lowered by Apple to about 412 MHz, and the bus frequency is about 103 MHz. … As SoC, S5L8900 also has an integrated GPU, a PowerVR MBX LITE clocked at 60 MHz.

Talking up powerful SoCs has been a feature of Apple’s recent iPhone and Mac launches. By contrast, any discussion of the SoC is notably absent from the first iPhone keynote. The performance of the SoC just wasn’t a selling point for the new phone.

While Intel had been developing ever more powerful versions of XScale, with clock speeds targeting more than a gigahertz, Apple materially reduced the clock speed of the iPhone’s SoC below its default to improve battery life. Apple had squeezed every bit of performance out of the modestly specified SoC it had sourced from Samsung, and squeezed the operating system that would become known as iOS onto it.

As an aside, Intel did make one chip that appeared in the original iPhone, as can be seen in the iFixit teardown photos, where the chip with the distinctive ‘i’ symbol is tentatively identified as ‘an Intel Wireless Flash stacked 32 Mb NOR + 16 Mb SRAM chip.’ Intel could compete on price in highly price-sensitive markets!

Even if the opportunity to build the SoC for the first iPhone had passed, perhaps all was not lost for Intel. Future iPhones and their Android competitors would need more advanced SoCs and the opportunity would present itself, year after year, to capture that business.

We’ll explore what happened next in Part 2 of this post, “Atom Era”.

iPhone 1 image courtesy of Frederik Hermann

https://www.flickr.com/photos/netzkobold/902434710/

Original License Attribution-NonCommercial-ShareAlike (CC BY-NC-SA 2.0)
