This post is a brief ‘warm up’ to a short series of posts on interesting aspects of the histories and technology of Nvidia and its competitors. We’ll next look at Nvidia’s PTX ‘assembly language’ (actually an intermediate representation) that has been making headlines due to its use by DeepSeek. I’ll argue that PTX is part of Nvidia’s ‘secret sauce’ and we’ll look at its place in Nvidia’s software ecosystem and the firm’s strategy.

First though, it’s worth doing a recap on Nvidia’s CUDA, which is built on top of PTX. Understanding the role that CUDA plays and its crucial importance is a useful prelude to any discussion of PTX.

Nvidia has once again been making headlines with several important announcements at GTC 2025. Here is the company’s own summary on their blog and Jensen Huang’s Keynote.

Whilst the new hardware might grab many of the headlines, CUDA remains central to Nvidia’s proposition.

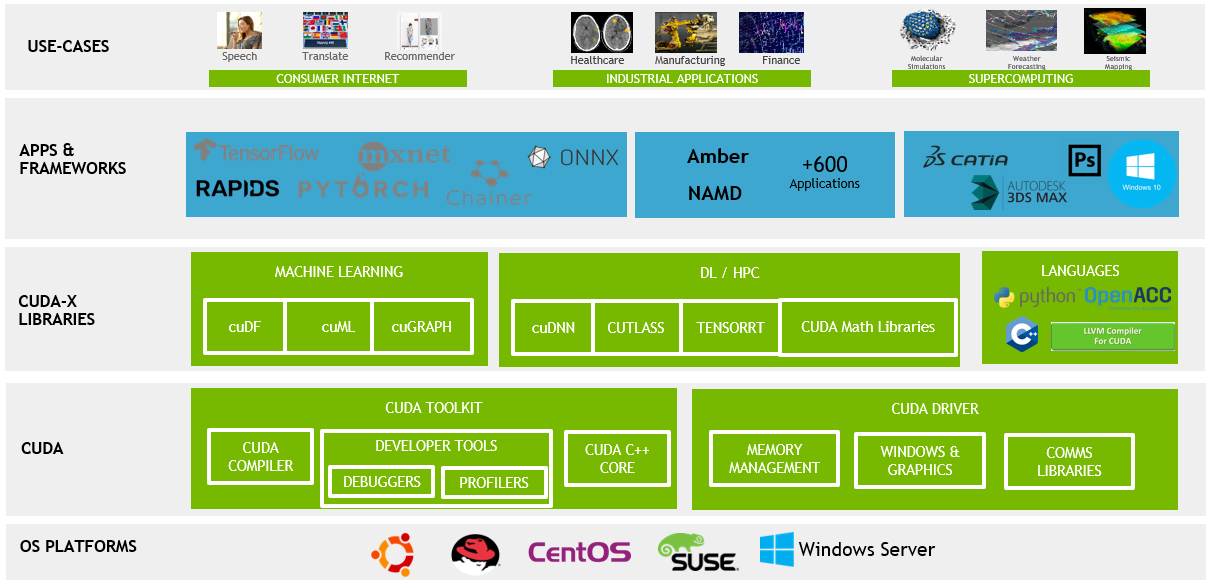

… this slide is genuinely my favorite …

Jensen Huang on the CUDA-X slide

The first version of CUDA appeared in February 2007, so it’s just passed its 18th birthday. In many places CUDA would be old enough to buy some champagne to celebrate its own success (although not in its home state!).

CUDA may be all grown up but it hasn’t forgotten its early years. That first 1.0 version of CUDA is still available for download and the documentation is still online.

Despite its importance and maturity, there is still some confusion as to what ‘CUDA’ actually refers to. Nvidia tries to clear things up in a recent blog post, ‘What is CUDA?’:

So, What Is CUDA?

Some people confuse CUDA, launched in 2006, for a programming language — or maybe an API. With over 150 CUDA-based libraries, SDKs, and profiling and optimization tools, it represents far more than that.

Wikipedia defines CUDA as:

… a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications.

Perhaps we should see CUDA as a (powerful) ‘brand’ for Nvidia’s software ecosystem.

We discussed the background to Nvidia’s investment in CUDA back in 2023 (post previously paid and now free):

Nvidia's Embarrassingly Parallel Success

Disclaimer: For once I have to add that this isn’t investment advice. I don’t hold or intend to hold Nvidia stock!

From that post:

… Jensen Huang and Nvidia could have had no idea that either of these two major use cases [AI and Crypto] for CUDA would be so important, let alone that they would be as valuable for Nvidia as now seems likely.

But then CUDA was always meant to enable General Purpose computing on GPUs.

There is a whole class of computing problems that are embarrassingly parallel (meaning that it’s obvious that they can be split into sub tasks, given to a number of processors and run in parallel). Modelling Neural Networks and mining Bitcoin are just the two most important so far.

There are many important classes of problems that fall into this category. And we are discovering more all the time.

Computational biology, fluid dynamics, financial modelling, cryptography are all important fields where computational work is often embarrassingly parallel.

So we shouldn’t say that Nvidia has built a ‘moat’ around AI. Rather that it has a moat around ‘embarrassingly parallel computing’.

…

This was always a sensible bet for Nvidia to take. Why? Because the world is very, very often embarrassingly parallel.
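To make ‘embarrassingly parallel’ a little more concrete, here is a minimal Python sketch of the pattern: split the work into independent sub tasks, hand them to a pool of workers, and stitch the results back together. The function names (`square`, `split`, `process_chunk`, `parallel_squares`) are hypothetical and chosen purely for illustration. Threads stand in for processors here only to show the structure; in CPython they will not speed up arithmetic like this, whereas a GPU runs thousands of such independent tasks in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x):
    # Each result depends only on its own input: no shared state
    # and no communication between tasks.
    return x * x


def split(items, n_chunks):
    # Deal the work out into roughly equal, independent sub tasks.
    size = max(1, -(-len(items) // n_chunks))  # ceiling division, at least 1
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_chunk(chunk):
    # A worker handles its chunk without looking at any other chunk.
    return [square(x) for x in chunk]


def parallel_squares(items, n_workers=4):
    chunks = split(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() preserves chunk order, so results can simply be concatenated.
        parts = list(pool.map(process_chunk, chunks))
    return [y for part in parts for y in part]


print(parallel_squares(list(range(10))))
```

The key property is that `process_chunk` never needs to know what any other worker is doing, which is what makes adding more processors so straightforward.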

It’s worth noting that whilst this was a ‘sensible’ bet in 2007 it wasn’t a ‘pain-free’ one. CUDA would have come with both a financial cost and an opportunity cost for Nvidia. The firm’s net income for 2007 was less than $450m. The investment in CUDA would have been material relative to that income.

And crucially the firm stuck with that bet.

This is all in contrast with Nvidia’s competitors. Hardware from ATI (which AMD acquired in 2006) was actually the first choice for some key early machine learning projects. In 2004 there was the paper ‘GPU implementation of neural networks’ by Kyoung-Su Oh and Keechul Jung. From the abstract:

Graphics processing unit (GPU) is used for a faster artificial neural network. It is used to implement the matrix multiplication of a neural network to enhance the time performance of a text detection system. Preliminary results produced a 20-fold performance enhancement using an ATI RADEON 9700 PRO board. The parallelism of a GPU is fully utilized by accumulating a lot of input feature vectors and weight vectors, then converting the many inner-product operations into one matrix operation.
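The trick described in that last sentence can be sketched in a few lines of plain Python. This is an illustration of the general idea only, not the paper’s code: the toy `weights` and `inputs` values and the helper names `dot` and `matmul` are made up for the example. Stacking the input feature vectors as rows of one matrix turns many separate inner products into a single matrix product, which is exactly the kind of operation a GPU handles well.

```python
def dot(u, v):
    # Inner product of two equal-length vectors.
    return sum(a * b for a, b in zip(u, v))


def matmul(A, B):
    # Naive (n x k) times (k x m) matrix product, returning an (n x m) matrix.
    cols = list(zip(*B))
    return [[dot(row, col) for col in cols] for row in A]


# Hypothetical toy layer: 2 neurons, each with 3 weights.
weights = [[1, 0, -1],
           [2, 1, 0]]

# A batch of 4 input feature vectors, accumulated as the rows of one matrix.
inputs = [[1, 2, 3],
          [0, 1, 0],
          [4, 0, 1],
          [2, 2, 2]]

# One at a time: 4 x 2 = 8 separate inner products.
one_by_one = [[dot(x, w) for w in weights] for x in inputs]

# Batched: a single (4 x 3) by (3 x 2) matrix product.
weights_t = [list(col) for col in zip(*weights)]
batched = matmul(inputs, weights_t)

# Both routes give the same numbers; only the shape of the work differs.
assert batched == one_by_one
print(batched)
```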

AMD was in some financial trouble at this time though. The firm lost more than $3bn in each of fiscal 2007 and 2008. By contrast Intel made net profits of over $7bn in 2007, so had no such excuse.

By 2012 Nvidia and CUDA were the first choice for machine learning research:

2012 saw AlexNet, demonstrating the power of Convolutional Neural Networks (CNNs) in image recognition. AlexNet was a major step forward but wasn’t the first to use GPUs for image recognition:

AlexNet was not the first fast GPU-implementation of a CNN to win an image recognition contest. A CNN on GPU by K. Chellapilla et al. (2006) was 4 times faster than an equivalent implementation on CPU.

But by 2012 CUDA was available and so AlexNet used CUDA. From then on GPUs became an essential tool for machine learning and CUDA became by far the most popular way of using GPUs for machine learning.

Speaking of AlexNet, the Computer History Museum has just released the program’s original source code along with a fascinating blog post.

In 2020, I reached out to Alex Krizhevsky to ask about the possibility of allowing CHM to release the AlexNet source code, due to its historical significance. He connected me to Geoff Hinton, who was working at Google at the time. As Google had acquired Hinton, Sutskever, and Krizhevsky’s company DNNresearch, they now owned the AlexNet IP. Hinton got the ball rolling by connecting us to the right team at Google. CHM worked with them for five years in a group effort to negotiate the release. This team also helped us identify which specific version of the AlexNet source code to release. In fact, there have been many versions of AlexNet over the years. There are also repositories of code named “AlexNet” on GitHub, though many of these are not the original code, but recreations based on the famous paper.

CHM is proud to present the source code to the 2012 version of Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton’s AlexNet, which transformed the field of artificial intelligence. Access the source code on CHM’s GitHub page.

This original AlexNet source contains roughly 6,000 lines of CUDA code.

According to Wikipedia:

The model was trained for 90 epochs over a period of five to six days using two Nvidia GTX 580 GPUs (3GB each). These GPUs have a theoretical performance of 1.581 TFLOPS in float32 and were priced at US$500 upon release. Each forward pass of AlexNet required approximately 1.43 GFLOPs. Based on these values, the two GPUs together were theoretically capable of performing over 2,200 forward passes per second under ideal conditions.

Two GPUs that cost $1,000!
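The ‘over 2,200’ figure is easy to check from the numbers quoted above. A quick back-of-the-envelope calculation in Python, using only those quoted values (the variable names are my own):

```python
FLOPS_PER_GPU = 1.581e12           # theoretical float32 FLOP/s for one GTX 580
N_GPUS = 2
FLOPS_PER_FORWARD_PASS = 1.43e9    # approximately 1.43 GFLOPs per forward pass

# Theoretical peak throughput of both cards together.
peak_flops = N_GPUS * FLOPS_PER_GPU

# Upper bound on forward passes per second under ideal conditions.
passes_per_second = peak_flops / FLOPS_PER_FORWARD_PASS

print(f"{passes_per_second:.0f} forward passes per second")
# prints "2211 forward passes per second"
```

This is a theoretical ceiling rather than a measured rate: it ignores memory bandwidth, data loading and the backward pass.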

We can fast forward to 2025 and CUDA is now on version 12.8.

As SemiAnalysis has described, Nvidia’s leading competitor AMD struggles to get core AI software running reliably on its hardware:

AMD’s software experience is riddled with bugs rendering out of the box training with AMD is impossible. We were hopeful that AMD could emerge as a strong competitor to NVIDIA in training workloads, but, as of today, this is unfortunately not the case. The CUDA moat has yet to be crossed by AMD due to AMD’s weaker-than-expected software Quality Assurance (QA) culture and its challenging out of the box experience. As fast as AMD tries to fill in the CUDA moat, NVIDIA engineers are working overtime to deepen said moat with new features, libraries, and performance updates.

I’d just like to end with what is an underestimated factor - at least in some discussions - in CUDA’s success. We now have eighteen years of evidence of Nvidia’s commitment and consistent delivery. That’s something that no-one else can match.

For more, two previous posts (both part paid part free) discuss CUDA in more detail:

Demystifying GPU Compute Architectures

The complexity of modern GPUs needn’t obscure the essence of how these machines work. If we are to make the most of the advanced technology in these GPUs then we need a little less mystique.

Demystifying GPU Compute Software

This post looks at the major tools in the ecosystem including CUDA, ROCm, OpenCL, SYCL, oneAPI, and others. What are they? Who supports them? What is their history? How do they relate to each other?
