The Alchemy of Scale Transformation

Scale, economics, innovation and AI

There is a saying that is sometimes (mis)attributed to Stalin[1]:

Quantity has a quality all of its own.

We could rephrase this to say that changing the quantity of something changes its quality. Or perhaps more clearly, and without the alliteration, changing the scale of something changes its nature.

I have to come clean and admit that I’m continually intrigued by, borderline obsessed with, the effects of scale.

The impact of scale has come up time and time again in these posts. It has determined whether architectures become dominant or disappear completely. And, as we’ve seen, scale is right at the heart of Moore’s Law. It’s the massive scale of applications of semiconductors that has enabled Moore’s Law to continue for more than five decades.

We’ll return to how this enormous scale has come about later on. First though, a reminder of what scale, or lack of it, can mean, with two examples.

The Inertia of Small-Scale Economics

Starting with the small, sometimes a lack of scale can act as an inhibitor. This came to mind whilst researching a future post on a microcontroller architecture - the Intel 8051 - which was launched back in 1980. Any discussion of the Intel 8051 is usually accompanied by comments bemoaning the fact that it is still widely used today when there are much better alternatives available.

Why hasn’t the 8051 been completely replaced? Because often this isn’t economical. More precisely, the fixed costs of replacing the 8051 outweigh the potential benefits of switching to a more modern architecture.

Designs based on the 8051 still ship in the billions each year, but the economics of each individual deployment are often small-scale. Those small-scale economics mean that inertia wins.
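To make the fixed-cost argument concrete, here is a minimal sketch of the break-even arithmetic. The function names and every figure in it are hypothetical, chosen only for illustration; they are not data about any real 8051 design.

```python
def break_even_units(fixed_switch_cost, saving_per_unit):
    """Units that must ship before per-unit savings cover the one-off switching cost."""
    return fixed_switch_cost / saving_per_unit


def switch_is_worthwhile(fixed_switch_cost, saving_per_unit, units):
    """True when total savings over the product's life exceed the switching cost."""
    return saving_per_unit * units > fixed_switch_cost


# Hypothetical figures, for illustration only.
FIXED_SWITCH_COST = 200_000.0  # redesign, firmware port, re-validation
SAVING_PER_UNIT = 0.25         # assumed benefit of a more modern part, per unit

units_needed = break_even_units(FIXED_SWITCH_COST, SAVING_PER_UNIT)
small_run = switch_is_worthwhile(FIXED_SWITCH_COST, SAVING_PER_UNIT, 50_000)
large_run = switch_is_worthwhile(FIXED_SWITCH_COST, SAVING_PER_UNIT, 5_000_000)
```

Under these assumed numbers a design would need to ship 800,000 units before switching paid for itself, so a product with a 50,000-unit run stays on the older architecture while a 5,000,000-unit one would move.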

Large-Scale Economics as Stimulus for Innovation

More usually we notice the flip side of this, when scale stimulates innovation.

Just one example. When Google designed its first Tensor Processing Unit, what drove it to make such a radical change in designing its chips was the likely scale of the services that it wanted to offer using neural networks. Without application-specific hardware, these services would have been unaffordable.

The scale of these services didn’t just make it worthwhile investing in the development, manufacture, and deployment of a novel architecture; it created an imperative to do so.

Scale as an enabler of innovation probably seems obvious. Firms (and investors) would be expected to focus their efforts and their cash on products and services that have the prospect of becoming large enough to generate attractive returns.

But don’t we need to be a little careful here; can’t scale also act as an inhibitor of innovation? Once billions of users are running software on their x86-64 PCs doesn’t that make it much harder to change? Aren’t big firms less innovative than startups?

Scale can create barriers to entry, ‘lock-in’, and moats for incumbent businesses. But even in our example of the humble PC, the massive scale of the x86-64 business has supported continued innovation over decades in both fabrication and architecture. It’s innovations inside the incumbent firms (Intel and AMD) that have, in large part, rendered competitors’ innovations ineffective.

The Alchemy of Scale Transformation

This brings us to what is, to my mind, one of the most interesting and important features of modern computers[2].

The von Neumann computer architecture makes many economically smaller-scale tasks[3] - writing a document, editing a photo, sending an email, and countless more - soluble by a single tool, the general-purpose computer, whose economics permit its manufacture at a massive scale.

Two scale transformations are happening here. A single set of tools in a modern semiconductor fab is used to create a wide range of devices with the same basic technology used in phones, desktop computers, data centers, etc. Then, each of these devices can in turn be used for a wide range of tasks.
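A toy model may help show why pooling tasks onto one general-purpose device changes the economics. It assumes, purely for illustration, that every design carries the same fixed cost, that one general-purpose unit substitutes for one dedicated unit, and that the task volumes are as invented below; none of these figures come from real data.

```python
def cost_per_unit(fixed_cost, marginal_cost, volume):
    """Fixed cost spread over the units built, plus the cost of making each unit."""
    return fixed_cost / volume + marginal_cost


# Hypothetical figures, for illustration only.
FIXED_COST = 1_000_000_000.0  # assumed design and tooling cost per distinct device
MARGINAL_COST = 50.0          # assumed manufacturing cost per unit
task_volumes = {"documents": 1_000_000, "photos": 500_000, "email": 2_500_000}

# One dedicated machine per task: each design amortizes its fixed cost alone.
dedicated = {
    task: cost_per_unit(FIXED_COST, MARGINAL_COST, volume)
    for task, volume in task_volumes.items()
}

# One general-purpose machine: the fixed cost is spread over the pooled volume.
pooled_volume = sum(task_volumes.values())
shared = cost_per_unit(FIXED_COST, MARGINAL_COST, pooled_volume)
```

In this sketch the shared device is cheaper per unit than any of the dedicated ones, and the gap is widest for the lowest-volume task, which is the sense in which smaller-scale tasks get to borrow the scale of the whole.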

We could rephrase Marc Andreessen’s ‘Software is Eating the World’ to ‘the economics of the general purpose computer manufactured at massive scale and repurposed using different software tools allows it to replace many traditional solutions’. Nowhere near as catchy, but with some recognition of the importance of the economics of the hardware alongside the adaptability of software.

It’s this transformation and the resulting scale that has driven investment in the development of semiconductor technology which, in turn, has enabled Moore’s Law to continue for more than five decades.

AI Scale

Today, we can see a further extension of this in the development of tools such as Large Language Models. If the novelty of LLMs is what is at first most striking - we didn’t know that computers could do that! - their generality is also important[4]. Compared with traditional software, they potentially ‘solve’ more user problems than any single ‘traditional’ software tool.

These tools are once again expanding the scale of what technology can do with a common set of hardware and software.

Of course, scale is crucial to the effectiveness of these tools, as the Bitter Lesson sets out:

One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.

But it’s that generality and the resulting prospect of the massive economic scale of likely deployment of these models that are supporting the current jaw-dropping investment in GPUs. Not only is the potential scale of the deployment of these models huge, but so is the scale of the systems needed to train them.

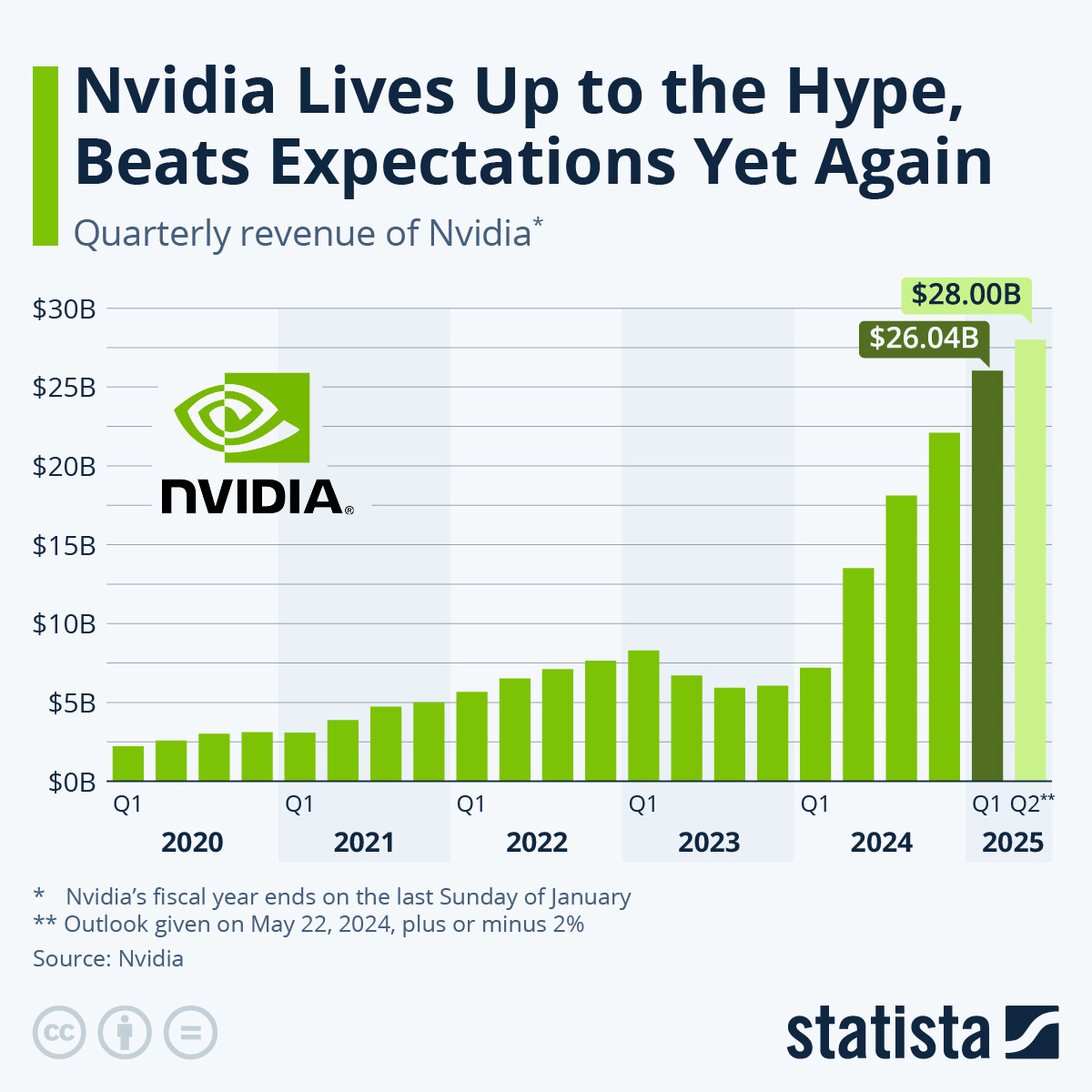

This in turn is leading to a change in the scale, and the nature, of firms - most obviously Nvidia - at the center of the development and deployment of these models.

In the 1980s Gordon Moore extrapolated that the size of the semiconductor industry would surpass the world’s output of goods and services sometime around 2045.

In 2024, just how large semiconductors and closely related industries can become, and the implications of this scale, are some of the most interesting and crucial questions of our time.

Photo credit: By Robert Scoble from Half Moon Bay, USA - Microsoft Bing Maps' datacenter, CC BY 2.0, https://commons.wikimedia.org/w/index.php?curid=92293154

[1] Why this is probably a misattribution: https://www.quora.com/Who-said-Quantity-has-a-quality-all-its-own

[2] Including smartphones and so on as ‘computers’.

[3] Note ‘smaller-scale’ rather than ‘small-scale’ tasks here. Email, for example, is an economically significant task but its economics are changed fundamentally by not needing to have a dedicated ‘email’ machine, alongside a dedicated word processor, photo editor, and so on.

[4] And this is before we get anywhere close to Artificial General Intelligence.

What an excellent article, Babbage. Your point about hardware in regard to “software eating the world” is spot on. The GPU/transformer world and other neural processors are turning computing upside down.

Re the quotation attributed to Stalin, while there's lots of discussion, it appears that Stalin did use the quotation even before WWII, per this post in the conversation you link to: https://qr.ae/psV7pQ

Who knows, of course, whether he devised the quote, but you'll surely agree that coming from Stalin, it has a resonance far more memorable than in the mouths or writings of just about anyone else.